mirror of

https://github.com/9001/copyparty.git

synced 2025-10-27 18:13:43 +00:00

Compare commits

170 Commits

| Author | SHA1 | Date |
|---|---|---|
| | b28533f850 | |
| | bd8c7e538a | |
| | 89e48cff24 | |
| | ae90a7b7b6 | |
| | 6fc1be04da | |
| | 0061d29534 | |
| | a891f34a93 | |
| | d6a1e62a95 | |
| | cda36ea8b4 | |
| | 909a76434a | |
| | 39348ef659 | |
| | 99d30edef3 | |
| | b63ab15bf9 | |
| | 485cb4495c | |
| | df018eb1f2 | |
| | 49aa47a9b8 | |
| | 7d20eb202a | |
| | c533da9129 | |
| | 5cba31a814 | |
| | 1d824cb26c | |
| | 83b903d60e | |
| | 9c8ccabe8e | |
| | b1f2c4e70d | |
| | 273ca0c8da | |
| | d6f516b34f | |
| | 83127858ca | |
| | d89329757e | |
| | 49ffec5320 | |
| | 2eaae2b66a | |
| | ea4441e25c | |
| | e5f34042f9 | |
| | 271096874a | |
| | 8efd780a72 | |
| | 41bcf7308d | |
| | d102bb3199 | |
| | d0bed95415 | |
| | 2528729971 | |
| | 292c18b3d0 | |
| | 0be7c5e2d8 | |
| | eb5aaddba4 | |
| | d8fd82bcb5 | |
| | 97be495861 | |
| | 8b53c159fc | |
| | 81e281f703 | |
| | 3948214050 | |
| | c5e9a643e7 | |
| | d25881d5c3 | |
| | 38d8d9733f | |
| | 118ebf668d | |
| | a86f09fa46 | |
| | dd4fb35c8f | |
| | 621eb4cf95 | |
| | deea66ad0b | |
| | bf99445377 | |
| | 7b54a63396 | |
| | 0fcb015f9a | |
| | 0a22b1ffb6 | |
| | 68cecc52ab | |
| | 53657ccfff | |
| | 96223fda01 | |
| | 374ff3433e | |
| | 5d63949e98 | |
| | 6b065d507d | |
| | e79997498a | |
| | f7ee02ec35 | |
| | 69dc433e1c | |
| | c880cd848c | |
| | 5752b6db48 | |
| | b36f905eab | |
| | 483dd527c6 | |
| | e55678e28f | |
| | 3f4a8b9d6f | |
| | 02a856ecb4 | |
| | 4dff726310 | |
| | cbc449036f | |
| | 8f53152220 | |
| | bbb1e165d6 | |
| | fed8d94885 | |
| | 58040cc0ed | |
| | 03d692db66 | |
| | 903f8e8453 | |
| | 405ae1308e | |
| | 8a0f583d71 | |
| | b6d7017491 | |
| | 0f0217d203 | |
| | a203e33347 | |
| | 3b8f697dd4 | |
| | 78ba16f722 | |
| | 0fcfe79994 | |
| | c0e6df4b63 | |
| | 322abdcb43 | |
| | 31100787ce | |
| | c57d721be4 | |
| | 3b5a03e977 | |
| | ed807ee43e | |
| | 073c130ae6 | |
| | 8810e0be13 | |
| | f93016ab85 | |
| | b19cf260c2 | |
| | db03e1e7eb | |
| | e0d975e36a | |
| | cfeb15259f | |
| | 3b3f8fc8fb | |
| | 88bd2c084c | |
| | bd367389b0 | |
| | 58ba71a76f | |
| | d03e34d55d | |
| | 24f239a46c | |
| | 2c0826f85a | |
| | c061461d01 | |
| | e7982a04fe | |
| | 33b91a7513 | |
| | 9bb1323e44 | |
| | e62bb807a5 | |
| | 3fc0d2cc4a | |
| | 0c786b0766 | |
| | 68c7528911 | |
| | 26e18ae800 | |
| | c30dc0b546 | |
| | f94aa46a11 | |
| | 403261a293 | |
| | c7d9cbb11f | |
| | 57e1c53cbb | |
| | 0754b553dd | |
| | 50661d941b | |
| | c5db7c1a0c | |
| | 2cef5365f7 | |
| | fbc4e94007 | |
| | 037ed5a2ad | |
| | 69dfa55705 | |
| | a79a5c4e3e | |
| | 7e80eabfe6 | |
| | 375b72770d | |
| | e2dd683def | |
| | 9eba50c6e4 | |
| | 5a579dba52 | |
| | e86c719575 | |
| | 0e87f35547 | |
| | b6d3d791a5 | |
| | c9c3302664 | |
| | c3e4d65b80 | |
| | 27a03510c5 | |
| | ed7727f7cb | |
| | 127ec10c0d | |
| | 5a9c0ad225 | |
| | 7e8daf650e | |
| | 0cf737b4ce | |
| | 74635e0113 | |
| | e5c4f49901 | |
| | e4654ee7f1 | |
| | e5d05c05ed | |
| | 73c4f99687 | |
| | 28c12ef3bf | |
| | eed82dbb54 | |
| | 2c4b4ab928 | |
| | 505a8fc6f6 | |
| | e4801d9b06 | |
| | 04f1b2cf3a | |
| | c06d928bb5 | |
| | ab09927e7b | |
| | 779437db67 | |
| | 28cbdb652e | |
| | 2b2415a7d8 | |
| | 746a8208aa | |
| | a2a041a98a | |
| | 10b436e449 | |
| | 4d62b34786 | |
| | 0546210687 | |
| | f8c11faada | |
| | 16d6e9be1f | |

2

.vscode/launch.py

vendored


@@ -12,7 +12,7 @@ sys.path.insert(0, os.getcwd())

|

|||||||

import jstyleson

|

import jstyleson

|

||||||

from copyparty.__main__ import main as copyparty

|

from copyparty.__main__ import main as copyparty

|

||||||

|

|

||||||

with open(".vscode/launch.json", "r") as f:

|

with open(".vscode/launch.json", "r", encoding="utf-8") as f:

|

||||||

tj = f.read()

|

tj = f.read()

|

||||||

|

|

||||||

oj = jstyleson.loads(tj)

|

oj = jstyleson.loads(tj)

|

||||||

|

|||||||

269

README.md


@@ -9,9 +9,12 @@

|

|||||||

turn your phone or raspi into a portable file server with resumable uploads/downloads using IE6 or any other browser

|

turn your phone or raspi into a portable file server with resumable uploads/downloads using IE6 or any other browser

|

||||||

|

|

||||||

* server runs on anything with `py2.7` or `py3.3+`

|

* server runs on anything with `py2.7` or `py3.3+`

|

||||||

* *resumable* uploads need `firefox 12+` / `chrome 6+` / `safari 6+` / `IE 10+`

|

* browse/upload with IE4 / netscape4.0 on win3.11 (heh)

|

||||||

|

* *resumable* uploads need `firefox 34+` / `chrome 41+` / `safari 7+` for full speed

|

||||||

* code standard: `black`

|

* code standard: `black`

|

||||||

|

|

||||||

|

📷 **screenshots:** [browser](#the-browser) // [upload](#uploading) // [thumbnails](#thumbnails) // [md-viewer](#markdown-viewer) // [search](#searching) // [fsearch](#file-search) // [zip-DL](#zip-downloads) // [ie4](#browser-support)

|

||||||

|

|

||||||

|

|

||||||

## readme toc

|

## readme toc

|

||||||

|

|

||||||

@@ -20,8 +23,18 @@ turn your phone or raspi into a portable file server with resumable uploads/down

|

|||||||

* [notes](#notes)

|

* [notes](#notes)

|

||||||

* [status](#status)

|

* [status](#status)

|

||||||

* [bugs](#bugs)

|

* [bugs](#bugs)

|

||||||

* [usage](#usage)

|

* [general bugs](#general-bugs)

|

||||||

|

* [not my bugs](#not-my-bugs)

|

||||||

|

* [the browser](#the-browser)

|

||||||

|

* [tabs](#tabs)

|

||||||

|

* [hotkeys](#hotkeys)

|

||||||

|

* [tree-mode](#tree-mode)

|

||||||

|

* [thumbnails](#thumbnails)

|

||||||

* [zip downloads](#zip-downloads)

|

* [zip downloads](#zip-downloads)

|

||||||

|

* [uploading](#uploading)

|

||||||

|

* [file-search](#file-search)

|

||||||

|

* [markdown viewer](#markdown-viewer)

|

||||||

|

* [other tricks](#other-tricks)

|

||||||

* [searching](#searching)

|

* [searching](#searching)

|

||||||

* [search configuration](#search-configuration)

|

* [search configuration](#search-configuration)

|

||||||

* [metadata from audio files](#metadata-from-audio-files)

|

* [metadata from audio files](#metadata-from-audio-files)

|

||||||

@@ -29,7 +42,10 @@ turn your phone or raspi into a portable file server with resumable uploads/down

|

|||||||

* [complete examples](#complete-examples)

|

* [complete examples](#complete-examples)

|

||||||

* [browser support](#browser-support)

|

* [browser support](#browser-support)

|

||||||

* [client examples](#client-examples)

|

* [client examples](#client-examples)

|

||||||

|

* [up2k](#up2k)

|

||||||

* [dependencies](#dependencies)

|

* [dependencies](#dependencies)

|

||||||

|

* [optional dependencies](#optional-dependencies)

|

||||||

|

* [install recommended deps](#install-recommended-deps)

|

||||||

* [optional gpl stuff](#optional-gpl-stuff)

|

* [optional gpl stuff](#optional-gpl-stuff)

|

||||||

* [sfx](#sfx)

|

* [sfx](#sfx)

|

||||||

* [sfx repack](#sfx-repack)

|

* [sfx repack](#sfx-repack)

|

||||||

@@ -43,25 +59,31 @@ turn your phone or raspi into a portable file server with resumable uploads/down

|

|||||||

|

|

||||||

download [copyparty-sfx.py](https://github.com/9001/copyparty/releases/latest/download/copyparty-sfx.py) and you're all set!

|

download [copyparty-sfx.py](https://github.com/9001/copyparty/releases/latest/download/copyparty-sfx.py) and you're all set!

|

||||||

|

|

||||||

running the sfx without arguments (for example doubleclicking it on Windows) will let anyone access the current folder; see `-h` for help if you want accounts and volumes etc

|

running the sfx without arguments (for example doubleclicking it on Windows) will give everyone full access to the current folder; see `-h` for help if you want accounts and volumes etc

|

||||||

|

|

||||||

you may also want these, especially on servers:

|

you may also want these, especially on servers:

|

||||||

* [contrib/systemd/copyparty.service](contrib/systemd/copyparty.service) to run copyparty as a systemd service

|

* [contrib/systemd/copyparty.service](contrib/systemd/copyparty.service) to run copyparty as a systemd service

|

||||||

* [contrib/nginx/copyparty.conf](contrib/nginx/copyparty.conf) to reverse-proxy behind nginx (for legit https)

|

* [contrib/nginx/copyparty.conf](contrib/nginx/copyparty.conf) to reverse-proxy behind nginx (for better https)

|

||||||

|

|

||||||

|

|

||||||

## notes

|

## notes

|

||||||

|

|

||||||

* iPhone/iPad: use Firefox to download files

|

general:

|

||||||

* Android-Chrome: set max "parallel uploads" for 200% upload speed (android bug)

|

|

||||||

* Android-Firefox: takes a while to select files (in order to avoid the above android-chrome issue)

|

|

||||||

* Desktop-Firefox: may use gigabytes of RAM if your connection is great and your files are massive

|

|

||||||

* paper-printing is affected by dark/light-mode! use lightmode for color, darkmode for grayscale

|

* paper-printing is affected by dark/light-mode! use lightmode for color, darkmode for grayscale

|

||||||

* because no browsers currently implement the media-query to do this properly orz

|

* because no browsers currently implement the media-query to do this properly orz

|

||||||

|

|

||||||

|

browser-specific:

|

||||||

|

* iPhone/iPad: use Firefox to download files

|

||||||

|

* Android-Chrome: increase "parallel uploads" for higher speed (android bug)

|

||||||

|

* Android-Firefox: takes a while to select files (their fix for ☝️)

|

||||||

|

* Desktop-Firefox: ~~may use gigabytes of RAM if your files are massive~~ *seems to be OK now*

|

||||||

|

* Desktop-Firefox: may stop you from deleting folders you've uploaded until you visit `about:memory` and click `Minimize memory usage`

|

||||||

|

|

||||||

|

|

||||||

## status

|

## status

|

||||||

|

|

||||||

|

summary: all planned features work! now please enjoy the bloatening

|

||||||

|

|

||||||

* backend stuff

|

* backend stuff

|

||||||

* ☑ sanic multipart parser

|

* ☑ sanic multipart parser

|

||||||

* ☑ load balancer (multiprocessing)

|

* ☑ load balancer (multiprocessing)

|

||||||

@@ -79,9 +101,12 @@ you may also want these, especially on servers:

|

|||||||

* browser

|

* browser

|

||||||

* ☑ tree-view

|

* ☑ tree-view

|

||||||

* ☑ media player

|

* ☑ media player

|

||||||

* ✖ thumbnails

|

* ☑ thumbnails

|

||||||

* ✖ SPA (browse while uploading)

|

* ☑ images using Pillow

|

||||||

* currently safe using the file-tree on the left only, not folders in the file list

|

* ☑ videos using FFmpeg

|

||||||

|

* ☑ cache eviction (max-age; maybe max-size eventually)

|

||||||

|

* ☑ SPA (browse while uploading)

|

||||||

|

* if you use the file-tree on the left only, not folders in the file list

|

||||||

* server indexing

|

* server indexing

|

||||||

* ☑ locate files by contents

|

* ☑ locate files by contents

|

||||||

* ☑ search by name/path/date/size

|

* ☑ search by name/path/date/size

|

||||||

@@ -90,26 +115,70 @@ you may also want these, especially on servers:

|

|||||||

* ☑ viewer

|

* ☑ viewer

|

||||||

* ☑ editor (sure why not)

|

* ☑ editor (sure why not)

|

||||||

|

|

||||||

summary: it works! you can use it! (but technically not even close to beta)

|

|

||||||

|

|

||||||

|

|

||||||

# bugs

|

# bugs

|

||||||

|

|

||||||

* Windows: python 3.7 and older cannot read tags with ffprobe, so use mutagen or upgrade

|

* Windows: python 3.7 and older cannot read tags with ffprobe, so use mutagen or upgrade

|

||||||

* Windows: python 2.7 cannot index non-ascii filenames with `-e2d`

|

* Windows: python 2.7 cannot index non-ascii filenames with `-e2d`

|

||||||

* Windows: python 2.7 cannot handle filenames with mojibake

|

* Windows: python 2.7 cannot handle filenames with mojibake

|

||||||

|

|

||||||

|

## general bugs

|

||||||

|

|

||||||

|

* all volumes must exist / be available on startup; up2k (mtp especially) gets funky otherwise

|

||||||

|

* cannot mount something at `/d1/d2/d3` unless `d2` exists inside `d1`

|

||||||

* hiding the contents at url `/d1/d2/d3` using `-v :d1/d2/d3:cd2d` has the side-effect of creating databases (for files/tags) inside folders d1 and d2, and those databases take precedence over the main db at the top of the vfs - this means all files in d2 and below will be reindexed unless you already had a vfs entry at or below d2

|

* hiding the contents at url `/d1/d2/d3` using `-v :d1/d2/d3:cd2d` has the side-effect of creating databases (for files/tags) inside folders d1 and d2, and those databases take precedence over the main db at the top of the vfs - this means all files in d2 and below will be reindexed unless you already had a vfs entry at or below d2

|

||||||

* probably more, pls let me know

|

* probably more, pls let me know

|

||||||

|

|

||||||

|

## not my bugs

|

||||||

|

|

||||||

# usage

|

* Windows: msys2-python 3.8.6 occasionally throws "RuntimeError: release unlocked lock" when leaving a scoped mutex in up2k

|

||||||

|

* this is an msys2 bug, the regular windows edition of python is fine

|

||||||

|

|

||||||

|

|

||||||

|

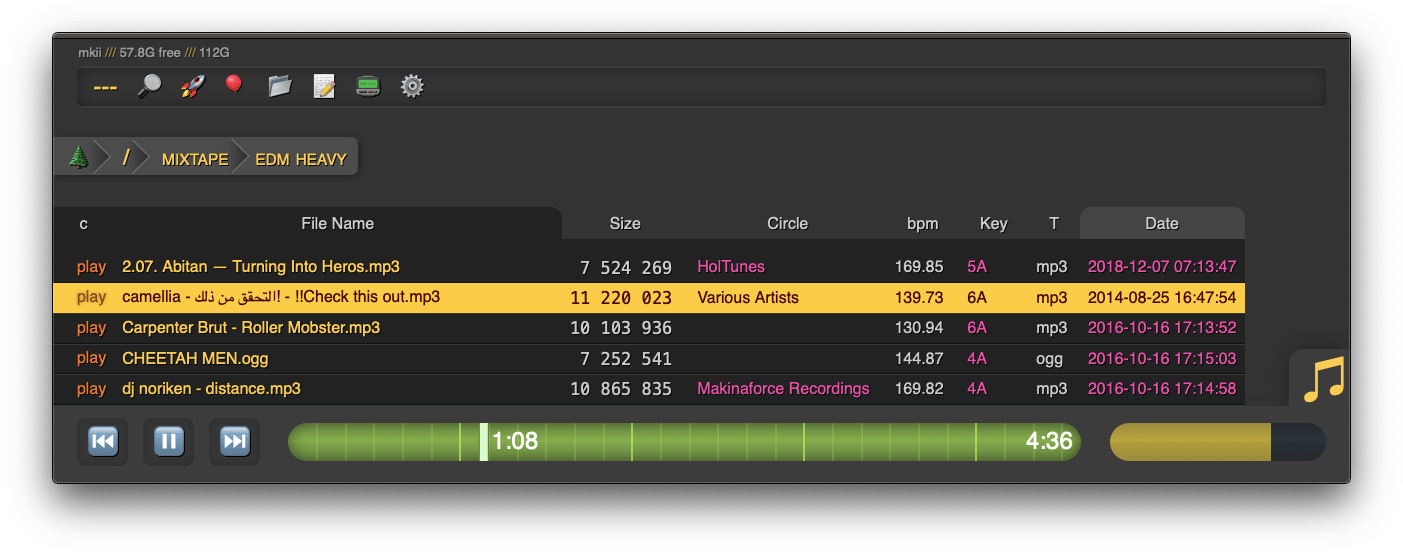

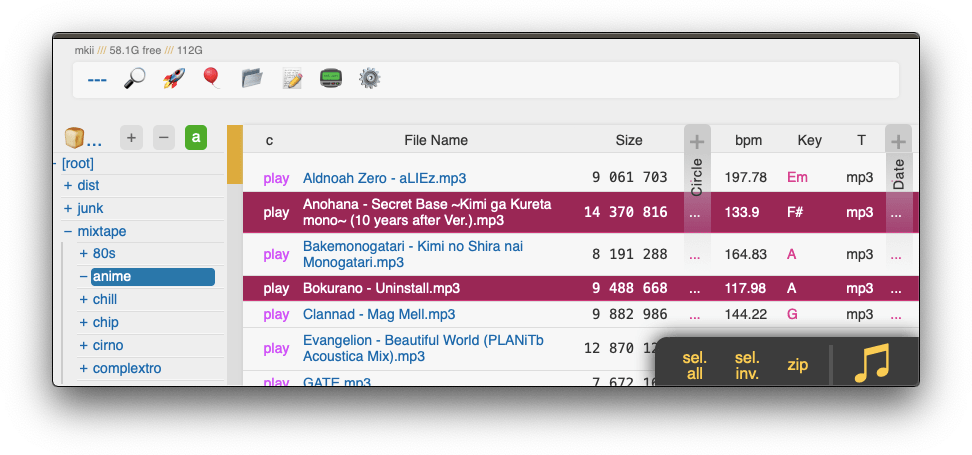

# the browser

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

## tabs

|

||||||

|

|

||||||

|

* `[🔎]` search by size, date, path/name, mp3-tags ... see [searching](#searching)

|

||||||

|

* `[🚀]` and `[🎈]` are the uploaders, see [uploading](#uploading)

|

||||||

|

* `[📂]` mkdir, create directories

|

||||||

|

* `[📝]` new-md, create a new markdown document

|

||||||

|

* `[📟]` send-msg, either to server-log or into textfiles if `--urlform save`

|

||||||

|

* `[⚙️]` client configuration options

|

||||||

|

|

||||||

|

|

||||||

|

## hotkeys

|

||||||

|

|

||||||

the browser has the following hotkeys

|

the browser has the following hotkeys

|

||||||

* `0..9` jump to 10%..90%

|

|

||||||

* `U/O` skip 10sec back/forward

|

|

||||||

* `J/L` prev/next song

|

|

||||||

* `I/K` prev/next folder

|

* `I/K` prev/next folder

|

||||||

* `P` parent folder

|

* `P` parent folder

|

||||||

|

* `G` toggle list / grid view

|

||||||

|

* `T` toggle thumbnails / icons

|

||||||

|

* when playing audio:

|

||||||

|

* `0..9` jump to 10%..90%

|

||||||

|

* `U/O` skip 10sec back/forward

|

||||||

|

* `J/L` prev/next song

|

||||||

|

* `J` also starts playing the folder

|

||||||

|

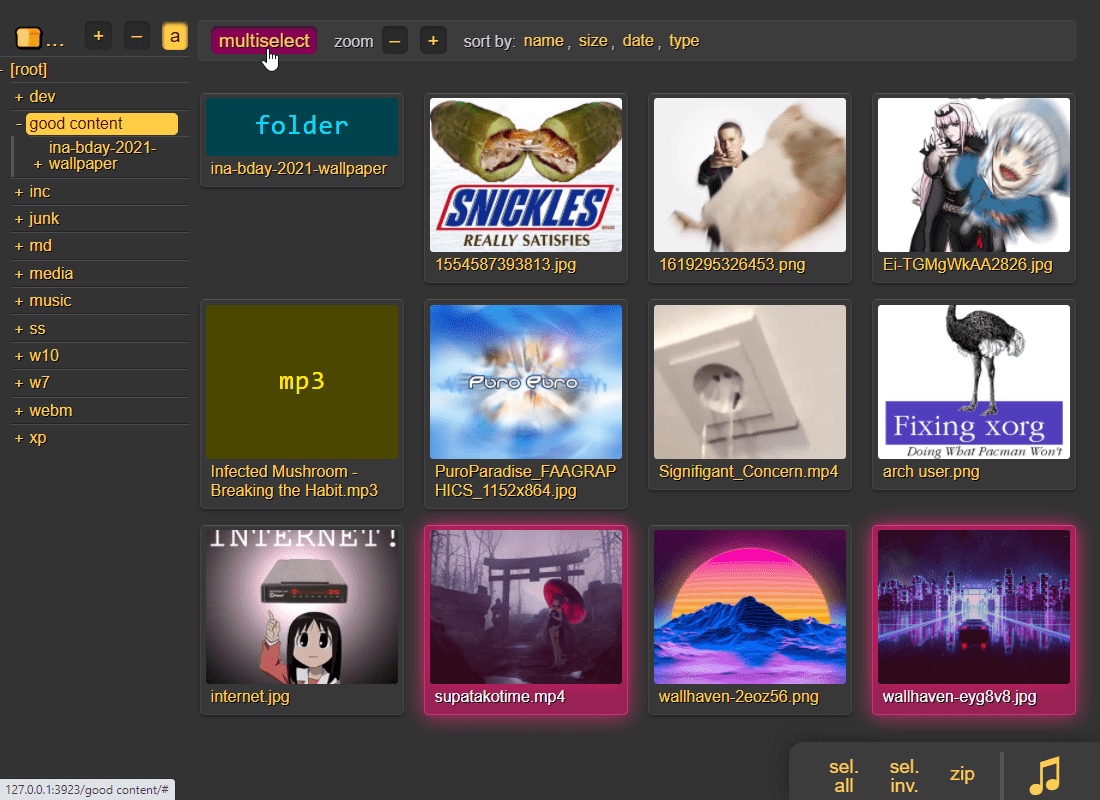

* in the grid view:

|

||||||

|

* `S` toggle multiselect

|

||||||

|

* `A/D` zoom

|

||||||

|

|

||||||

|

|

||||||

|

## tree-mode

|

||||||

|

|

||||||

|

by default there's a breadcrumbs path; you can replace this with a tree-browser sidebar thing by clicking the 🌲

|

||||||

|

|

||||||

|

click `[-]` and `[+]` to adjust the size, and the `[a]` toggles if the tree should widen dynamically as you go deeper or stay fixed-size

|

||||||

|

|

||||||

|

|

||||||

|

## thumbnails

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

it does static images with Pillow and uses FFmpeg for video files, so you may want to `--no-thumb` or maybe just `--no-vthumb` depending on how destructive your users are

|

||||||

|

|

||||||

|

|

||||||

## zip downloads

|

## zip downloads

|

||||||

@@ -128,12 +197,80 @@ the `zip` link next to folders can produce various types of zip/tar files using

|

|||||||

* `zip_crc` will take longer to download since the server has to read each file twice

|

* `zip_crc` will take longer to download since the server has to read each file twice

|

||||||

* please let me know if you find a program old enough to actually need this

|

* please let me know if you find a program old enough to actually need this

|

||||||

|

|

||||||

|

you can also zip a selection of files or folders by clicking them in the browser, that brings up a selection editor and zip button in the bottom right

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

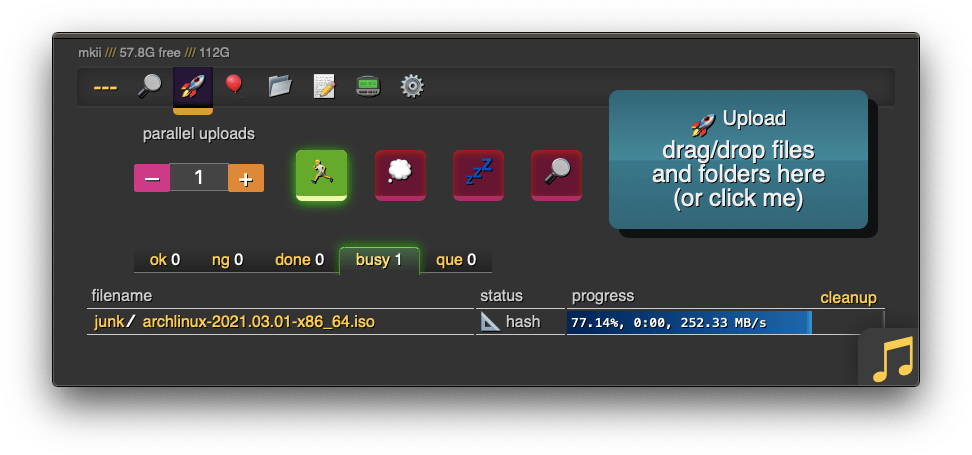

## uploading

|

||||||

|

|

||||||

|

two upload methods are available in the html client:

|

||||||

|

* `🎈 bup`, the basic uploader, supports almost every browser since netscape 4.0

|

||||||

|

* `🚀 up2k`, the fancy one

|

||||||

|

|

||||||

|

up2k has several advantages:

|

||||||

|

* you can drop folders into the browser (files are added recursively)

|

||||||

|

* files are processed in chunks, and each chunk is checksummed

|

||||||

|

* uploads resume if they are interrupted (for example by a reboot)

|

||||||

|

* server detects any corruption; the client reuploads affected chunks

|

||||||

|

* the client doesn't upload anything that already exists on the server

|

||||||

|

* the last-modified timestamp of the file is preserved

|

||||||

|

|

||||||

|

see [up2k](#up2k) for details on how it works

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

**protip:** you can avoid scaring away users with [docs/minimal-up2k.html](docs/minimal-up2k.html) which makes it look [much simpler](https://user-images.githubusercontent.com/241032/118311195-dd6ca380-b4ef-11eb-86f3-75a3ff2e1332.png)

|

||||||

|

|

||||||

|

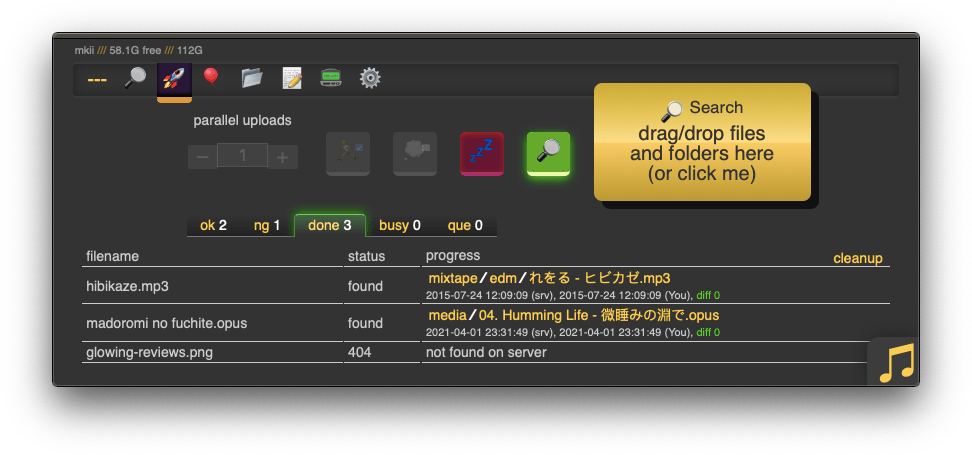

the up2k UI is the epitome of polished intuitive experiences:

|

||||||

|

* "parallel uploads" specifies how many chunks to upload at the same time

|

||||||

|

* `[🏃]` analysis of other files should continue while one is uploading

|

||||||

|

* `[💭]` ask for confirmation before files are added to the list

|

||||||

|

* `[💤]` sync uploading between other copyparty tabs so only one is active

|

||||||

|

* `[🔎]` switch between upload and file-search mode

|

||||||

|

|

||||||

|

and then there's the tabs below it,

|

||||||

|

* `[ok]` is uploads which completed successfully

|

||||||

|

* `[ng]` is the uploads which failed / got rejected (already exists, ...)

|

||||||

|

* `[done]` shows a combined list of `[ok]` and `[ng]`, chronological order

|

||||||

|

* `[busy]` files which are currently hashing, pending-upload, or uploading

|

||||||

|

* plus up to 3 entries each from `[done]` and `[que]` for context

|

||||||

|

* `[que]` is all the files that are still queued

|

||||||

|

|

||||||

|

### file-search

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

in the `[🚀 up2k]` tab, after toggling the `[🔎]` switch green, any files/folders you drop onto the dropzone will be hashed on the client-side. Each hash is sent to the server which checks if that file exists somewhere already

|

||||||

|

|

||||||

|

files go into `[ok]` if they exist (and you get a link to where it is), otherwise they land in `[ng]`

|

||||||

|

* the main reason filesearch is combined with the uploader is cause the code was too spaghetti to separate it out somewhere else, this is no longer the case but now i've warmed up to the idea too much

|

||||||

|

|

||||||

|

adding the same file multiple times is blocked, so if you first search for a file and then decide to upload it, you have to click the `[cleanup]` button to discard `[done]` files

|

||||||

|

|

||||||

|

note that since up2k has to read the file twice, `[🎈 bup]` can be up to 2x faster in extreme cases (if your internet connection is faster than the read-speed of your HDD)

|

||||||

|

|

||||||

|

up2k has saved a few uploads from becoming corrupted in-transfer already; caught an android phone on wifi redhanded in wireshark with a bitflip, however bup with https would *probably* have noticed as well thanks to tls also functioning as an integrity check

|

||||||

|

|

||||||

|

|

||||||

|

## markdown viewer

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

* the document preview has a max-width which is the same as an A4 paper when printed

|

||||||

|

|

||||||

|

|

||||||

|

## other tricks

|

||||||

|

|

||||||

|

* you can link a particular timestamp in an audio file by adding it to the URL, such as `&20` / `&20s` / `&1m20` / `&t=1:20` after the `.../#af-c8960dab`
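
the timestamp forms listed above can be normalized to seconds with a small parser. this is only a sketch of the idea, not copyparty's own code: the function name `parse_audio_timestamp` is hypothetical, and it only handles the four forms shown in the bullet (plain seconds, `20s`, `1m20`, and `t=1:20`)

```python
import re


def parse_audio_timestamp(suffix):
    """Turn a URL suffix such as '&1m20' or '&t=1:20' into whole seconds."""
    arg = suffix.lstrip("&")
    if arg.startswith("t="):
        arg = arg[2:]

    if ":" in arg:
        # colon form: each field is worth 60x the one after it
        seconds = 0
        for part in arg.split(":"):
            seconds = seconds * 60 + int(part)
        return seconds

    # unit form: optional minutes, then seconds with an optional trailing "s"
    m = re.fullmatch(r"(?:(\d+)m)?(?:(\d+)s?)?", arg)
    if not arg or m is None:
        raise ValueError("unrecognized timestamp: %r" % (suffix,))

    minutes, secs = (int(g) if g else 0 for g in m.groups())
    return minutes * 60 + secs


for example in ("&20", "&20s", "&1m20", "&t=1:20"):
    print(example, parse_audio_timestamp(example))
```

anything outside those four forms raises `ValueError` rather than guessing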

|

||||||

|

|

||||||

|

|

||||||

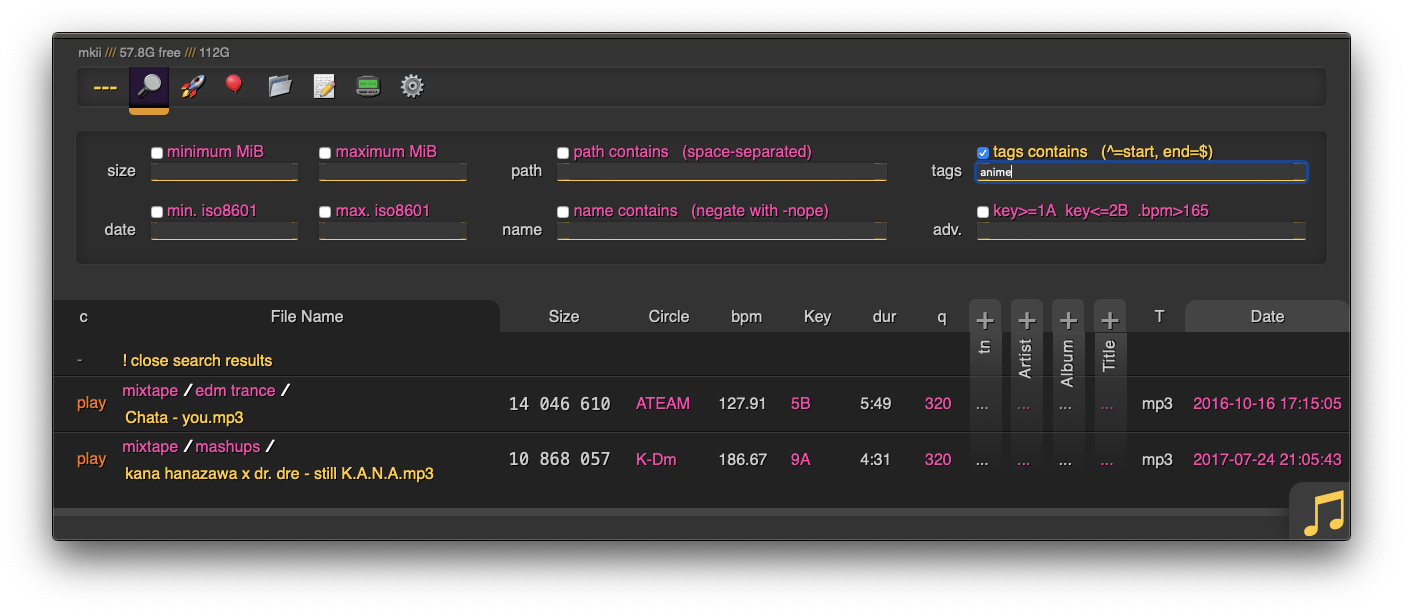

# searching

|

# searching

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

when started with `-e2dsa` copyparty will scan/index all your files. This avoids duplicates on upload, and also makes the volumes searchable through the web-ui:

|

when started with `-e2dsa` copyparty will scan/index all your files. This avoids duplicates on upload, and also makes the volumes searchable through the web-ui:

|

||||||

* make search queries by `size`/`date`/`directory-path`/`filename`, or...

|

* make search queries by `size`/`date`/`directory-path`/`filename`, or...

|

||||||

* drag/drop a local file to see if the same contents exist somewhere on the server (you get the URL if it does)

|

* drag/drop a local file to see if the same contents exist somewhere on the server, see [file-search](#file-search)

|

||||||

|

|

||||||

path/name queries are space-separated, AND'ed together, and words are negated with a `-` prefix, so for example:

|

path/name queries are space-separated, AND'ed together, and words are negated with a `-` prefix, so for example:

|

||||||

* path: `shibayan -bossa` finds all files where one of the folders contains `shibayan` but filters out any results where `bossa` exists somewhere in the path

|

* path: `shibayan -bossa` finds all files where one of the folders contains `shibayan` but filters out any results where `bossa` exists somewhere in the path
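
the matching rule described above (space-separated words, AND'ed together, `-` prefix to negate) can be sketched like this. it is a plain-python illustration and not the server's real query code, which runs against its file index; `path_query_matches` is a hypothetical name, and case-insensitive substring matching is an assumption

```python
def path_query_matches(query, path):
    """Return True if `path` satisfies every word in `query`."""
    haystack = path.lower()  # assumption: matching ignores case
    for word in query.lower().split():
        if word.startswith("-") and len(word) > 1:
            # negated word: must NOT appear anywhere in the path
            if word[1:] in haystack:
                return False
        elif word not in haystack:
            # regular word: must appear somewhere in the path
            return False
    return True


print(path_query_matches("shibayan -bossa", "music/shibayan/album"))
print(path_query_matches("shibayan -bossa", "music/shibayan/bossa nova"))
```

the first call matches and the second does not, since `bossa` appears in the second path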

|

||||||

@@ -161,6 +298,8 @@ the same arguments can be set as volume flags, in addition to `d2d` and `d2t` fo

|

|||||||

|

|

||||||

`e2tsr` is probably always overkill, since `e2ds`/`e2dsa` would pick up any file modifications and cause `e2ts` to reindex those

|

`e2tsr` is probably always overkill, since `e2ds`/`e2dsa` would pick up any file modifications and cause `e2ts` to reindex those

|

||||||

|

|

||||||

|

the rescan button in the admin panel has no effect unless the volume has `-e2ds` or higher

|

||||||

|

|

||||||

|

|

||||||

## metadata from audio files

|

## metadata from audio files

|

||||||

|

|

||||||

@@ -178,6 +317,7 @@ see the beautiful mess of a dictionary in [mtag.py](https://github.com/9001/copy

|

|||||||

`--no-mutagen` disables mutagen and uses ffprobe instead, which...

|

`--no-mutagen` disables mutagen and uses ffprobe instead, which...

|

||||||

* is about 20x slower than mutagen

|

* is about 20x slower than mutagen

|

||||||

* catches a few tags that mutagen doesn't

|

* catches a few tags that mutagen doesn't

|

||||||

|

* melodic key, video resolution, framerate, pixfmt

|

||||||

* avoids pulling any GPL code into copyparty

|

* avoids pulling any GPL code into copyparty

|

||||||

* more importantly runs ffprobe on incoming files which is bad if your ffmpeg has a cve

|

* more importantly runs ffprobe on incoming files which is bad if your ffmpeg has a cve

|

||||||

|

|

||||||

@@ -190,6 +330,11 @@ copyparty can invoke external programs to collect additional metadata for files

|

|||||||

* `-mtp key=f,t5,~/bin/audio-key.py` uses `~/bin/audio-key.py` to get the `key` tag, replacing any existing metadata tag (`f,`), aborting if it takes longer than 5sec (`t5,`)

|

* `-mtp key=f,t5,~/bin/audio-key.py` uses `~/bin/audio-key.py` to get the `key` tag, replacing any existing metadata tag (`f,`), aborting if it takes longer than 5sec (`t5,`)

|

||||||

* `-v ~/music::r:cmtp=.bpm=~/bin/audio-bpm.py:cmtp=key=f,t5,~/bin/audio-key.py` both as a per-volume config wow this is getting ugly

|

* `-v ~/music::r:cmtp=.bpm=~/bin/audio-bpm.py:cmtp=key=f,t5,~/bin/audio-key.py` both as a per-volume config wow this is getting ugly

|

||||||

|

|

||||||

|

*but wait, there's more!* `-mtp` can be used for non-audio files as well using the `a` flag: `ay` only do audio files, `an` only do non-audio files, or `ad` do all files (d as in dontcare)

|

||||||

|

|

||||||

|

* `-mtp ext=an,~/bin/file-ext.py` runs `~/bin/file-ext.py` to get the `ext` tag only if file is not audio (`an`)

|

||||||

|

* `-mtp arch,built,ver,orig=an,eexe,edll,~/bin/exe.py` runs `~/bin/exe.py` to get properties about windows-binaries only if file is not audio (`an`) and file extension is exe or dll
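
to make the `-mtp` syntax a bit less ugly to read, here is a sketch that splits one of the values above into its parts. it is not how copyparty parses the option internally; `parse_mtp` and the dictionary keys are hypothetical, the default of "audio files only" is inferred from the text above, and a command path containing a comma would break this simple split

```python
def parse_mtp(spec):
    """Split an -mtp value like 'key=f,t5,~/bin/audio-key.py' into its parts."""
    tags, _, rest = spec.partition("=")
    parts = rest.split(",")
    flags, command = parts[:-1], parts[-1]  # the command is the last field

    cfg = {
        "tags": tags.split(","),
        "command": command,
        "force": False,   # f: replace any existing metadata tag
        "timeout": None,  # tN: abort after N seconds
        "audio": "y",     # ay / an / ad; "y" as default is an assumption
        "exts": [],       # eEXT: only run for these file extensions
    }
    for flag in flags:
        if flag == "f":
            cfg["force"] = True
        elif flag in ("ay", "an", "ad"):
            cfg["audio"] = flag[1]
        elif flag.startswith("t") and flag[1:].isdigit():
            cfg["timeout"] = int(flag[1:])
        elif flag.startswith("e") and len(flag) > 1:
            cfg["exts"].append(flag[1:])
        else:
            raise ValueError("unknown mtp flag: %r" % (flag,))
    return cfg


print(parse_mtp("key=f,t5,~/bin/audio-key.py"))
print(parse_mtp("arch,built,ver,orig=an,eexe,edll,~/bin/exe.py"))
```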

|

||||||

|

|

||||||

|

|

||||||

## complete examples

|

## complete examples

|

||||||

|

|

||||||

@@ -199,6 +344,8 @@ copyparty can invoke external programs to collect additional metadata for files

|

|||||||

|

|

||||||

# browser support

|

# browser support

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

`ie` = internet-explorer, `ff` = firefox, `c` = chrome, `iOS` = iPhone/iPad, `Andr` = Android

|

`ie` = internet-explorer, `ff` = firefox, `c` = chrome, `iOS` = iPhone/iPad, `Andr` = Android

|

||||||

|

|

||||||

| feature | ie6 | ie9 | ie10 | ie11 | ff 52 | c 49 | iOS | Andr |

|

| feature | ie6 | ie9 | ie10 | ie11 | ff 52 | c 49 | iOS | Andr |

|

||||||

@@ -223,14 +370,18 @@ copyparty can invoke external programs to collect additional metadata for files

|

|||||||

* `*2` using a wasm decoder which can sometimes get stuck and consumes a bit more power

|

* `*2` using a wasm decoder which can sometimes get stuck and consumes a bit more power

|

||||||

|

|

||||||

quick summary of more eccentric web-browsers trying to view a directory index:

|

quick summary of more eccentric web-browsers trying to view a directory index:

|

||||||

* safari (14.0.3/macos) is chrome with janky wasm, so playing opus can deadlock the javascript engine
* safari (14.0.1/iOS) same as macos, except it recovers from the deadlocks if you poke it a bit
* links (2.21/macports) can browse, login, upload/mkdir/msg
* lynx (2.8.9/macports) can browse, login, upload/mkdir/msg
* w3m (0.5.3/macports) can browse, login, upload at 100kB/s, mkdir/msg
* netsurf (3.10/arch) is basically ie6 with much better css (javascript has almost no effect)
* netscape 4.0 and 4.5 can browse (text is yellow on white), upload with `?b=u`
* SerenityOS (22d13d8) hits a page fault, works with `?b=u`, file input not-impl, url params are multiplying

|

| browser | will it blend |
| ------- | ------------- |
| **safari** (14.0.3/macos) | is chrome with janky wasm, so playing opus can deadlock the javascript engine |
| **safari** (14.0.1/iOS) | same as macos, except it recovers from the deadlocks if you poke it a bit |
| **links** (2.21/macports) | can browse, login, upload/mkdir/msg |
| **lynx** (2.8.9/macports) | can browse, login, upload/mkdir/msg |
| **w3m** (0.5.3/macports) | can browse, login, upload at 100kB/s, mkdir/msg |
| **netsurf** (3.10/arch) | is basically ie6 with much better css (javascript has almost no effect) |
| **ie4** and **netscape** 4.0 | can browse (text is yellow on white), upload with `?b=u` |
| **SerenityOS** (22d13d8) | hits a page fault, works with `?b=u`, file input not-impl, url params are multiplying |

|

||||||

|

|

||||||

|

|

||||||

# client examples

|

# client examples

|

||||||

|

|

||||||

@@ -250,36 +401,72 @@ quick summary of more eccentric web-browsers trying to view a directory index:

|

|||||||

* cross-platform python client available in [./bin/](bin/)

|

* cross-platform python client available in [./bin/](bin/)

|

||||||

* [rclone](https://rclone.org/) as client can give ~5x performance, see [./docs/rclone.md](docs/rclone.md)

|

* [rclone](https://rclone.org/) as client can give ~5x performance, see [./docs/rclone.md](docs/rclone.md)

|

||||||

|

|

||||||

|

* sharex (screenshot utility): see [./contrib/sharex.sxcu](contrib/#sharexsxcu)

|

||||||

|

|

||||||

copyparty returns a truncated sha512sum of your PUT/POST as base64; you can generate the same checksum locally to verify uploads:

|

copyparty returns a truncated sha512sum of your PUT/POST as base64; you can generate the same checksum locally to verify uploads:

|

||||||

|

|

||||||

b512(){ printf "$((sha512sum||shasum -a512)|sed -E 's/ .*//;s/(..)/\\x\1/g')"|base64|head -c43;}

|

b512(){ printf "$((sha512sum||shasum -a512)|sed -E 's/ .*//;s/(..)/\\x\1/g')"|base64|head -c43;}

|

||||||

b512 <movie.mkv

|

b512 <movie.mkv
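
if you don't have a posix shell handy, the same checksum can be computed in python. this is a sketch that mirrors the shell function above (sha512 digest, standard base64, first 43 characters); the streaming `bufsize` parameter is just an illustrative choice

```python
import base64
import hashlib
import io


def b512(stream, bufsize=1024 * 1024):
    """Truncated base64 sha512 of a binary stream, like the shell b512()."""
    h = hashlib.sha512()
    while True:
        buf = stream.read(bufsize)  # read in pieces so big files fit in RAM
        if not buf:
            break
        h.update(buf)
    # standard base64 of the raw digest, cut to the first 43 characters
    return base64.b64encode(h.digest()).decode("ascii")[:43]


# small in-memory demo; for a real file use open(path, "rb")
print(b512(io.BytesIO(b"hello world")))
```

for a real upload you would call it as `b512(open("movie.mkv", "rb"))` and compare the result with what the server returned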

|

||||||

|

|

||||||

|

|

||||||

|

# up2k

|

||||||

|

|

||||||

|

quick outline of the up2k protocol, see [uploading](#uploading) for the web-client

|

||||||

|

* the up2k client splits a file into an "optimal" number of chunks

|

||||||

|

* 1 MiB each, unless that becomes more than 256 chunks

|

||||||

|

* tries 1.5M, 2M, 3, 4, 6, ... until <= 256 chunks or size >= 32M

|

||||||

|

* client posts the list of hashes, filename, size, last-modified

|

||||||

|

* server creates the `wark`, an identifier for this upload

|

||||||

|

* `sha512( salt + filesize + chunk_hashes )`

|

||||||

|

* and a sparse file is created for the chunks to drop into

|

||||||

|

* client uploads each chunk

|

||||||

|

* header entries for the chunk-hash and wark

|

||||||

|

* server writes chunks into place based on the hash

|

||||||

|

* client does another handshake with the hashlist; server replies with OK or a list of chunks to reupload
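
the chunk-size rule and the `wark` from the outline above can be sketched in python. treat it as a rough illustration under stated assumptions, not as the project's actual code: the function names are hypothetical, and since the outline only gives `sha512( salt + filesize + chunk_hashes )` without saying how the fields are serialized, the newline-joined payload and the base64 output of `wark_sketch` are assumptions

```python
import base64
import hashlib

MiB = 1024 * 1024


def up2k_chunksize(filesize):
    """Start at 1 MiB and grow (1.5, 2, 3, 4, 6, ... MiB) until the file
    fits in at most 256 chunks or the chunk size reaches 32 MiB."""
    chunksize = MiB
    stepsize = MiB // 2
    while True:
        for _ in range(2):
            nchunks = (filesize + chunksize - 1) // chunksize  # ceil division
            if nchunks <= 256 or chunksize >= 32 * MiB:
                return chunksize
            chunksize += stepsize
        stepsize *= 2  # two steps at each increment, then double it


def wark_sketch(salt, filesize, chunk_hashes):
    """sha512 over salt + filesize + chunk hashes (serialization assumed)."""
    payload = "\n".join([salt, str(filesize)] + list(chunk_hashes))
    digest = hashlib.sha512(payload.encode("utf-8")).digest()
    return base64.urlsafe_b64encode(digest).decode("ascii")


for size in (100 * MiB, 300 * MiB, 1000 * MiB):
    print(size // MiB, "MiB file ->", up2k_chunksize(size) / MiB, "MiB chunks")
```

because the salt is part of the hash, two servers with different salts would produce different identifiers for the same file in this sketch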

|

||||||

|

|

||||||

|

|

||||||

# dependencies

|

# dependencies

|

||||||

|

|

||||||

* `jinja2` (is built into the SFX)

|

* `jinja2` (is built into the SFX)

|

||||||

|

|

||||||

**optional,** enables music tags:

|

|

||||||

|

## optional dependencies

|

||||||

|

|

||||||

|

enable music tags:

|

||||||

* either `mutagen` (fast, pure-python, skips a few tags, makes copyparty GPL? idk)

|

* either `mutagen` (fast, pure-python, skips a few tags, makes copyparty GPL? idk)

|

||||||

* or `FFprobe` (20x slower, more accurate, possibly dangerous depending on your distro and users)

|

* or `FFprobe` (20x slower, more accurate, possibly dangerous depending on your distro and users)

|

||||||

|

|

||||||

**optional,** will eventually enable thumbnails:

|

enable image thumbnails:

|

||||||

* `Pillow` (requires py2.7 or py3.5+)

|

* `Pillow` (requires py2.7 or py3.5+)

|

||||||

|

|

||||||

|

enable video thumbnails:

|

||||||

|

* `ffmpeg` and `ffprobe` somewhere in `$PATH`

|

||||||

|

|

||||||

|

enable reading HEIF pictures:

|

||||||

|

* `pyheif-pillow-opener` (requires Linux or a C compiler)

|

||||||

|

|

||||||

|

enable reading AVIF pictures:

|

||||||

|

* `pillow-avif-plugin`

|

||||||

|

|

||||||

|

|

||||||

|

## install recommended deps

|

||||||

|

```
python -m pip install --user -U jinja2 mutagen Pillow
```

|

||||||

|

|

||||||

|

|

||||||

## optional gpl stuff

|

## optional gpl stuff

|

||||||

|

|

||||||

some bundled tools have copyleft dependencies, see [./bin/#mtag](bin/#mtag)

|

some bundled tools have copyleft dependencies, see [./bin/#mtag](bin/#mtag)

|

||||||

|

|

||||||

these are standalone and will never be imported / evaluated by copyparty

|

these are standalone programs and will never be imported / evaluated by copyparty

|

||||||

|

|

||||||

|

|

||||||

# sfx

|

# sfx

|

||||||

|

|

||||||

currently there are two self-contained binaries:

|

currently there are two self-contained "binaries":

|

||||||

* [copyparty-sfx.py](https://github.com/9001/copyparty/releases/latest/download/copyparty-sfx.py) -- pure python, works everywhere

|

* [copyparty-sfx.py](https://github.com/9001/copyparty/releases/latest/download/copyparty-sfx.py) -- pure python, works everywhere, **recommended**

|

||||||

* [copyparty-sfx.sh](https://github.com/9001/copyparty/releases/latest/download/copyparty-sfx.sh) -- smaller, but only for linux and macos

|

* [copyparty-sfx.sh](https://github.com/9001/copyparty/releases/latest/download/copyparty-sfx.sh) -- smaller, but only for linux and macos, kinda deprecated

|

||||||

|

|

||||||

launch either of them (**use sfx.py on systemd**) and it'll unpack and run copyparty, assuming you have python installed of course

|

launch either of them (**use sfx.py on systemd**) and it'll unpack and run copyparty, assuming you have python installed of course

|

||||||

|

|

||||||

@@ -339,21 +526,25 @@ in the `scripts` folder:

|

|||||||

|

|

||||||

roughly sorted by priority

|

roughly sorted by priority

|

||||||

|

|

||||||

* audio link with timestamp

|

|

||||||

* separate sqlite table per tag

|

|

||||||

* audio fingerprinting

|

|

||||||

* readme.md as epilogue

|

* readme.md as epilogue

|

||||||

|

* single sha512 across all up2k chunks? maybe

|

||||||

* reduce up2k roundtrips

|

* reduce up2k roundtrips

|

||||||

* start from a chunk index and just go

|

* start from a chunk index and just go

|

||||||

* terminate client on bad data

|

* terminate client on bad data

|

||||||

* `os.copy_file_range` for up2k cloning

|

|

||||||

* support pillow-simd

|

|

||||||

* figure out the deal with pixel3a not being connectable as hotspot

|

|

||||||

* pixel3a having unpredictable 3sec latency in general :||||

|

|

||||||

|

|

||||||

discarded ideas

|

discarded ideas

|

||||||

|

|

||||||

|

* separate sqlite table per tag

|

||||||

|

* performance fixed by skipping some indexes (`+mt.k`)

|

||||||

|

* audio fingerprinting

|

||||||

|

* only makes sense if there can be a wasm client and that doesn't exist yet (except for olaf which is agpl hence counts as not existing)

|

||||||

|

* `os.copy_file_range` for up2k cloning

|

||||||

|

* almost never hit this path anyways

|

||||||

* up2k partials ui

|

* up2k partials ui

|

||||||

|

* feels like there isn't much point

|

||||||

* cache sha512 chunks on client

|

* cache sha512 chunks on client

|

||||||

|

* too dangerous

|

||||||

* comment field

|

* comment field

|

||||||

|

* nah

|

||||||

* look into android thumbnail cache file format

|

* look into android thumbnail cache file format

|

||||||

|

* absolutely not

|

||||||

|

|||||||

@@ -45,3 +45,18 @@ you could replace winfsp with [dokan](https://github.com/dokan-dev/dokany/releas

|

|||||||

# [`mtag/`](mtag/)

|

# [`mtag/`](mtag/)

|

||||||

* standalone programs which perform misc. file analysis

|

* standalone programs which perform misc. file analysis

|

||||||

* copyparty can Popen programs like these during file indexing to collect additional metadata

|

* copyparty can Popen programs like these during file indexing to collect additional metadata

|

||||||

|

|

||||||

|

|

||||||

|

# [`dbtool.py`](dbtool.py)

|

||||||

|

upgrade utility which can show db info and help transfer data between databases, for example when a new version of copyparty recommends wiping the DB and reindexing because it now collects additional metadata during analysis, but you have some really expensive `-mtp` parsers and want to copy over the tags from the old db

|

||||||

|

|

||||||

|

for that example (upgrading to v0.11.0), first move the old db aside, launch copyparty, let it rebuild the db until the point where it starts running mtp (colored messages as it adds the mtp tags), then CTRL-C and patch in the old mtp tags from the old db instead

|

||||||

|

|

||||||

|

so assuming you have `-mtp` parsers to provide the tags `key` and `.bpm`:

|

||||||

|

|

||||||

|

```

|

||||||

|

~/bin/dbtool.py -ls up2k.db

|

||||||

|

~/bin/dbtool.py -src up2k.db.v0.10.22 up2k.db -cmp

|

||||||

|

~/bin/dbtool.py -src up2k.db.v0.10.22 up2k.db -rm-mtp-flag -copy key

|

||||||

|

~/bin/dbtool.py -src up2k.db.v0.10.22 up2k.db -rm-mtp-flag -copy .bpm -vac

|

||||||

|

```

|

||||||

|

|||||||

198

bin/dbtool.py

Executable file

@@ -0,0 +1,198 @@

|

|||||||

|

#!/usr/bin/env python3

|

||||||

|

|

||||||

|

import os

|

||||||

|

import sys

|

||||||

|

import sqlite3

|

||||||

|

import argparse

|

||||||

|

|

||||||

|

DB_VER = 3

|

||||||

|

|

||||||

|

|

||||||

|

def die(msg):

|

||||||

|

print("\033[31m\n" + msg + "\n\033[0m")

|

||||||

|

sys.exit(1)

|

||||||

|

|

||||||

|

|

||||||

|

def read_ver(db):

|

||||||

|

for tab in ["ki", "kv"]:

|

||||||

|

try:

|

||||||

|

c = db.execute(r"select v from {} where k = 'sver'".format(tab))

|

||||||

|

except:

|

||||||

|

continue

|

||||||

|

|

||||||

|

rows = c.fetchall()

|

||||||

|

if rows:

|

||||||

|

return int(rows[0][0])

|

||||||

|

|

||||||

|

return "corrupt"

|

||||||

|

|

||||||

|

|

||||||

|

def ls(db):

|

||||||

|

nfiles = next(db.execute("select count(w) from up"))[0]

|

||||||

|

ntags = next(db.execute("select count(w) from mt"))[0]

|

||||||

|

print(f"{nfiles} files")

|

||||||

|

print(f"{ntags} tags\n")

|

||||||

|

|

||||||

|

print("number of occurrences for each tag,")

|

||||||

|

print(" 'x' = file has no tags")

|

||||||

|

print(" 't:mtp' = the mtp flag (file not mtp processed yet)")

|

||||||

|

print()

|

||||||

|

for k, nk in db.execute("select k, count(k) from mt group by k order by k"):

|

||||||

|

print(f"{nk:9} {k}")

|

||||||

|

|

||||||

|

|

||||||

|

def compare(n1, d1, n2, d2, verbose):

|

||||||

|

nt = next(d1.execute("select count(w) from up"))[0]

|

||||||

|

n = 0

|

||||||

|

miss = 0

|

||||||

|

for w, rd, fn in d1.execute("select w, rd, fn from up"):

|

||||||

|

n += 1

|

||||||

|

if n % 25_000 == 0:

|

||||||

|

m = f"\033[36mchecked {n:,} of {nt:,} files in {n1} against {n2}\033[0m"

|

||||||

|

print(m)

|

||||||

|

|

||||||

|

q = "select w from up where substr(w,1,16) = ?"

|

||||||

|

hit = d2.execute(q, (w[:16],)).fetchone()

|

||||||

|

if not hit:

|

||||||

|

miss += 1

|

||||||

|

if verbose:

|

||||||

|

print(f"file in {n1} missing in {n2}: [{w}] {rd}/{fn}")

|

||||||

|

|

||||||

|

print(f" {miss} files in {n1} missing in {n2}\n")

|

||||||

|

|

||||||

|

nt = next(d1.execute("select count(w) from mt"))[0]

|

||||||

|

n = 0

|

||||||

|

miss = {}

|

||||||

|

nmiss = 0

|

||||||

|

for w, k, v in d1.execute("select * from mt"):

|

||||||

|

n += 1

|

||||||

|

if n % 100_000 == 0:

|

||||||

|

m = f"\033[36mchecked {n:,} of {nt:,} tags in {n1} against {n2}, so far {nmiss} missing tags\033[0m"

|

||||||

|

print(m)

|

||||||

|

|

||||||

|

v2 = d2.execute("select v from mt where w = ? and +k = ?", (w, k)).fetchone()

|

||||||

|

if v2:

|

||||||

|

v2 = v2[0]

|

||||||

|

|

||||||

|

# if v != v2 and v2 and k in [".bpm", "key"] and n2 == "src":

|

||||||

|

# print(f"{w} [{rd}/{fn}] {k} = [{v}] / [{v2}]")

|

||||||

|

|

||||||

|

if v2 is not None:

|

||||||

|

if k.startswith("."):

|

||||||

|

try:

|

||||||

|

diff = abs(float(v) - float(v2))

|

||||||

|

if diff > float(v) / 0.9:

|

||||||

|

v2 = None

|

||||||

|

else:

|

||||||

|

v2 = v

|

||||||

|

except:

|

||||||

|

pass

|

||||||

|

|

||||||

|

if v != v2:

|

||||||

|

v2 = None

|

||||||

|

|

||||||

|

if v2 is None:

|

||||||

|

nmiss += 1

|

||||||

|

try:

|

||||||

|

miss[k] += 1

|

||||||

|

except:

|

||||||

|

miss[k] = 1

|

||||||

|

|

||||||

|

if verbose:

|

||||||

|

q = "select rd, fn from up where substr(w,1,16) = ?"

|

||||||

|

rd, fn = d1.execute(q, (w,)).fetchone()

|

||||||

|

print(f"missing in {n2}: [{w}] [{rd}/{fn}] {k} = {v}")

|

||||||

|

|

||||||

|

for k, v in sorted(miss.items()):

|

||||||

|

if v:

|

||||||

|

print(f"{n1} has {v:6} more {k:<6} tags than {n2}")

|

||||||

|

|

||||||

|

print(f"in total, {nmiss} missing tags in {n2}\n")

|

||||||

|

|

||||||

|

|

||||||

|

def copy_mtp(d1, d2, tag, rm):

|

||||||

|

nt = next(d1.execute("select count(w) from mt where k = ?", (tag,)))[0]

|

||||||

|

n = 0

|

||||||

|

ndone = 0

|

||||||

|

for w, k, v in d1.execute("select * from mt where k = ?", (tag,)):

|

||||||

|

n += 1

|

||||||

|

if n % 25_000 == 0:

|

||||||

|

m = f"\033[36m{n:,} of {nt:,} tags checked, so far {ndone} copied\033[0m"

|

||||||

|

print(m)

|

||||||

|

|

||||||

|

hit = d2.execute("select v from mt where w = ? and +k = ?", (w, k)).fetchone()

|

||||||

|

if hit:

|

||||||

|

hit = hit[0]

|

||||||

|

|

||||||

|

if hit != v:

|

||||||

|

ndone += 1

|

||||||

|

if hit is not None:

|

||||||

|

d2.execute("delete from mt where w = ? and +k = ?", (w, k))

|

||||||

|

|

||||||

|

d2.execute("insert into mt values (?,?,?)", (w, k, v))

|

||||||

|

if rm:

|

||||||

|

d2.execute("delete from mt where w = ? and +k = 't:mtp'", (w,))

|

||||||

|

|

||||||

|

d2.commit()

|

||||||

|

print(f"copied {ndone} {tag} tags over")

|

||||||

|

|

||||||

|

|

||||||

|

def main():

|

||||||

|

os.system("")

|

||||||

|

print()

|

||||||

|

|

||||||

|

ap = argparse.ArgumentParser()

|

||||||

|

ap.add_argument("db", help="database to work on")

|

||||||

|

ap.add_argument("-src", metavar="DB", type=str, help="database to copy from")

|

||||||

|

|

||||||

|

ap2 = ap.add_argument_group("informational / read-only stuff")

|

||||||

|

ap2.add_argument("-v", action="store_true", help="verbose")

|

||||||

|

ap2.add_argument("-ls", action="store_true", help="list summary for db")

|

||||||

|

ap2.add_argument("-cmp", action="store_true", help="compare databases")

|

||||||

|

|

||||||

|

ap2 = ap.add_argument_group("options which modify target db")

|

||||||

|

ap2.add_argument("-copy", metavar="TAG", type=str, help="mtp tag to copy over")

|

||||||

|

ap2.add_argument(

|

||||||

|

"-rm-mtp-flag",

|

||||||

|

action="store_true",

|

||||||

|

help="when an mtp tag is copied over, also mark that as done, so copyparty won't run mtp on it",

|

||||||

|

)

|

||||||

|

ap2.add_argument("-vac", action="store_true", help="optimize DB")

|

||||||

|

|

||||||

|

ar = ap.parse_args()

|

||||||

|

|

||||||

|

for v in [ar.db, ar.src]:

|

||||||

|

if v and not os.path.exists(v):

|

||||||

|

die("database must exist")

|

||||||

|

|

||||||

|

db = sqlite3.connect(ar.db)

|

||||||

|

ds = sqlite3.connect(ar.src) if ar.src else None

|

||||||

|

|

||||||

|

for d, n in [[ds, "src"], [db, "dst"]]:

|

||||||

|

if not d:

|

||||||

|

continue

|

||||||

|

|

||||||

|

ver = read_ver(d)

|

||||||

|

if ver == "corrupt":

|

||||||

|

die("{} database appears to be corrupt, sorry".format(n))

|

||||||

|

|

||||||

|

if ver != DB_VER:

|

||||||

|

m = f"{n} db is version {ver}, this tool only supports version {DB_VER}, please upgrade it with copyparty first"

|

||||||

|

die(m)

|

||||||

|

|

||||||

|

if ar.ls:

|

||||||

|

ls(db)

|

||||||

|

|

||||||

|

if ar.cmp:

|

||||||

|

if not ds:

|

||||||

|

die("need src db to compare against")

|

||||||

|

|

||||||

|

compare("src", ds, "dst", db, ar.v)

|

||||||

|

compare("dst", db, "src", ds, ar.v)

|

||||||

|

|

||||||

|

if ar.copy:

|

||||||

|

copy_mtp(ds, db, ar.copy, ar.rm_mtp_flag)

|

||||||

|

|

||||||

|

|

||||||

|

if __name__ == "__main__":

|

||||||

|

main()

|

||||||
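The tag comparison inside `compare()` above can be read in isolation as the helper below. The function name `tags_match` is hypothetical; the logic is intended to mirror the diff as written, including its loose tolerance for numeric tags (keys starting with `.`), and is only a sketch for understanding the behavior.

```python
def tags_match(k, v, v2):
    """Return True if the value v2 in the other db counts as equal to v."""
    if v2 is None:
        # the tag does not exist in the other db
        return False

    if k.startswith("."):
        # numeric tags: accept differences up to float(v) / 0.9
        try:
            diff = abs(float(v) - float(v2))
            return diff <= float(v) / 0.9
        except (TypeError, ValueError):
            # not numeric after all; fall back to exact comparison
            pass

    return v == v2
```

Non-numeric tags such as `key` must match exactly, while a `.bpm` of `120` against `120.4` is treated as the same value.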

96

bin/mtag/exe.py

Normal file

@@ -0,0 +1,96 @@

|

|||||||

|

#!/usr/bin/env python

|

||||||

|

|

||||||

|

import sys

|

||||||

|

import time

|

||||||

|

import json

|

||||||

|

import pefile

|

||||||

|

|

||||||

|

"""

|

||||||

|

retrieve exe info,

|

||||||

|

example for multivalue providers

|

||||||

|

"""

|

||||||

|

|

||||||

|

|

||||||

|

def unk(v):

|

||||||

|

return "unk({:04x})".format(v)

|

||||||

|

|

||||||

|

|

||||||

|

class PE2(pefile.PE):

|

||||||

|

def __init__(self, *a, **ka):

|

||||||

|

for k in [

|

||||||

|

# -- parse_data_directories:

|

||||||

|

"parse_import_directory",

|

||||||

|

"parse_export_directory",

|

||||||

|

# "parse_resources_directory",

|

||||||

|

"parse_debug_directory",

|

||||||

|

"parse_relocations_directory",

|

||||||

|

"parse_directory_tls",

|

||||||

|

"parse_directory_load_config",

|

||||||

|

"parse_delay_import_directory",

|

||||||

|

"parse_directory_bound_imports",

|

||||||

|

# -- full_load:

|

||||||

|

"parse_rich_header",

|

||||||

|

]:

|

||||||

|

setattr(self, k, self.noop)

|

||||||

|

|

||||||

|

super(PE2, self).__init__(*a, **ka)

|

||||||

|

|

||||||

|

def noop(*a, **ka):

|

||||||

|

pass

|

||||||

|

|

||||||

|

|

||||||

|

try:

|

||||||

|

pe = PE2(sys.argv[1], fast_load=False)

|

||||||

|

except:

|

||||||

|

sys.exit(0)

|

||||||

|

|

||||||

|

arch = pe.FILE_HEADER.Machine

|

||||||

|

if arch == 0x14C:

|

||||||

|

arch = "x86"

|

||||||

|

elif arch == 0x8664:

|

||||||

|

arch = "x64"

|

||||||

|

else:

|

||||||

|

arch = unk(arch)

|

||||||

|

|

||||||

|

try:

|

||||||

|

buildtime = time.gmtime(pe.FILE_HEADER.TimeDateStamp)

|

||||||

|

buildtime = time.strftime("%Y-%m-%d_%H:%M:%S", buildtime)

|

||||||

|

except:

|

||||||

|

buildtime = "invalid"

|

||||||

|

|

||||||

|

ui = pe.OPTIONAL_HEADER.Subsystem

|

||||||

|

if ui == 2:

|

||||||

|

ui = "GUI"

|

||||||

|

elif ui == 3:

|

||||||

|

ui = "cmdline"

|

||||||

|

else:

|

||||||

|

ui = unk(ui)

|

||||||

|

|

||||||

|

extra = {}

|

||||||

|

if hasattr(pe, "FileInfo"):

|

||||||

|

for v1 in pe.FileInfo:

|

||||||

|

for v2 in v1:

|

||||||

|

if v2.name != "StringFileInfo":

|

||||||

|

continue

|

||||||

|

|

||||||

|

for v3 in v2.StringTable:

|

||||||

|

for k, v in v3.entries.items():

|

||||||

|

v = v.decode("utf-8", "replace").strip()

|

||||||

|

if not v:

|

||||||

|

continue

|

||||||

|

|

||||||

|

if k in [b"FileVersion", b"ProductVersion"]:

|

||||||

|

extra["ver"] = v

|

||||||

|

|

||||||

|

if k in [b"OriginalFilename", b"InternalName"]:

|

||||||

|

extra["orig"] = v

|

||||||

|

|

||||||

|

r = {

|

||||||

|

"arch": arch,

|

||||||

|

"built": buildtime,

|

||||||

|

"ui": ui,

|

||||||

|

"cksum": "{:08x}".format(pe.OPTIONAL_HEADER.CheckSum),

|

||||||

|

}

|

||||||

|

r.update(extra)

|

||||||

|

|

||||||

|

print(json.dumps(r, indent=4))

|

||||||

9

bin/mtag/file-ext.py

Normal file

@@ -0,0 +1,9 @@

|

|||||||

|

#!/usr/bin/env python

|

||||||

|

|

||||||

|

import sys

|

||||||

|

|

||||||

|

"""

|

||||||

|

example that just prints the file extension

|

||||||

|

"""

|

||||||

|

|

||||||

|

print(sys.argv[1].split(".")[-1])

|

||||||
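The two example providers above differ in output shape: `file-ext.py` prints a single value, while `exe.py` prints a JSON object with several tags. A caller could handle both roughly like this. It is a sketch under assumptions, not copyparty's actual indexing code; `run_provider`, its `tag` parameter and the timeout are hypothetical.

```python
import json
import subprocess
import sys


def run_provider(cmd, path, tag, timeout=30):
    """Run a tag provider on path and return a dict of tag names to values."""
    proc = subprocess.run(
        list(cmd) + [path],
        stdout=subprocess.PIPE,
        stderr=subprocess.DEVNULL,
        timeout=timeout,
    )
    out = proc.stdout.decode("utf-8", "replace").strip()
    if not out:
        # provider had nothing to say about this file
        return {}

    try:
        parsed = json.loads(out)
    except ValueError:
        # plain text output; treat it as one value for the given tag
        return {tag: out}

    if isinstance(parsed, dict):
        # multivalue provider; every key becomes its own tag
        return parsed

    return {tag: out}
```

For example, `run_provider([sys.executable, "bin/mtag/file-ext.py"], "song.mp3", "ext")` would be expected to return a one-entry dict for the `ext` tag.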

@@ -9,6 +9,16 @@

|

|||||||

* assumes the webserver and copyparty are running on the same server/IP

|

* assumes the webserver and copyparty are running on the same server/IP

|

||||||

* modify `10.13.1.1` as necessary if you wish to support browsers without javascript

|

* modify `10.13.1.1` as necessary if you wish to support browsers without javascript

|

||||||

|

|

||||||

|

### [`sharex.sxcu`](sharex.sxcu)

|

||||||

|

* sharex config file to upload screenshots and grab the URL

|

||||||

|

* `RequestURL`: full URL to the target folder

|

||||||

|

* `pw`: password (remove the `pw` line if anon-write)

|

||||||

|

|

||||||

|

however if your copyparty is behind a reverse-proxy, you may want to use [`sharex-html.sxcu`](sharex-html.sxcu) instead:

|

||||||

|

* `RequestURL`: full URL to the target folder

|

||||||

|

* `URL`: full URL to the root folder (with trailing slash) followed by `$regex:1|1$`

|

||||||

|

* `pw`: password (remove `Parameters` if anon-write)

|

||||||

|

|

||||||

### [`explorer-nothumbs-nofoldertypes.reg`](explorer-nothumbs-nofoldertypes.reg)

|

### [`explorer-nothumbs-nofoldertypes.reg`](explorer-nothumbs-nofoldertypes.reg)

|

||||||

* disables thumbnails and folder-type detection in windows explorer

|

* disables thumbnails and folder-type detection in windows explorer

|

||||||

* makes it way faster (especially for slow/networked locations (such as copyparty-fuse))

|

* makes it way faster (especially for slow/networked locations (such as copyparty-fuse))

|

||||||

|

|||||||

19

contrib/sharex-html.sxcu

Normal file

@@ -0,0 +1,19 @@

|

|||||||

|

{

|

||||||

|

"Version": "13.5.0",

|

||||||

|

"Name": "copyparty-html",

|

||||||

|

"DestinationType": "ImageUploader",

|

||||||

|

"RequestMethod": "POST",

|

||||||

|

"RequestURL": "http://127.0.0.1:3923/sharex",

|

||||||

|

"Parameters": {

|

||||||

|

"pw": "wark"

|

||||||

|

},

|

||||||

|

"Body": "MultipartFormData",

|

||||||

|

"Arguments": {

|

||||||

|

"act": "bput"

|

||||||

|

},

|

||||||

|

"FileFormName": "f",

|

||||||

|

"RegexList": [

|

||||||

|

"bytes // <a href=\"/([^\"]+)\""

|

||||||

|

],

|

||||||

|

"URL": "http://127.0.0.1:3923/$regex:1|1$"

|

||||||

|

}

|

||||||
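The `RegexList` / `URL` pair in `sharex-html.sxcu` picks the uploaded file's path out of the HTML reply and appends it to the root URL. The same extraction in Python might look like this; the helper name and the sample reply used with it are hypothetical, since the actual HTML is not shown in this diff.

```python
import re

# same pattern as the RegexList entry, with the JSON escaping removed
LINK_RE = re.compile(r'bytes // <a href="/([^"]+)"')


def uploaded_url(root, html):
    """Return root + first capture group, or None if the reply has no link."""
    m = LINK_RE.search(html)
    if not m:
        return None
    # root is expected to end with a slash, as the README notes for `URL`
    return root + m.group(1)
```

This corresponds to `$regex:1|1$`, meaning capture group 1 of the first regex in the list.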

17

contrib/sharex.sxcu

Normal file

@@ -0,0 +1,17 @@

|

|||||||

|

{

|

||||||

|

"Version": "13.5.0",

|

||||||

|

"Name": "copyparty",

|

||||||

|

"DestinationType": "ImageUploader",

|

||||||

|

"RequestMethod": "POST",

|

||||||

|

"RequestURL": "http://127.0.0.1:3923/sharex",

|

||||||

|

"Parameters": {

|

||||||

|

"pw": "wark",

|

||||||

|

"j": null

|

||||||

|

},

|

||||||

|

"Body": "MultipartFormData",

|

||||||

|

"Arguments": {

|

||||||

|

"act": "bput"

|

||||||

|

},

|

||||||

|

"FileFormName": "f",

|

||||||

|

"URL": "$json:files[0].url$"

|

||||||

|

}

|

||||||
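For readers without ShareX, the request described by `sharex.sxcu` can be approximated with the standard library. This is a sketch under assumptions: the helper names are hypothetical, the `j` parameter (`null` in the config) is assumed to be sent as a bare query key, and the reply shape is inferred only from `$json:files[0].url$`. The code builds the request but does not send it.

```python
import json
import uuid
import urllib.parse
import urllib.request


def build_upload(request_url, pw, filename, data):
    """Build a multipart POST mirroring the sxcu fields (act=bput, file field f)."""
    boundary = uuid.uuid4().hex
    query = "j"
    if pw:
        # drop the pw parameter entirely for anon-write volumes
        query = "pw=" + urllib.parse.quote(pw) + "&j"

    parts = [
        "--" + boundary,
        'Content-Disposition: form-data; name="act"',
        "",
        "bput",
        "--" + boundary,
        'Content-Disposition: form-data; name="f"; filename="{}"'.format(filename),
        "Content-Type: application/octet-stream",
        "",
    ]
    body = "\r\n".join(parts).encode("utf-8") + b"\r\n" + data
    body += ("\r\n--" + boundary + "--\r\n").encode("utf-8")

    headers = {"Content-Type": "multipart/form-data; boundary=" + boundary}
    return urllib.request.Request(
        request_url + "?" + query, data=body, headers=headers, method="POST"
    )


def url_from_reply(reply_text):
    """Equivalent of $json:files[0].url$ applied to the JSON reply."""
    return json.loads(reply_text)["files"][0]["url"]
```

Passing the returned request to `urllib.request.urlopen` would perform the upload against a running server.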

@@ -2,6 +2,7 @@

|

|||||||

from __future__ import print_function, unicode_literals

|

from __future__ import print_function, unicode_literals

|

||||||

|

|

||||||

import platform

|

import platform

|

||||||

|

import time

|

||||||

import sys

|

import sys

|

||||||

import os

|

import os

|

||||||

|

|

||||||

@@ -16,12 +17,18 @@ if platform.system() == "Windows":

|

|||||||

VT100 = not WINDOWS or WINDOWS >= [10, 0, 14393]

|

VT100 = not WINDOWS or WINDOWS >= [10, 0, 14393]

|

||||||

# introduced in anniversary update

|

# introduced in anniversary update

|

||||||

|

|

||||||

|

ANYWIN = WINDOWS or sys.platform in ["msys"]

|

||||||

|

|

||||||

MACOS = platform.system() == "Darwin"

|

MACOS = platform.system() == "Darwin"

|

||||||

|

|

||||||

|

|

||||||

class EnvParams(object):

|

class EnvParams(object):

|

||||||

def __init__(self):

|

def __init__(self):

|

||||||

|

self.t0 = time.time()

|

||||||

self.mod = os.path.dirname(os.path.realpath(__file__))

|

self.mod = os.path.dirname(os.path.realpath(__file__))

|

||||||

|

if self.mod.endswith("__init__"):

|

||||||

|

self.mod = os.path.dirname(self.mod)

|

||||||

|

|

||||||

if sys.platform == "win32":

|

if sys.platform == "win32":

|

||||||

self.cfg = os.path.normpath(os.environ["APPDATA"] + "/copyparty")

|

self.cfg = os.path.normpath(os.environ["APPDATA"] + "/copyparty")

|

||||||

elif sys.platform == "darwin":

|

elif sys.platform == "darwin":

|

||||||

|

|||||||

@@ -225,6 +225,20 @@ def run_argparse(argv, formatter):

|

|||||||

--ciphers help = available ssl/tls ciphers,

|

--ciphers help = available ssl/tls ciphers,

|

||||||

--ssl-ver help = available ssl/tls versions,

|

--ssl-ver help = available ssl/tls versions,

|

||||||

default is what python considers safe, usually >= TLS1

|

default is what python considers safe, usually >= TLS1

|

||||||

|

|

||||||

|

values for --ls:

|

||||||

|

"USR" is a user to browse as; * is anonymous, ** is all users

|

||||||

|

"VOL" is a single volume to scan, default is * (all vols)

|

||||||

|

"FLAG" is flags;

|

||||||

|

"v" in addition to realpaths, print usernames and vpaths

|

||||||

|

"ln" only prints symlinks leaving the volume mountpoint

|

||||||

|

"p" exits 1 if any such symlinks are found

|

||||||

|

"r" resumes startup after the listing

|

||||||

|

examples:

|

||||||

|

--ls '**' # list all files which are possible to read

|

||||||

|

--ls '**,*,ln' # check for dangerous symlinks

|

||||||

|

--ls '**,*,ln,p,r' # check, then start normally if safe

|

||||||

|

\033[0m

|

||||||

"""

|

"""

|

||||||

),

|

),

|

||||||

)

|

)

|

||||||
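The `--ls` value described in the help text above is a comma-separated string: user, then volume, then flags. A minimal parser for that shape could look like this; `parse_ls` is a hypothetical name, and the actual parsing in copyparty is not part of this hunk.

```python
def parse_ls(value):
    """Split an --ls value into (usr, vol, flags)."""
    parts = value.split(",")
    usr = parts[0]
    # the help text says the volume defaults to * (all vols)
    vol = parts[1] if len(parts) > 1 else "*"
    flags = parts[2:]
    return usr, vol, flags
```

With the examples from the help text, `'**'` lists everything readable by any user, and `'**,*,ln,p,r'` yields the flags `ln`, `p` and `r`.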

@@ -237,19 +251,33 @@ def run_argparse(argv, formatter):

|

|||||||

ap.add_argument("-a", metavar="ACCT", type=str, action="append", help="add account")

|

ap.add_argument("-a", metavar="ACCT", type=str, action="append", help="add account")

|

||||||

ap.add_argument("-v", metavar="VOL", type=str, action="append", help="add volume")

|

ap.add_argument("-v", metavar="VOL", type=str, action="append", help="add volume")

|

||||||

ap.add_argument("-q", action="store_true", help="quiet")

|

ap.add_argument("-q", action="store_true", help="quiet")

|

||||||

ap.add_argument("--log-conn", action="store_true", help="print tcp-server msgs")

|

|

||||||

ap.add_argument("-ed", action="store_true", help="enable ?dots")

|

ap.add_argument("-ed", action="store_true", help="enable ?dots")

|

||||||

ap.add_argument("-emp", action="store_true", help="enable markdown plugins")

|

ap.add_argument("-emp", action="store_true", help="enable markdown plugins")

|

||||||

ap.add_argument("-mcr", metavar="SEC", type=int, default=60, help="md-editor mod-chk rate")

|

ap.add_argument("-mcr", metavar="SEC", type=int, default=60, help="md-editor mod-chk rate")

|

||||||

ap.add_argument("-nw", action="store_true", help="disable writes (benchmark)")

|

ap.add_argument("-nw", action="store_true", help="disable writes (benchmark)")

|

||||||

ap.add_argument("-nih", action="store_true", help="no info hostname")

|

ap.add_argument("-nih", action="store_true", help="no info hostname")

|

||||||

ap.add_argument("-nid", action="store_true", help="no info disk-usage")

|

ap.add_argument("-nid", action="store_true", help="no info disk-usage")

|

||||||

|

ap.add_argument("--dotpart", action="store_true", help="dotfile incomplete uploads")

|

||||||

ap.add_argument("--no-zip", action="store_true", help="disable download as zip/tar")

|

ap.add_argument("--no-zip", action="store_true", help="disable download as zip/tar")

|

||||||

ap.add_argument("--no-sendfile", action="store_true", help="disable sendfile (for debugging)")

|

ap.add_argument("--sparse", metavar="MiB", type=int, default=4, help="up2k min.size threshold (mswin-only)")

|

||||||

ap.add_argument("--no-scandir", action="store_true", help="disable scandir (for debugging)")

|

|

||||||

ap.add_argument("--urlform", metavar="MODE", type=str, default="print,get", help="how to handle url-forms")

|

ap.add_argument("--urlform", metavar="MODE", type=str, default="print,get", help="how to handle url-forms")

|

||||||

ap.add_argument("--salt", type=str, default="hunter2", help="up2k file-hash salt")

|

ap.add_argument("--salt", type=str, default="hunter2", help="up2k file-hash salt")

|

||||||

|

|

||||||

|

ap2 = ap.add_argument_group('admin panel options')

|

||||||

|

ap2.add_argument("--no-rescan", action="store_true", help="disable ?scan (volume reindexing)")

|

||||||

|

ap2.add_argument("--no-stack", action="store_true", help="disable ?stack (list all stacks)")

|

||||||

|

|

||||||

|

ap2 = ap.add_argument_group('thumbnail options')

|

||||||

|

ap2.add_argument("--no-thumb", action="store_true", help="disable all thumbnails")

|

||||||

|

ap2.add_argument("--no-vthumb", action="store_true", help="disable video thumbnails")

|

||||||

|

ap2.add_argument("--th-size", metavar="WxH", default="320x256", help="thumbnail res")

|

||||||

|

ap2.add_argument("--th-no-crop", action="store_true", help="dynamic height; show full image")

|

||||||

|

ap2.add_argument("--th-no-jpg", action="store_true", help="disable jpg output")

|

||||||

|

ap2.add_argument("--th-no-webp", action="store_true", help="disable webp output")

|

||||||

|

ap2.add_argument("--th-poke", metavar="SEC", type=int, default=300, help="activity labeling cooldown")

|

||||||

|

ap2.add_argument("--th-clean", metavar="SEC", type=int, default=43200, help="cleanup interval")

|