mirror of

https://github.com/9001/copyparty.git

synced 2025-11-05 06:13:20 +00:00

Compare commits

264 Commits

| Author | SHA1 | Date | |
|---|---|---|---|
| | f9be4c62b1 | | |
| | 027e8c18f1 | | |
| | 4a3bb35a95 | | |
| | 4bfb0d4494 | | |
| | 7e0ef03a1e | | |
| | f7dbd95a54 | | |
| | 515ee2290b | | |
| | b0c78910bb | | |
| | f4ca62b664 | | |
| | 8eb8043a3d | | |
| | 3e8541362a | | |
| | 789724e348 | | |
| | 5125b9532f | | |
| | ebc9de02b0 | | |
| | ec788fa491 | | |
| | 9b5e264574 | | |
| | 57c297274b | | |
| | e9bf092317 | | |
| | d173887324 | | |
| | 99820d854c | | |
| | 62df0a0eb2 | | |
| | 600e9ac947 | | |
| | 3ca41be2b4 | | |
| | 5c7debd900 | | |
| | 7fa5b23ce3 | | |
| | ff82738aaf | | |
| | bf5ee9d643 | | |
| | 72a8593ecd | | |
| | bc3bbe07d4 | | |
| | c7cb64bfef | | |
| | 629f537d06 | | |
| | 9e988041b8 | | |
| | f9a8b5c9d7 | | |
| | b9c3538253 | | |
| | 2bc0cdf017 | | |
| | 02a91f60d4 | | |
| | fae83da197 | | |
| | 0fe4aa6418 | | |
| | 21a51bf0dc | | |
| | bcb353cc30 | | |
| | 6af4508518 | | |
| | 6a559bc28a | | |
| | 0f5026cd20 | | |
| | a91b80a311 | | |
| | ec534701c8 | | |
| | af5169f67f | | |
| | 18676c5e65 | | |
| | e2df6fda7b | | |
| | e9ae9782fe | | |
| | 016dba4ca9 | | |
| | 39c7ef305f | | |
| | 849c1dc848 | | |
| | 61414014fe | | |
| | 578a915884 | | |
| | eacafb8a63 | | |
| | 4446760f74 | | |
| | 6da2a083f9 | | |
| | 8837c8f822 | | |
| | bac301ed66 | | |
| | 061db3906d | | |
| | fd7df5c952 | | |
| | a270019147 | | |
| | 55e0209901 | | |
| | 2b255fbbed | | |
| | 8a2345a0fb | | |
| | bfa9f535aa | | |
| | f757623ad8 | | |
| | 3c7465e268 | | |
| | 108665fc4f | | |
| | ed519c9138 | | |
| | 2dd2e2c57e | | |
| | 6c3a976222 | | |
| | 80cc26bd95 | | |
| | 970fb84fd8 | | |
| | 20cbcf6931 | | |
| | 8fcde2a579 | | |
| | b32d1f8ad3 | | |
| | 03513e0cb1 | | |
| | e041a2b197 | | |
| | d7d625be2a | | |
| | 4121266678 | | |
| | 22971a6be4 | | |
| | efbf8d7e0d | | |
| | 397396ea4a | | |
| | e59b077c21 | | |
| | 4bc39f3084 | | |
| | 21c3570786 | | |
| | 2f85c1fb18 | | |
| | 1e27a4c2df | | |
| | 456f575637 | | |
| | 51546c9e64 | | |
| | 83b4b70ef4 | | |
| | a5120d4f6f | | |
| | c95941e14f | | |
| | 0dd531149d | | |
| | 67da1b5219 | | |
| | 919bd16437 | | |
| | ecead109ab | | |
| | 765294c263 | | |
| | d6b5351207 | | |
| | a2009bcc6b | | |
| | 12709a8a0a | | |
| | c055baefd2 | | |
| | 56522599b5 | | |
| | 664f53b75d | | |
| | 87200d9f10 | | |
| | 5c3d0b6520 | | |
| | bd49979f4a | | |
| | 7e606cdd9f | | |
| | 8b4b7fa794 | | |
| | 05345ddf8b | | |
| | 66adb470ad | | |
| | e15c8fd146 | | |
| | 0f09b98a39 | | |
| | b4d6f4e24d | | |
| | 3217fa625b | | |
| | e719ff8a47 | | |
| | 9fcf528d45 | | |
| | 1ddbf5a158 | | |
| | 64bf4574b0 | | |
| | 5649d26077 | | |
| | 92f923effe | | |
| | 0d46d548b9 | | |
| | 062df3f0c3 | | |
| | 789fb53b8e | | |
| | 351db5a18f | | |
| | aabbd271c8 | | |
| | aae8e0171e | | |
| | 45827a2458 | | |
| | 726030296f | | |
| | 6659ab3881 | | |
| | c6a103609e | | |
| | c6b3f035e5 | | |
| | 2b0a7e378e | | |
| | b75ce909c8 | | |
| | 229c3f5dab | | |
| | ec73094506 | | |
| | c7650c9326 | | |
| | d94c6d4e72 | | |
| | 3cc8760733 | | |
| | a2f6973495 | | |
| | f8648fa651 | | |
| | 177aa038df | | |
| | e0a14ec881 | | |
| | 9366512f2f | | |
| | ea38b8041a | | |
| | f1870daf0d | | |
| | 9722441aad | | |
| | 9d014087f4 | | |
| | 83b4038b85 | | |
| | 1e0a448feb | | |
| | fb81de3b36 | | |
| | aa4f352301 | | |
| | f1a1c2ea45 | | |
| | 6249bd4163 | | |
| | 2579dc64ce | | |
| | 356512270a | | |
| | bed27f2b43 | | |
| | 54013d861b | | |
| | ec100210dc | | |
| | 3ab1acf32c | | |
| | 8c28266418 | | |
| | 7f8b8dcb92 | | |
| | 6dd39811d4 | | |
| | 35e2138e3e | | |
| | 239b4e9fe6 | | |
| | 2fcd0e7e72 | | |
| | 357347ce3a | | |
| | 36dc1107fb | | |
| | 0a3bbc4b4a | | |
| | 855b93dcf6 | | |
| | 89b79ba267 | | |
| | f5651b7d94 | | |
| | 1881019ede | | |
| | caba4e974c | | |
| | bc3c9613bc | | |
| | 15a3ee252e | | |
| | be055961ae | | |
| | e3031bdeec | | |
| | 75917b9f7c | | |
| | 910732e02c | | |
| | 264b497681 | | |
| | 372b949622 | | |
| | 789a602914 | | |
| | 093e955100 | | |
| | c32a89bebf | | |
| | c0bebe9f9f | | |
| | 57579b2fe5 | | |
| | 51d14a6b4d | | |
| | c50f1b64e5 | | |
| | 98aaab02c5 | | |
| | 0fc7973d8b | | |
| | 10362aa02e | | |
| | 0a8e759fe6 | | |
| | d70981cdd1 | | |
| | e08c03b886 | | |
| | 56086e8984 | | |
| | 1aa9033022 | | |
| | 076e103d53 | | |
| | 38c00ea8fc | | |
| | 415757af43 | | |
| | e72ed8c0ed | | |
| | 32f9c6b5bb | | |
| | 6251584ef6 | | |
| | f3e413bc28 | | |
| | 6f6cc8f3f8 | | |
| | 8b081e9e69 | | |
| | c8a510d10e | | |
| | 6f834f6679 | | |
| | cf2d6650ac | | |
| | cd52dea488 | | |
| | 6ea75df05d | | |
| | 4846e1e8d6 | | |
| | fc024f789d | | |
| | 473e773aea | | |
| | 48a2e1a353 | | |
| | 6da63fbd79 | | |
| | 5bec37fcee | | |
| | 3fd0ba0a31 | | |
| | 241a143366 | | |
| | a537064da7 | | |
| | f3dfd24c92 | | |
| | fa0a7f50bb | | |
| | 44a78a7e21 | | |
| | 6b75cbf747 | | |
| | e7b18ab9fe | | |
| | aa12830015 | | |
| | f156e00064 | | |
| | d53c212516 | | |
| | ca27f8587c | | |
| | 88ce008e16 | | |
| | 081d2cc5d7 | | |
| | 60ac68d000 | | |
| | fbe656957d | | |
| | 5534c78c17 | | |
| | a45a53fdce | | |
| | 972a56e738 | | |
| | 5e03b3ca38 | | |
| | 1078d933b4 | | |
| | d6bf300d80 | | |
| | a359d64d44 | | |
| | 22396e8c33 | | |
| | 5ded5a4516 | | |
| | 79c7639aaf | | |
| | 5bbf875385 | | |
| | 5e159432af | | |
| | 1d6ae409f6 | | |
| | 9d729d3d1a | | |
| | 4dd5d4e1b7 | | |
| | acd8149479 | | |
| | b97a1088fa | | |
| | b77bed3324 | | |
| | a2b7c85a1f | | |
| | b28533f850 | | |
| | bd8c7e538a | | |
| | 89e48cff24 | | |
| | ae90a7b7b6 | | |
| | 6fc1be04da | | |
| | 0061d29534 | | |
| | a891f34a93 | | |
| | d6a1e62a95 | | |
| | cda36ea8b4 | | |
| | 909a76434a | | |
| | 39348ef659 | | |

17

.vscode/launch.json

vendored


@@ -16,12 +16,9 @@

|

|||||||

"-e2ts",

|

"-e2ts",

|

||||||

"-mtp",

|

"-mtp",

|

||||||

".bpm=f,bin/mtag/audio-bpm.py",

|

".bpm=f,bin/mtag/audio-bpm.py",

|

||||||

"-a",

|

"-aed:wark",

|

||||||

"ed:wark",

|

"-vsrv::r:aed:cnodupe",

|

||||||

"-v",

|

"-vdist:dist:r"

|

||||||

"srv::r:aed:cnodupe",

|

|

||||||

"-v",

|

|

||||||

"dist:dist:r"

|

|

||||||

]

|

]

|

||||||

},

|

},

|

||||||

{

|

{

|

||||||

@@ -43,5 +40,13 @@

|

|||||||

"${file}"

|

"${file}"

|

||||||

]

|

]

|

||||||

},

|

},

|

||||||

|

{

|

||||||

|

"name": "Python: Current File",

|

||||||

|

"type": "python",

|

||||||

|

"request": "launch",

|

||||||

|

"program": "${file}",

|

||||||

|

"console": "integratedTerminal",

|

||||||

|

"justMyCode": false

|

||||||

|

},

|

||||||

]

|

]

|

||||||

}

|

}

|

||||||

18

.vscode/launch.py

vendored


@@ -3,14 +3,16 @@

|

|||||||

# launches 10x faster than mspython debugpy

|

# launches 10x faster than mspython debugpy

|

||||||

# and is stoppable with ^C

|

# and is stoppable with ^C

|

||||||

|

|

||||||

|

import re

|

||||||

import os

|

import os

|

||||||

import sys

|

import sys

|

||||||

|

|

||||||

|

print(sys.executable)

|

||||||

|

|

||||||

import shlex

|

import shlex

|

||||||

|

|

||||||

sys.path.insert(0, os.getcwd())

|

|

||||||

|

|

||||||

import jstyleson

|

import jstyleson

|

||||||

from copyparty.__main__ import main as copyparty

|

import subprocess as sp

|

||||||

|

|

||||||

|

|

||||||

with open(".vscode/launch.json", "r", encoding="utf-8") as f:

|

with open(".vscode/launch.json", "r", encoding="utf-8") as f:

|

||||||

tj = f.read()

|

tj = f.read()

|

||||||

@@ -25,6 +27,14 @@ except:

|

|||||||

pass

|

pass

|

||||||

|

|

||||||

argv = [os.path.expanduser(x) if x.startswith("~") else x for x in argv]

|

argv = [os.path.expanduser(x) if x.startswith("~") else x for x in argv]

|

||||||

|

|

||||||

|

if re.search(" -j ?[0-9]", " ".join(argv)):

|

||||||

|

argv = [sys.executable, "-m", "copyparty"] + argv

|

||||||

|

sp.check_call(argv)

|

||||||

|

else:

|

||||||

|

sys.path.insert(0, os.getcwd())

|

||||||

|

from copyparty.__main__ import main as copyparty

|

||||||

|

|

||||||

try:

|

try:

|

||||||

copyparty(["a"] + argv)

|

copyparty(["a"] + argv)

|

||||||

except SystemExit as ex:

|

except SystemExit as ex:

|

||||||

|

|||||||
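The new side of the `.vscode/launch.py` diff above picks between two ways of starting copyparty: as a separate `python -m copyparty` process when the arguments contain a `-j N` style flag, or in-process otherwise. The following is a minimal sketch of just that decision, assuming a hypothetical helper name `wants_subprocess` (not in the source); it reuses the regex from the diff and does not launch anything. The diff itself does not say why `-j` needs a separate process.

```python
import re


def wants_subprocess(argv):
    """Return True when the joined arguments contain a `-j N` style flag.

    Same pattern as in the diff. The pattern requires a space before
    `-j`, so a `-j` flag in the very first position is not matched.
    """
    return re.search(" -j ?[0-9]", " ".join(argv)) is not None


if __name__ == "__main__":
    for args in (["-e2ts", "-j4"], ["-e2ts", "-j", "4"], ["-e2ts", "-aed:wark"]):
        mode = "subprocess" if wants_subprocess(args) else "in-process"
        print(args, "->", mode)
```

Keeping the check in its own function makes the first-position edge case easy to see and to test separately from the launch itself.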

5

.vscode/tasks.json

vendored


@@ -9,7 +9,10 @@

|

|||||||

{

|

{

|

||||||

"label": "no_dbg",

|

"label": "no_dbg",

|

||||||

"type": "shell",

|

"type": "shell",

|

||||||

"command": "${config:python.pythonPath} .vscode/launch.py"

|

"command": "${config:python.pythonPath}",

|

||||||

|

"args": [

|

||||||

|

".vscode/launch.py"

|

||||||

|

]

|

||||||

}

|

}

|

||||||

]

|

]

|

||||||

}

|

}

|

||||||

266

README.md


@@ -20,8 +20,10 @@ turn your phone or raspi into a portable file server with resumable uploads/down

|

|||||||

|

|

||||||

* top

|

* top

|

||||||

* [quickstart](#quickstart)

|

* [quickstart](#quickstart)

|

||||||

|

* [on debian](#on-debian)

|

||||||

* [notes](#notes)

|

* [notes](#notes)

|

||||||

* [status](#status)

|

* [status](#status)

|

||||||

|

* [testimonials](#testimonials)

|

||||||

* [bugs](#bugs)

|

* [bugs](#bugs)

|

||||||

* [general bugs](#general-bugs)

|

* [general bugs](#general-bugs)

|

||||||

* [not my bugs](#not-my-bugs)

|

* [not my bugs](#not-my-bugs)

|

||||||

@@ -37,12 +39,14 @@ turn your phone or raspi into a portable file server with resumable uploads/down

|

|||||||

* [other tricks](#other-tricks)

|

* [other tricks](#other-tricks)

|

||||||

* [searching](#searching)

|

* [searching](#searching)

|

||||||

* [search configuration](#search-configuration)

|

* [search configuration](#search-configuration)

|

||||||

|

* [database location](#database-location)

|

||||||

* [metadata from audio files](#metadata-from-audio-files)

|

* [metadata from audio files](#metadata-from-audio-files)

|

||||||

* [file parser plugins](#file-parser-plugins)

|

* [file parser plugins](#file-parser-plugins)

|

||||||

* [complete examples](#complete-examples)

|

* [complete examples](#complete-examples)

|

||||||

* [browser support](#browser-support)

|

* [browser support](#browser-support)

|

||||||

* [client examples](#client-examples)

|

* [client examples](#client-examples)

|

||||||

* [up2k](#up2k)

|

* [up2k](#up2k)

|

||||||

|

* [performance](#performance)

|

||||||

* [dependencies](#dependencies)

|

* [dependencies](#dependencies)

|

||||||

* [optional dependencies](#optional-dependencies)

|

* [optional dependencies](#optional-dependencies)

|

||||||

* [install recommended deps](#install-recommended-deps)

|

* [install recommended deps](#install-recommended-deps)

|

||||||

@@ -50,9 +54,12 @@ turn your phone or raspi into a portable file server with resumable uploads/down

|

|||||||

* [sfx](#sfx)

|

* [sfx](#sfx)

|

||||||

* [sfx repack](#sfx-repack)

|

* [sfx repack](#sfx-repack)

|

||||||

* [install on android](#install-on-android)

|

* [install on android](#install-on-android)

|

||||||

|

* [building](#building)

|

||||||

* [dev env setup](#dev-env-setup)

|

* [dev env setup](#dev-env-setup)

|

||||||

* [how to release](#how-to-release)

|

* [just the sfx](#just-the-sfx)

|

||||||

|

* [complete release](#complete-release)

|

||||||

* [todo](#todo)

|

* [todo](#todo)

|

||||||

|

* [discarded ideas](#discarded-ideas)

|

||||||

|

|

||||||

|

|

||||||

## quickstart

|

## quickstart

|

||||||

@@ -61,19 +68,45 @@ download [copyparty-sfx.py](https://github.com/9001/copyparty/releases/latest/do

|

|||||||

|

|

||||||

running the sfx without arguments (for example doubleclicking it on Windows) will give everyone full access to the current folder; see `-h` for help if you want accounts and volumes etc

|

running the sfx without arguments (for example doubleclicking it on Windows) will give everyone full access to the current folder; see `-h` for help if you want accounts and volumes etc

|

||||||

|

|

||||||

|

some recommended options:

|

||||||

|

* `-e2dsa` enables general file indexing, see [search configuration](#search-configuration)

|

||||||

|

* `-e2ts` enables audio metadata indexing (needs either FFprobe or Mutagen), see [optional dependencies](#optional-dependencies)

|

||||||

|

* `-v /mnt/music:/music:r:afoo -a foo:bar` shares `/mnt/music` as `/music`, `r`eadable by anyone, with user `foo` as `a`dmin (read/write), password `bar`

|

||||||

|

* the syntax is `-v src:dst:perm:perm:...` so local-path, url-path, and one or more permissions to set

|

||||||

|

* replace `:r:afoo` with `:rfoo` to only make the folder readable by `foo` and nobody else

|

||||||

|

* in addition to `r`ead and `a`dmin, `w`rite makes a folder write-only, so cannot list/access files in it

|

||||||

|

* `--ls '**,*,ln,p,r'` to crash on startup if any of the volumes contain a symlink which points outside the volume, as that could give users unintended access

|

||||||

|

|

||||||
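The `-v src:dst:perm:perm:...` syntax described in the recommended options above can be illustrated with a small parser. This is a sketch only, not copyparty's actual argument handling: the function name `parse_volume` and the returned dict layout are hypothetical, it assumes paths contain no `:` (so no Windows drive letters), and it treats a leading `c` as a volume flag, as in the `cnodupe` and `chist=...` examples elsewhere in this diff.

```python
def parse_volume(spec):
    """Split a `src:dst:perm:perm:...` string into its parts (sketch)."""
    src, dst, *perms = spec.split(":")
    vol = {"src": src, "dst": dst, "read": [], "write": [], "admin": [], "flags": {}}
    names = {"r": "read", "w": "write", "a": "admin"}
    for perm in perms:
        if not perm:
            continue
        kind, rest = perm[0], perm[1:]
        if kind == "c":
            # volume flag, optionally with a value, e.g. `chist=/tmp/foo`
            key, _, value = rest.partition("=")
            vol["flags"][key] = value if value else True
        elif kind in names:
            # an empty username means "anyone"
            vol[names[kind]].append(rest or "*")
        else:
            raise ValueError("unknown permission: " + perm)
    return vol


if __name__ == "__main__":
    print(parse_volume("/mnt/music:/music:r:afoo"))
    print(parse_volume("srv::r:aed:cnodupe"))
```

With this layout, replacing `:r:afoo` with `:rfoo` moves `foo` from the admin list to the read list and removes the `*` (anyone) entry.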

you may also want these, especially on servers:

|

you may also want these, especially on servers:

|

||||||

* [contrib/systemd/copyparty.service](contrib/systemd/copyparty.service) to run copyparty as a systemd service

|

* [contrib/systemd/copyparty.service](contrib/systemd/copyparty.service) to run copyparty as a systemd service

|

||||||

* [contrib/nginx/copyparty.conf](contrib/nginx/copyparty.conf) to reverse-proxy behind nginx (for better https)

|

* [contrib/nginx/copyparty.conf](contrib/nginx/copyparty.conf) to reverse-proxy behind nginx (for better https)

|

||||||

|

|

||||||

|

|

||||||

|

### on debian

|

||||||

|

|

||||||

|

recommended steps to enable audio metadata and thumbnails (from images and videos):

|

||||||

|

|

||||||

|

* as root, run the following:

|

||||||

|

`apt install python3 python3-pip python3-dev ffmpeg`

|

||||||

|

|

||||||

|

* then, as the user which will be running copyparty (so hopefully not root), run this:

|

||||||

|

`python3 -m pip install --user -U Pillow pillow-avif-plugin`

|

||||||

|

|

||||||

|

(skipped `pyheif-pillow-opener` because apparently debian is too old to build it)

|

||||||

|

|

||||||

|

|

||||||

## notes

|

## notes

|

||||||

|

|

||||||

|

general:

|

||||||

|

* paper-printing is affected by dark/light-mode! use lightmode for color, darkmode for grayscale

|

||||||

|

* because no browsers currently implement the media-query to do this properly orz

|

||||||

|

|

||||||

|

browser-specific:

|

||||||

* iPhone/iPad: use Firefox to download files

|

* iPhone/iPad: use Firefox to download files

|

||||||

* Android-Chrome: increase "parallel uploads" for higher speed (android bug)

|

* Android-Chrome: increase "parallel uploads" for higher speed (android bug)

|

||||||

* Android-Firefox: takes a while to select files (their fix for ☝️)

|

* Android-Firefox: takes a while to select files (their fix for ☝️)

|

||||||

* Desktop-Firefox: ~~may use gigabytes of RAM if your files are massive~~ *seems to be OK now*

|

* Desktop-Firefox: ~~may use gigabytes of RAM if your files are massive~~ *seems to be OK now*

|

||||||

* paper-printing is affected by dark/light-mode! use lightmode for color, darkmode for grayscale

|

* Desktop-Firefox: may stop you from deleting folders you've uploaded until you visit `about:memory` and click `Minimize memory usage`

|

||||||

* because no browsers currently implement the media-query to do this properly orz

|

|

||||||

|

|

||||||

|

|

||||||

## status

|

## status

|

||||||

@@ -82,7 +115,7 @@ summary: all planned features work! now please enjoy the bloatening

|

|||||||

|

|

||||||

* backend stuff

|

* backend stuff

|

||||||

* ☑ sanic multipart parser

|

* ☑ sanic multipart parser

|

||||||

* ☑ load balancer (multiprocessing)

|

* ☑ multiprocessing (actual multithreading)

|

||||||

* ☑ volumes (mountpoints)

|

* ☑ volumes (mountpoints)

|

||||||

* ☑ accounts

|

* ☑ accounts

|

||||||

* upload

|

* upload

|

||||||

@@ -96,11 +129,12 @@ summary: all planned features work! now please enjoy the bloatening

|

|||||||

* ☑ FUSE client (read-only)

|

* ☑ FUSE client (read-only)

|

||||||

* browser

|

* browser

|

||||||

* ☑ tree-view

|

* ☑ tree-view

|

||||||

* ☑ media player

|

* ☑ audio player (with OS media controls)

|

||||||

* ☑ thumbnails

|

* ☑ thumbnails

|

||||||

* ☑ images using Pillow

|

* ☑ ...of images using Pillow

|

||||||

* ☑ videos using FFmpeg

|

* ☑ ...of videos using FFmpeg

|

||||||

* ☑ cache eviction (max-age; maybe max-size eventually)

|

* ☑ cache eviction (max-age; maybe max-size eventually)

|

||||||

|

* ☑ image gallery with webm player

|

||||||

* ☑ SPA (browse while uploading)

|

* ☑ SPA (browse while uploading)

|

||||||

* if you use the file-tree on the left only, not folders in the file list

|

* if you use the file-tree on the left only, not folders in the file list

|

||||||

* server indexing

|

* server indexing

|

||||||

@@ -112,24 +146,39 @@ summary: all planned features work! now please enjoy the bloatening

|

|||||||

* ☑ editor (sure why not)

|

* ☑ editor (sure why not)

|

||||||

|

|

||||||

|

|

||||||

|

## testimonials

|

||||||

|

|

||||||

|

small collection of user feedback

|

||||||

|

|

||||||

|

`good enough`, `surprisingly correct`, `certified good software`, `just works`, `why`

|

||||||

|

|

||||||

|

|

||||||

# bugs

|

# bugs

|

||||||

|

|

||||||

* Windows: python 3.7 and older cannot read tags with ffprobe, so use mutagen or upgrade

|

* Windows: python 3.7 and older cannot read tags with FFprobe, so use Mutagen or upgrade

|

||||||

* Windows: python 2.7 cannot index non-ascii filenames with `-e2d`

|

* Windows: python 2.7 cannot index non-ascii filenames with `-e2d`

|

||||||

* Windows: python 2.7 cannot handle filenames with mojibake

|

* Windows: python 2.7 cannot handle filenames with mojibake

|

||||||

|

* `--th-ff-jpg` may fix video thumbnails on some FFmpeg versions

|

||||||

|

|

||||||

## general bugs

|

## general bugs

|

||||||

|

|

||||||

* all volumes must exist / be available on startup; up2k (mtp especially) gets funky otherwise

|

* all volumes must exist / be available on startup; up2k (mtp especially) gets funky otherwise

|

||||||

* cannot mount something at `/d1/d2/d3` unless `d2` exists inside `d1`

|

* cannot mount something at `/d1/d2/d3` unless `d2` exists inside `d1`

|

||||||

* hiding the contents at url `/d1/d2/d3` using `-v :d1/d2/d3:cd2d` has the side-effect of creating databases (for files/tags) inside folders d1 and d2, and those databases take precedence over the main db at the top of the vfs - this means all files in d2 and below will be reindexed unless you already had a vfs entry at or below d2

|

* dupe files will not have metadata (audio tags etc) displayed in the file listing

|

||||||

|

* because they don't get `up` entries in the db (probably best fix) and `tx_browser` does not `lstat`

|

||||||

* probably more, pls let me know

|

* probably more, pls let me know

|

||||||

|

|

||||||

## not my bugs

|

## not my bugs

|

||||||

|

|

||||||

* Windows: msys2-python 3.8.6 occasionally throws "RuntimeError: release unlocked lock" when leaving a scoped mutex in up2k

|

* Windows: folders cannot be accessed if the name ends with `.`

|

||||||

|

* python or windows bug

|

||||||

|

|

||||||

|

* Windows: msys2-python 3.8.6 occasionally throws `RuntimeError: release unlocked lock` when leaving a scoped mutex in up2k

|

||||||

* this is an msys2 bug, the regular windows edition of python is fine

|

* this is an msys2 bug, the regular windows edition of python is fine

|

||||||

|

|

||||||

|

* VirtualBox: sqlite throws `Disk I/O Error` when running in a VM and the up2k database is in a vboxsf

|

||||||

|

* use `--hist` or the `hist` volflag (`-v [...]:chist=/tmp/foo`) to place the db inside the vm instead

|

||||||

|

|

||||||

|

|

||||||

# the browser

|

# the browser

|

||||||

|

|

||||||

@@ -143,38 +192,63 @@ summary: all planned features work! now please enjoy the bloatening

|

|||||||

* `[📂]` mkdir, create directories

|

* `[📂]` mkdir, create directories

|

||||||

* `[📝]` new-md, create a new markdown document

|

* `[📝]` new-md, create a new markdown document

|

||||||

* `[📟]` send-msg, either to server-log or into textfiles if `--urlform save`

|

* `[📟]` send-msg, either to server-log or into textfiles if `--urlform save`

|

||||||

* `[⚙️]` client configuration options

|

* `[🎺]` audio-player config options

|

||||||

|

* `[⚙️]` general client config options

|

||||||

|

|

||||||

|

|

||||||

## hotkeys

|

## hotkeys

|

||||||

|

|

||||||

the browser has the following hotkeys

|

the browser has the following hotkeys (assumes qwerty, ignores actual layout)

|

||||||

|

* `B` toggle breadcrumbs / directory tree

|

||||||

* `I/K` prev/next folder

|

* `I/K` prev/next folder

|

||||||

* `P` parent folder

|

* `M` parent folder (or unexpand current)

|

||||||

* `G` toggle list / grid view

|

* `G` toggle list / grid view

|

||||||

* `T` toggle thumbnails / icons

|

* `T` toggle thumbnails / icons

|

||||||

* when playing audio:

|

* when playing audio:

|

||||||

* `0..9` jump to 10%..90%

|

|

||||||

* `U/O` skip 10sec back/forward

|

|

||||||

* `J/L` prev/next song

|

* `J/L` prev/next song

|

||||||

* `J` also starts playing the folder

|

* `U/O` skip 10sec back/forward

|

||||||

|

* `0..9` jump to 0%..90%

|

||||||

|

* `P` play/pause (also starts playing the folder)

|

||||||

|

* when viewing images / playing videos:

|

||||||

|

* `J/L, Left/Right` prev/next file

|

||||||

|

* `Home/End` first/last file

|

||||||

|

* `Esc` close viewer

|

||||||

|

* videos:

|

||||||

|

* `U/O` skip 10sec back/forward

|

||||||

|

* `P/K/Space` play/pause

|

||||||

|

* `F` fullscreen

|

||||||

|

* `C` continue playing next video

|

||||||

|

* `R` loop

|

||||||

|

* `M` mute

|

||||||

|

* when tree-sidebar is open:

|

||||||

|

* `A/D` adjust tree width

|

||||||

* in the grid view:

|

* in the grid view:

|

||||||

* `S` toggle multiselect

|

* `S` toggle multiselect

|

||||||

* `A/D` zoom

|

* shift+`A/D` zoom

|

||||||

|

* in the markdown editor:

|

||||||

|

* `^s` save

|

||||||

|

* `^h` header

|

||||||

|

* `^k` autoformat table

|

||||||

|

* `^u` jump to next unicode character

|

||||||

|

* `^e` toggle editor / preview

|

||||||

|

* `^up, ^down` jump paragraphs

|

||||||

|

|

||||||

## tree-mode

|

## tree-mode

|

||||||

|

|

||||||

by default there's a breadcrumbs path; you can replace this with a tree-browser sidebar thing by clicking the 🌲

|

by default there's a breadcrumbs path; you can replace this with a tree-browser sidebar thing by clicking the `🌲` or pressing the `B` hotkey

|

||||||

|

|

||||||

click `[-]` and `[+]` to adjust the size, and the `[a]` toggles if the tree should widen dynamically as you go deeper or stay fixed-size

|

click `[-]` and `[+]` (or hotkeys `A`/`D`) to adjust the size, and the `[a]` toggles if the tree should widen dynamically as you go deeper or stay fixed-size

|

||||||

|

|

||||||

|

|

||||||

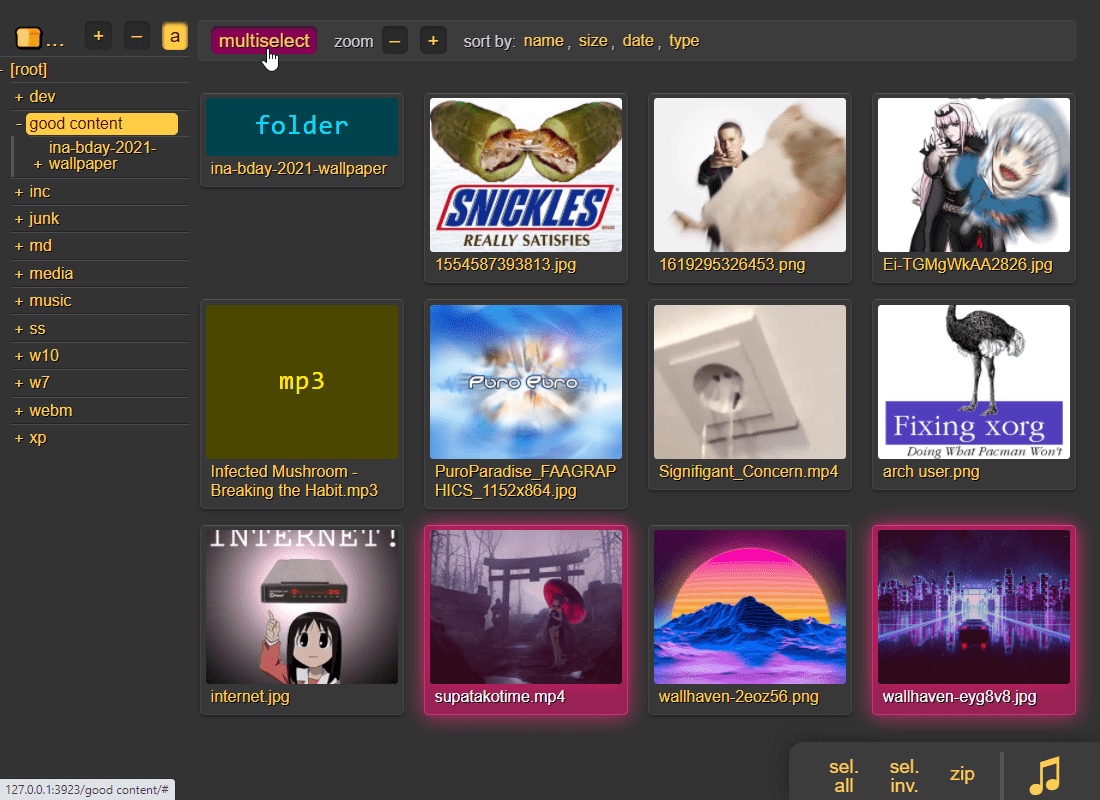

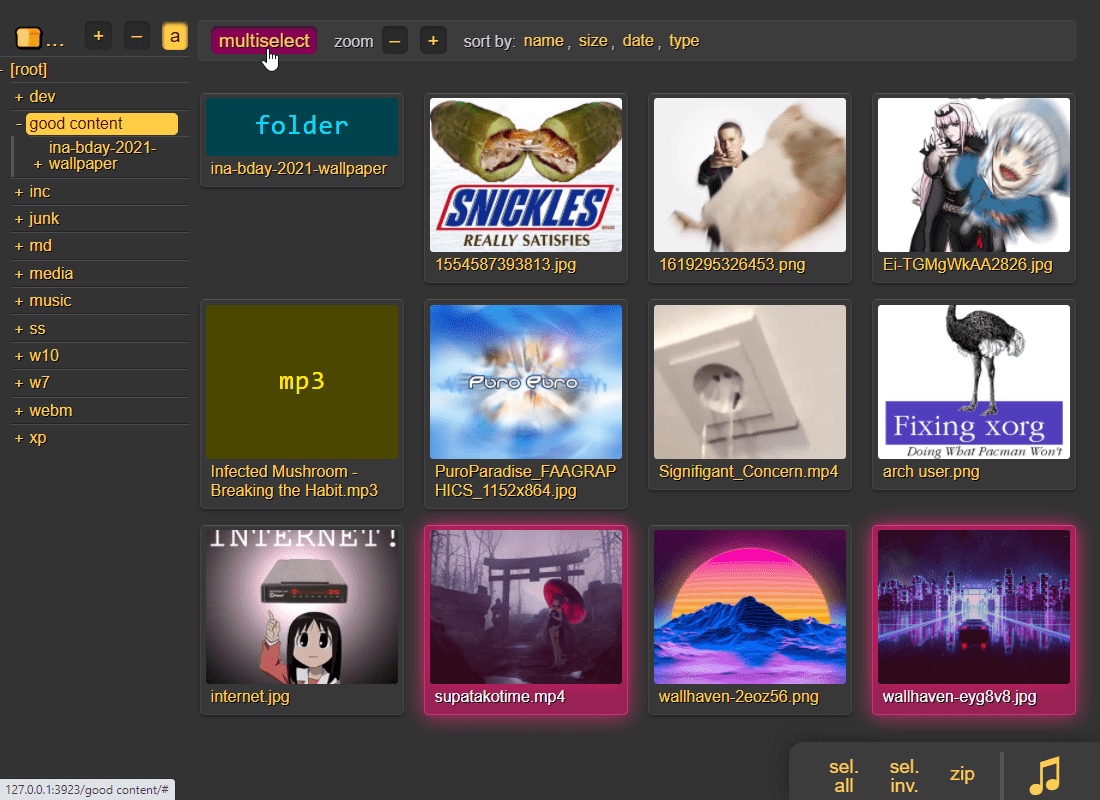

## thumbnails

|

## thumbnails

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

it does static images with Pillow and uses FFmpeg for video files, so you may want to `--no-thumb` or maybe just `--no-vthumb` depending on how destructive your users are

|

it does static images with Pillow and uses FFmpeg for video files, so you may want to `--no-thumb` or maybe just `--no-vthumb` depending on how dangerous your users are

|

||||||

|

|

||||||

|

images with the following names (see `--th-covers`) become the thumbnail of the folder they're in: `folder.png`, `folder.jpg`, `cover.png`, `cover.jpg`

|

||||||

|

|

||||||

|

in the grid/thumbnail view, if the audio player panel is open, songs will start playing when clicked

|

||||||

|

|

||||||

|

|

||||||

## zip downloads

|

## zip downloads

|

||||||

@@ -189,9 +263,10 @@ the `zip` link next to folders can produce various types of zip/tar files using

|

|||||||

| `zip_crc` | `?zip=crc` | cp437 with crc32 computed early for truly ancient software |

|

| `zip_crc` | `?zip=crc` | cp437 with crc32 computed early for truly ancient software |

|

||||||

|

|

||||||

* hidden files (dotfiles) are excluded unless `-ed`

|

* hidden files (dotfiles) are excluded unless `-ed`

|

||||||

* the up2k.db is always excluded

|

* `up2k.db` and `dir.txt` are always excluded

|

||||||

* `zip_crc` will take longer to download since the server has to read each file twice

|

* `zip_crc` will take longer to download since the server has to read each file twice

|

||||||

* please let me know if you find a program old enough to actually need this

|

* this is only to support MS-DOS PKZIP v2.04g (october 1993) and older

|

||||||

|

* how are you accessing copyparty actually

|

||||||

|

|

||||||

you can also zip a selection of files or folders by clicking them in the browser, that brings up a selection editor and zip button in the bottom right

|

you can also zip a selection of files or folders by clicking them in the browser, that brings up a selection editor and zip button in the bottom right

|

||||||

|

|

||||||

@@ -206,9 +281,11 @@ two upload methods are available in the html client:

|

|||||||

up2k has several advantages:

|

up2k has several advantages:

|

||||||

* you can drop folders into the browser (files are added recursively)

|

* you can drop folders into the browser (files are added recursively)

|

||||||

* files are processed in chunks, and each chunk is checksummed

|

* files are processed in chunks, and each chunk is checksummed

|

||||||

* uploads resume if they are interrupted (for example by a reboot)

|

* uploads autoresume if they are interrupted by network issues

|

||||||

|

* uploads resume if you reboot your browser or pc, just upload the same files again

|

||||||

* server detects any corruption; the client reuploads affected chunks

|

* server detects any corruption; the client reuploads affected chunks

|

||||||

* the client doesn't upload anything that already exists on the server

|

* the client doesn't upload anything that already exists on the server

|

||||||

|

* much higher speeds than ftp/scp/tarpipe on some internet connections (mainly american ones) thanks to parallel connections

|

||||||

* the last-modified timestamp of the file is preserved

|

* the last-modified timestamp of the file is preserved

|

||||||

|

|

||||||
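The chunking, resume, and dedupe points in the list above can be sketched as follows. This is an illustration under stated assumptions, not the up2k protocol: the SHA-512 digest, the chunk size, and the function names `chunk_hashes` and `chunks_to_send` are all hypothetical; the source only says that files are processed in chunks and each chunk is checksummed.

```python
import hashlib


def chunk_hashes(data, chunk_size):
    """Return one SHA-512 hex digest per fixed-size chunk of `data`."""
    return [
        hashlib.sha512(data[i : i + chunk_size]).hexdigest()
        for i in range(0, len(data), chunk_size)
    ]


def chunks_to_send(local, on_server):
    """Indexes of chunks whose digest the server does not already hold."""
    return [i for i, digest in enumerate(local) if digest not in on_server]


if __name__ == "__main__":
    hashes = chunk_hashes(b"aaaabbbbcccc", 4)
    # pretend an earlier, interrupted upload delivered chunks 0 and 2
    print(chunks_to_send(hashes, {hashes[0], hashes[2]}))
```

Resuming then amounts to hashing the same file again and sending only the chunks the server is missing, and a corrupted chunk is simply one whose digest does not match and gets sent again.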

see [up2k](#up2k) for details on how it works

|

see [up2k](#up2k) for details on how it works

|

||||||

@@ -241,11 +318,11 @@ in the `[🚀 up2k]` tab, after toggling the `[🔎]` switch green, any files/fo

|

|||||||

files go into `[ok]` if they exist (and you get a link to where it is), otherwise they land in `[ng]`

|

files go into `[ok]` if they exist (and you get a link to where it is), otherwise they land in `[ng]`

|

||||||

* the main reason filesearch is combined with the uploader is cause the code was too spaghetti to separate it out somewhere else, this is no longer the case but now i've warmed up to the idea too much

|

* the main reason filesearch is combined with the uploader is cause the code was too spaghetti to separate it out somewhere else, this is no longer the case but now i've warmed up to the idea too much

|

||||||

|

|

||||||

adding the same file multiple times is blocked, so if you first search for a file and then decide to upload it, you have to click the `[cleanup]` button to discard `[done]` files

|

adding the same file multiple times is blocked, so if you first search for a file and then decide to upload it, you have to click the `[cleanup]` button to discard `[done]` files (or just refresh the page)

|

||||||

|

|

||||||

note that since up2k has to read the file twice, `[🎈 bup]` can be up to 2x faster in extreme cases (if your internet connection is faster than the read-speed of your HDD)

|

note that since up2k has to read the file twice, `[🎈 bup]` can be up to 2x faster in extreme cases (if your internet connection is faster than the read-speed of your HDD)

|

||||||

|

|

||||||

up2k has saved a few uploads from becoming corrupted in-transfer already; caught an android phone on wifi redhanded in wireshark with a bitflip, however bup with https would *probably* have noticed as well thanks to tls also functioning as an integrity check

|

up2k has saved a few uploads from becoming corrupted in-transfer already; caught an android phone on wifi redhanded in wireshark with a bitflip, however bup with https would *probably* have noticed as well (thanks to tls also functioning as an integrity check)

|

||||||

|

|

||||||

|

|

||||||

## markdown viewer

|

## markdown viewer

|

||||||

@@ -259,6 +336,8 @@ up2k has saved a few uploads from becoming corrupted in-transfer already; caught

|

|||||||

|

|

||||||

* you can link a particular timestamp in an audio file by adding it to the URL, such as `&20` / `&20s` / `&1m20` / `&t=1:20` after the `.../#af-c8960dab`

|

* you can link a particular timestamp in an audio file by adding it to the URL, such as `&20` / `&20s` / `&1m20` / `&t=1:20` after the `.../#af-c8960dab`

|

||||||

|

|

||||||

|

* if you are using media hotkeys to switch songs and are getting tired of seeing the OSD popup which Windows doesn't let you disable, consider https://ocv.me/dev/?media-osd-bgone.ps1

|

||||||

|

|

||||||

|

|

||||||
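The timestamp suffixes listed above (`&20`, `&20s`, `&1m20`, `&t=1:20`) all describe an offset in seconds. A minimal sketch of turning the part after the `&` into seconds, assuming a hypothetical function name `parse_seek`; copyparty's own client-side parsing is not shown in this diff and may accept more forms (hours, for instance, are not handled here):

```python
import re


def parse_seek(arg):
    """Convert `20`, `20s`, `1m20`, or `t=1:20` into seconds (sketch)."""
    if arg.startswith("t="):
        arg = arg[2:]
    m = re.fullmatch(r"(?:(\d+)[m:])?(\d+)s?", arg)
    if not m:
        raise ValueError("unrecognised timestamp: " + arg)
    minutes = int(m.group(1) or 0)
    return minutes * 60 + int(m.group(2))


if __name__ == "__main__":
    for suffix in ("20", "20s", "1m20", "t=1:20"):
        print(suffix, "->", parse_seek(suffix), "seconds")
```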

# searching

|

# searching

|

||||||

|

|

||||||

@@ -281,20 +360,40 @@ searching relies on two databases, the up2k filetree (`-e2d`) and the metadata t

|

|||||||

|

|

||||||

through arguments:

|

through arguments:

|

||||||

* `-e2d` enables file indexing on upload

|

* `-e2d` enables file indexing on upload

|

||||||

* `-e2ds` scans writable folders on startup

|

* `-e2ds` scans writable folders for new files on startup

|

||||||

* `-e2dsa` scans all mounted volumes (including readonly ones)

|

* `-e2dsa` scans all mounted volumes (including readonly ones)

|

||||||

* `-e2t` enables metadata indexing on upload

|

* `-e2t` enables metadata indexing on upload

|

||||||

* `-e2ts` scans for tags in all files that don't have tags yet

|

* `-e2ts` scans for tags in all files that don't have tags yet

|

||||||

* `-e2tsr` deletes all existing tags, so a full reindex

|

* `-e2tsr` deletes all existing tags, does a full reindex

|

||||||

|

|

||||||

the same arguments can be set as volume flags, in addition to `d2d` and `d2t` for disabling:

|

the same arguments can be set as volume flags, in addition to `d2d` and `d2t` for disabling:

|

||||||

* `-v ~/music::r:ce2dsa:ce2tsr` does a full reindex of everything on startup

|

* `-v ~/music::r:ce2dsa:ce2tsr` does a full reindex of everything on startup

|

||||||

* `-v ~/music::r:cd2d` disables **all** indexing, even if any `-e2*` are on

|

* `-v ~/music::r:cd2d` disables **all** indexing, even if any `-e2*` are on

|

||||||

* `-v ~/music::r:cd2t` disables all `-e2t*` (tags), does not affect `-e2d*`

|

* `-v ~/music::r:cd2t` disables all `-e2t*` (tags), does not affect `-e2d*`

|

||||||

|

|

||||||

`e2tsr` is probably always overkill, since `e2ds`/`e2dsa` would pick up any file modifications and cause `e2ts` to reindex those

|

note:

|

||||||

|

* `e2tsr` is probably always overkill, since `e2ds`/`e2dsa` would pick up any file modifications and `e2ts` would then reindex those, unless there is a new copyparty version with new parsers and the release note says otherwise

|

||||||

|

* the rescan button in the admin panel has no effect unless the volume has `-e2ds` or higher

|

||||||

|

|

||||||

the rescan button in the admin panel has no effect unless the volume has `-e2ds` or higher

|

you can choose to only index filename/path/size/last-modified (and not the hash of the file contents) by setting `--no-hash` or the volume-flag `cdhash`, this has the following consequences:

|

||||||

|

* initial indexing is way faster, especially when the volume is on a network disk

|

||||||

|

* makes it impossible to [file-search](#file-search)

|

||||||

|

* if someone uploads the same file contents, the upload will not be detected as a dupe, so it will not get symlinked or rejected

|

||||||

|

|

||||||

|

if you set `--no-hash`, you can enable hashing for specific volumes using flag `cehash`

|

||||||

|

|

||||||

|

|

||||||

|

## database location

|

||||||

|

|

||||||

|

copyparty creates a subfolder named `.hist` inside each volume where it stores the database, thumbnails, and some other stuff

|

||||||

|

|

||||||

|

this can instead be kept in a single place using the `--hist` argument, or the `hist=` volume flag, or a mix of both:

|

||||||

|

* `--hist ~/.cache/copyparty -v ~/music::r:chist=-` sets `~/.cache/copyparty` as the default place to put volume info, but `~/music` gets the regular `.hist` subfolder (`-` restores default behavior)

|

||||||

|

|

||||||

|

note:

|

||||||

|

* markdown edits are always stored in a local `.hist` subdirectory

|

||||||

|

* on windows the volflag path is cyglike, so `/c/temp` means `C:\temp` but use regular paths for `--hist`

|

||||||

|

* you can use cygpaths for volumes too, `-v C:\Users::r` and `-v /c/users::r` both work

|

||||||

|

|

||||||

|

|

||||||

## metadata from audio files

|

## metadata from audio files

|

||||||

@@ -310,17 +409,17 @@ tags that start with a `.` such as `.bpm` and `.dur`(ation) indicate numeric val

|

|||||||

|

|

||||||

see the beautiful mess of a dictionary in [mtag.py](https://github.com/9001/copyparty/blob/master/copyparty/mtag.py) for the default mappings (should cover mp3,opus,flac,m4a,wav,aif,)

|

see the beautiful mess of a dictionary in [mtag.py](https://github.com/9001/copyparty/blob/master/copyparty/mtag.py) for the default mappings (should cover mp3,opus,flac,m4a,wav,aif,)

|

||||||

|

|

||||||

`--no-mutagen` disables mutagen and uses ffprobe instead, which...

|

`--no-mutagen` disables Mutagen and uses FFprobe instead, which...

|

||||||

* is about 20x slower than mutagen

|

* is about 20x slower than Mutagen

|

||||||

* catches a few tags that mutagen doesn't

|

* catches a few tags that Mutagen doesn't

|

||||||

* melodic key, video resolution, framerate, pixfmt

|

* melodic key, video resolution, framerate, pixfmt

|

||||||

* avoids pulling any GPL code into copyparty

|

* avoids pulling any GPL code into copyparty

|

||||||

* more importantly runs ffprobe on incoming files which is bad if your ffmpeg has a cve

|

* more importantly runs FFprobe on incoming files which is bad if your FFmpeg has a cve

|

||||||

|

|

||||||

|

|

||||||

## file parser plugins

|

## file parser plugins

|

||||||

|

|

||||||

copyparty can invoke external programs to collect additional metadata for files using `mtp` (as argument or volume flag), there is a default timeout of 30sec

|

copyparty can invoke external programs to collect additional metadata for files using `mtp` (either as argument or volume flag), there is a default timeout of 30sec

|

||||||

|

|

||||||

* `-mtp .bpm=~/bin/audio-bpm.py` will execute `~/bin/audio-bpm.py` with the audio file as argument 1 to provide the `.bpm` tag, if that does not exist in the audio metadata

|

* `-mtp .bpm=~/bin/audio-bpm.py` will execute `~/bin/audio-bpm.py` with the audio file as argument 1 to provide the `.bpm` tag, if that does not exist in the audio metadata

|

||||||

* `-mtp key=f,t5,~/bin/audio-key.py` uses `~/bin/audio-key.py` to get the `key` tag, replacing any existing metadata tag (`f,`), aborting if it takes longer than 5sec (`t5,`)

|

* `-mtp key=f,t5,~/bin/audio-key.py` uses `~/bin/audio-key.py` to get the `key` tag, replacing any existing metadata tag (`f,`), aborting if it takes longer than 5sec (`t5,`)
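a parser plugin can be very small. The sketch below is a hypothetical example, not one of the bundled tools: it takes the file path as argument 1 (as described above) and reports the file size in KiB. That the value is handed back by printing it to stdout is an assumption, since this section does not spell out the return channel, and the tag name `.kib` and the script path are made up:

```python
#!/usr/bin/env python3
import os
import sys


def measure(path):
    """Return the tag value for one file; here simply its size in KiB."""
    return round(os.path.getsize(path) / 1024, 1)


# argument 1 is the file to analyze; the result goes to stdout (assumed convention)
if __name__ == "__main__" and len(sys.argv) == 2:
    print(measure(sys.argv[1]))
```

if saved as `~/bin/size-kib.py` it would be hooked up the same way as the examples above, for instance `-mtp .kib=t5,~/bin/size-kib.py` to abort after 5sec.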

|

||||||

@@ -354,11 +453,13 @@ copyparty can invoke external programs to collect additional metadata for files

|

|||||||

| zip selection | - | yep | yep | yep | yep | yep | yep | yep |

|

| zip selection | - | yep | yep | yep | yep | yep | yep | yep |

|

||||||

| directory tree | - | - | `*1` | yep | yep | yep | yep | yep |

|

| directory tree | - | - | `*1` | yep | yep | yep | yep | yep |

|

||||||

| up2k | - | - | yep | yep | yep | yep | yep | yep |

|

| up2k | - | - | yep | yep | yep | yep | yep | yep |

|

||||||

| icons work | - | - | yep | yep | yep | yep | yep | yep |

|

|

||||||

| markdown editor | - | - | yep | yep | yep | yep | yep | yep |

|

| markdown editor | - | - | yep | yep | yep | yep | yep | yep |

|

||||||

| markdown viewer | - | - | yep | yep | yep | yep | yep | yep |

|

| markdown viewer | - | - | yep | yep | yep | yep | yep | yep |

|

||||||

| play mp3/m4a | - | yep | yep | yep | yep | yep | yep | yep |

|

| play mp3/m4a | - | yep | yep | yep | yep | yep | yep | yep |

|

||||||

| play ogg/opus | - | - | - | - | yep | yep | `*2` | yep |

|

| play ogg/opus | - | - | - | - | yep | yep | `*2` | yep |

|

||||||

|

| thumbnail view | - | - | - | - | yep | yep | yep | yep |

|

||||||

|

| image viewer | - | - | - | - | yep | yep | yep | yep |

|

||||||

|

| **= feature =** | ie6 | ie9 | ie10 | ie11 | ff 52 | c 49 | iOS | Andr |

|

||||||

|

|

||||||

* internet explorer 6 to 8 behave the same

|

* internet explorer 6 to 8 behave the same

|

||||||

* firefox 52 and chrome 49 are the last winxp versions

|

* firefox 52 and chrome 49 are the last winxp versions

|

||||||

@@ -376,7 +477,7 @@ quick summary of more eccentric web-browsers trying to view a directory index:

|

|||||||

| **w3m** (0.5.3/macports) | can browse, login, upload at 100kB/s, mkdir/msg |

|

| **w3m** (0.5.3/macports) | can browse, login, upload at 100kB/s, mkdir/msg |

|

||||||

| **netsurf** (3.10/arch) | is basically ie6 with much better css (javascript has almost no effect) |

|

| **netsurf** (3.10/arch) | is basically ie6 with much better css (javascript has almost no effect) |

|

||||||

| **ie4** and **netscape** 4.0 | can browse (text is yellow on white), upload with `?b=u` |

|

| **ie4** and **netscape** 4.0 | can browse (text is yellow on white), upload with `?b=u` |

|

||||||

| **SerenityOS** (22d13d8) | hits a page fault, works with `?b=u`, file input not-impl, url params are multiplying |

|

| **SerenityOS** (7e98457) | hits a page fault, works with `?b=u`, file upload not-impl |

|

||||||

|

|

||||||

|

|

||||||

# client examples

|

# client examples

|

||||||

@@ -397,9 +498,11 @@ quick summary of more eccentric web-browsers trying to view a directory index:

|

|||||||

* cross-platform python client available in [./bin/](bin/)

|

* cross-platform python client available in [./bin/](bin/)

|

||||||

* [rclone](https://rclone.org/) as client can give ~5x performance, see [./docs/rclone.md](docs/rclone.md)

|

* [rclone](https://rclone.org/) as client can give ~5x performance, see [./docs/rclone.md](docs/rclone.md)

|

||||||

|

|

||||||

|

* sharex (screenshot utility): see [./contrib/sharex.sxcu](contrib/#sharexsxcu)

|

||||||

|

|

||||||

copyparty returns a truncated sha512sum of your PUT/POST as base64; you can generate the same checksum locally to verify uploads:

|

copyparty returns a truncated sha512sum of your PUT/POST as base64; you can generate the same checksum locally to verify uploads:

|

||||||

|

|

||||||

b512(){ printf "$((sha512sum||shasum -a512)|sed -E 's/ .*//;s/(..)/\\x\1/g')"|base64|head -c43;}

|

b512(){ printf "$((sha512sum||shasum -a512)|sed -E 's/ .*//;s/(..)/\\x\1/g')"|base64|tr '+/' '-_'|head -c44;}

|

||||||

b512 <movie.mkv

|

b512 <movie.mkv
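for clients without a posix shell, the updated one-liner (url-safe alphabet, first 44 characters) can be mirrored in python. This is a sketch of the same arithmetic using only the standard library; the function names are made up, and whether the result matches a given server depends on that server running a version that uses the 44-character url-safe form:

```python
import base64
import hashlib


def _truncate(digest):
    # url-safe base64 swaps '+/' for '-_', same as `tr '+/' '-_'`;
    # keeping 44 characters matches `head -c44`
    return base64.urlsafe_b64encode(digest).decode("ascii")[:44]


def b512(data):
    """Truncated sha512 of a bytes object."""
    return _truncate(hashlib.sha512(data).digest())


def b512_file(path, bufsize=1024 * 1024):
    """Same checksum for a file, read in chunks to keep memory use flat."""
    h = hashlib.sha512()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(bufsize), b""):
            h.update(chunk)
    return _truncate(h.digest())
```

44 base64 characters encode exactly the first 33 bytes of the digest, so no padding characters end up in the result.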

|

||||||

|

|

||||||

|

|

||||||

@@ -419,6 +522,23 @@ quick outline of the up2k protocol, see [uploading](#uploading) for the web-clie

|

|||||||

* client does another handshake with the hashlist; server replies with OK or a list of chunks to reupload

|

* client does another handshake with the hashlist; server replies with OK or a list of chunks to reupload

|

||||||

|

|

||||||

|

|

||||||

|

# performance

|

||||||

|

|

||||||

|

defaults are good for most cases, don't mind the `cannot efficiently use multiple CPU cores` message, it's very unlikely to be a problem

|

||||||

|

|

||||||

|

below are some tweaks roughly ordered by usefulness:

|

||||||

|

|

||||||

|

* `-q` disables logging and can help a bunch, even when combined with `-lo` to redirect logs to file

|

||||||

|

* `--http-only` or `--https-only` (unless you want to support both protocols) will reduce the delay before a new connection is established

|

||||||

|

* `--hist` pointing to a fast location (ssd) will make directory listings and searches faster when `-e2d` or `-e2t` is set

|

||||||

|

* `--no-hash` when indexing a network-disk if you don't care about the actual filehashes and only want the names/tags searchable

|

||||||

|

* `-j` enables multiprocessing (actual multithreading) and can make copyparty perform better in cpu-intensive workloads, for example:

|

||||||

|

* huge amount of short-lived connections

|

||||||

|

* really heavy traffic (downloads/uploads)

|

||||||

|

|

||||||

|

...however it adds an overhead to internal communication so it might be a net loss, see if it works 4 u

|

||||||

|

|

||||||

|

|

||||||

# dependencies

|

# dependencies

|

||||||

|

|

||||||

* `jinja2` (is built into the SFX)

|

* `jinja2` (is built into the SFX)

|

||||||

@@ -428,18 +548,18 @@ quick outline of the up2k protocol, see [uploading](#uploading) for the web-clie

|

|||||||

|

|

||||||

enable music tags:

|

enable music tags:

|

||||||

* either `mutagen` (fast, pure-python, skips a few tags, makes copyparty GPL? idk)

|

* either `mutagen` (fast, pure-python, skips a few tags, makes copyparty GPL? idk)

|

||||||

* or `FFprobe` (20x slower, more accurate, possibly dangerous depending on your distro and users)

|

* or `ffprobe` (20x slower, more accurate, possibly dangerous depending on your distro and users)

|

||||||

|

|

||||||

enable image thumbnails:

|

enable thumbnails of images:

|

||||||

* `Pillow` (requires py2.7 or py3.5+)

|

* `Pillow` (requires py2.7 or py3.5+)

|

||||||

|

|

||||||

enable video thumbnails:

|

enable thumbnails of videos:

|

||||||

* `ffmpeg` and `ffprobe` somewhere in `$PATH`

|

* `ffmpeg` and `ffprobe` somewhere in `$PATH`

|

||||||

|

|

||||||

enable reading HEIF pictures:

|

enable thumbnails of HEIF pictures:

|

||||||

* `pyheif-pillow-opener` (requires Linux or a C compiler)

|

* `pyheif-pillow-opener` (requires Linux or a C compiler)

|

||||||

|

|

||||||

enable reading AVIF pictures:

|

enable thumbnails of AVIF pictures:

|

||||||

* `pillow-avif-plugin`

|

* `pillow-avif-plugin`

|

||||||

|

|

||||||

|

|

||||||

@@ -453,7 +573,7 @@ python -m pip install --user -U jinja2 mutagen Pillow

|

|||||||

|

|

||||||

some bundled tools have copyleft dependencies, see [./bin/#mtag](bin/#mtag)

|

some bundled tools have copyleft dependencies, see [./bin/#mtag](bin/#mtag)

|

||||||

|

|

||||||

these are standalone programs and will never be imported / evaluated by copyparty

|

these are standalone programs and will never be imported / evaluated by copyparty, and must be enabled through `-mtp` configs

|

||||||

|

|

||||||

|

|

||||||

# sfx

|

# sfx

|

||||||

@@ -469,10 +589,10 @@ pls note that `copyparty-sfx.sh` will fail if you rename `copyparty-sfx.py` to `

|

|||||||

|

|

||||||

## sfx repack

|

## sfx repack

|

||||||

|

|

||||||

if you don't need all the features you can repack the sfx and save a bunch of space; all you need is an sfx and a copy of this repo (nothing else to download or build, except for either msys2 or WSL if you're on windows)

|

if you don't need all the features, you can repack the sfx and save a bunch of space; all you need is an sfx and a copy of this repo (nothing else to download or build, except if you're on windows then you need msys2 or WSL)

|

||||||

* `724K` original size as of v0.4.0

|

* `525k` size of original sfx.py as of v0.11.30

|

||||||

* `256K` after `./scripts/make-sfx.sh re no-ogv`

|

* `315k` after `./scripts/make-sfx.sh re no-ogv`

|

||||||

* `164K` after `./scripts/make-sfx.sh re no-ogv no-cm`

|

* `223k` after `./scripts/make-sfx.sh re no-ogv no-cm`

|

||||||

|

|

||||||

the features you can opt to drop are

|

the features you can opt to drop are

|

||||||

* `ogv`.js, the opus/vorbis decoder which is needed by apple devices to play foss audio files

|

* `ogv`.js, the opus/vorbis decoder which is needed by apple devices to play foss audio files

|

||||||

@@ -494,18 +614,45 @@ echo $?

|

|||||||

after the initial setup, you can launch copyparty at any time by running `copyparty` anywhere in Termux

|

after the initial setup, you can launch copyparty at any time by running `copyparty` anywhere in Termux

|

||||||

|

|

||||||

|

|

||||||

# dev env setup

|

# building

|

||||||

|

|

||||||

|

## dev env setup

|

||||||

|

|

||||||

|

mostly optional; if you need a working env for vscode or similar

|

||||||

|

|

||||||

```sh

|

```sh

|

||||||

python3 -m venv .venv

|

python3 -m venv .venv

|

||||||

. .venv/bin/activate

|

. .venv/bin/activate

|

||||||

pip install jinja2 # mandatory deps

|

pip install jinja2 # mandatory

|

||||||

pip install Pillow # thumbnail deps

|

pip install mutagen # audio metadata

|

||||||

|

pip install Pillow pyheif-pillow-opener pillow-avif-plugin # thumbnails

|

||||||

pip install black bandit pylint flake8 # vscode tooling

|

pip install black bandit pylint flake8 # vscode tooling

|

||||||

```

|

```

|

||||||

|

|

||||||

|

|

||||||

# how to release

|

## just the sfx

|

||||||

|

|

||||||

|

unless you need to modify something in the web-dependencies, it's faster to grab those from a previous release:

|

||||||

|

|

||||||

|

```sh

|

||||||

|

rm -rf copyparty/web/deps

|

||||||

|

curl -L https://github.com/9001/copyparty/releases/latest/download/copyparty-sfx.py >x.py

|

||||||

|

python3 x.py -h

|

||||||

|

rm x.py

|

||||||

|

mv /tmp/pe-copyparty/copyparty/web/deps/ copyparty/web/deps/

|

||||||

|

```

|

||||||

|

|

||||||

|

then build the sfx using any of the following examples:

|

||||||

|

|

||||||

|

```sh

|

||||||

|

./scripts/make-sfx.sh # both python and sh editions

|

||||||

|

./scripts/make-sfx.sh no-sh gz # just python with gzip

|

||||||

|

```

|

||||||

|

|

||||||

|

|

||||||

|

## complete release

|

||||||

|

|

||||||

|

also builds the sfx so disregard the sfx section above

|

||||||

|

|

||||||

in the `scripts` folder:

|

in the `scripts` folder:

|

||||||

|

|

||||||

@@ -520,14 +667,18 @@ in the `scripts` folder:

|

|||||||

|

|

||||||

roughly sorted by priority

|

roughly sorted by priority

|

||||||

|

|

||||||

|

* hls framework for Someone Else to drop code into :^)

|

||||||

* readme.md as epilogue

|

* readme.md as epilogue

|

||||||

* single sha512 across all up2k chunks? maybe

|

|

||||||

|

|

||||||

|

## discarded ideas

|

||||||

|

|

||||||

* reduce up2k roundtrips

|

* reduce up2k roundtrips

|

||||||

* start from a chunk index and just go

|

* start from a chunk index and just go

|

||||||

* terminate client on bad data

|

* terminate client on bad data

|

||||||

|

* not worth the effort, just throw enough connections at it

|

||||||

discarded ideas

|

* single sha512 across all up2k chunks?

|

||||||

|

* crypto.subtle cannot into streaming, would have to use hashwasm, expensive

|

||||||

* separate sqlite table per tag

|

* separate sqlite table per tag

|

||||||

* performance fixed by skipping some indexes (`+mt.k`)

|

* performance fixed by skipping some indexes (`+mt.k`)

|

||||||

* audio fingerprinting

|

* audio fingerprinting

|

||||||

@@ -542,3 +693,6 @@ discarded ideas

|

|||||||

* nah

|

* nah

|

||||||

* look into android thumbnail cache file format

|

* look into android thumbnail cache file format

|

||||||

* absolutely not

|

* absolutely not

|

||||||

|

* indexedDB for hashes, cfg enable/clear/sz, 2gb avail, ~9k for 1g, ~4k for 100m, 500k items before autoeviction

|

||||||

|

* blank hashlist when up-ok to skip handshake

|

||||||

|

* too many confusing side-effects

|

||||||

|

|||||||

@@ -48,15 +48,16 @@ you could replace winfsp with [dokan](https://github.com/dokan-dev/dokany/releas

|

|||||||

|

|

||||||

|

|

||||||

# [`dbtool.py`](dbtool.py)

|

# [`dbtool.py`](dbtool.py)

|

||||||

upgrade utility which can show db info and help transfer data between databases, for example when a new version of copyparty recommends to wipe the DB and reindex because it now collects additional metadata during analysis, but you have some really expensive `-mtp` parsers and want to copy over the tags from the old db

|

upgrade utility which can show db info and help transfer data between databases, for example when a new version of copyparty is incompatible with the old DB and automatically rebuilds the DB from scratch, but you have some really expensive `-mtp` parsers and want to copy over the tags from the old db

|

||||||

|

|

||||||

for that example (upgrading to v0.11.0), first move the old db aside, launch copyparty, let it rebuild the db until the point where it starts running mtp (colored messages as it adds the mtp tags), then CTRL-C and patch in the old mtp tags from the old db instead

|

for that example (upgrading to v0.11.20), first launch the new version of copyparty like usual, let it make a backup of the old db and rebuild the new db until the point where it starts running mtp (colored messages as it adds the mtp tags), that's when you hit CTRL-C and patch in the old mtp tags from the old db instead

|

||||||

|

|

||||||

so assuming you have `-mtp` parsers to provide the tags `key` and `.bpm`:

|

so assuming you have `-mtp` parsers to provide the tags `key` and `.bpm`:

|

||||||

|

|

||||||

```

|

```

|

||||||

~/bin/dbtool.py -ls up2k.db

|

cd /mnt/nas/music/.hist

|

||||||

~/bin/dbtool.py -src up2k.db.v0.10.22 up2k.db -cmp

|

~/src/copyparty/bin/dbtool.py -ls up2k.db

|

||||||

~/bin/dbtool.py -src up2k.db.v0.10.22 up2k.db -rm-mtp-flag -copy key

|

~/src/copyparty/bin/dbtool.py -src up2k.*.v3 up2k.db -cmp

|

||||||

~/bin/dbtool.py -src up2k.db.v0.10.22 up2k.db -rm-mtp-flag -copy .bpm -vac

|

~/src/copyparty/bin/dbtool.py -src up2k.*.v3 up2k.db -rm-mtp-flag -copy key

|

||||||

|

~/src/copyparty/bin/dbtool.py -src up2k.*.v3 up2k.db -rm-mtp-flag -copy .bpm -vac

|

||||||

```

|

```

|

||||||

|

|||||||

@@ -345,7 +345,7 @@ class Gateway(object):

|

|||||||

except:

|

except:

|

||||||

pass

|

pass

|

||||||

|

|

||||||

def sendreq(self, *args, headers={}, **kwargs):

|

def sendreq(self, meth, path, headers, **kwargs):

|

||||||

if self.password:

|

if self.password:

|

||||||

headers["Cookie"] = "=".join(["cppwd", self.password])

|

headers["Cookie"] = "=".join(["cppwd", self.password])

|

||||||

|

|

||||||
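the signature change above replaces `*args, headers={}` with explicit `meth, path, headers` parameters. The diff does not state the motivation, so the following is only one plausible reading: a `{}` default is created once and shared between calls, and because `sendreq` writes the `Cookie` header into it, values can leak from one call to the next. (Separately, a keyword argument after `*args` is python3-only syntax.) A minimal standalone sketch of the pitfall, with simplified stand-in functions and a placeholder password:

```python
def sendreq_old(password, headers={}):
    # the default dict is built once, at definition time, and reused by every call
    if password:
        headers["Cookie"] = "=".join(["cppwd", password])
    return headers


def sendreq_new(password, headers):
    # caller supplies a fresh dict each time, so nothing is shared
    if password:
        headers["Cookie"] = "=".join(["cppwd", password])
    return headers


sendreq_old("hunter2")
leaked = sendreq_old(None)      # no password given, yet the cookie is still there
clean = sendreq_new(None, {})   # explicit dict stays empty

print("old:", leaked)
print("new:", clean)
```

this matches how the call sites in the diff now pass `{}` or `{"Range": hdr_range}` explicitly.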

@@ -354,21 +354,21 @@ class Gateway(object):

|

|||||||

if c.rx_path:

|

if c.rx_path:

|

||||||

raise Exception()

|

raise Exception()

|

||||||

|

|

||||||

c.request(*list(args), headers=headers, **kwargs)

|

c.request(meth, path, headers=headers, **kwargs)

|

||||||

c.rx = c.getresponse()

|

c.rx = c.getresponse()

|

||||||

return c

|

return c

|

||||||

except:

|

except:

|

||||||

tid = threading.current_thread().ident

|

tid = threading.current_thread().ident

|

||||||

dbg(

|

dbg(

|

||||||

"\033[1;37;44mbad conn {:x}\n {}\n {}\033[0m".format(

|

"\033[1;37;44mbad conn {:x}\n {} {}\n {}\033[0m".format(

|

||||||

tid, " ".join(str(x) for x in args), c.rx_path if c else "(null)"

|

tid, meth, path, c.rx_path if c else "(null)"

|

||||||

)

|

)

|

||||||

)

|

)

|

||||||

|

|

||||||

self.closeconn(c)

|

self.closeconn(c)

|

||||||

c = self.getconn()

|

c = self.getconn()

|

||||||

try:

|

try:

|

||||||

c.request(*list(args), headers=headers, **kwargs)

|

c.request(meth, path, headers=headers, **kwargs)

|

||||||

c.rx = c.getresponse()

|

c.rx = c.getresponse()

|

||||||

return c

|

return c

|

||||||

except:

|

except:

|

||||||

@@ -386,7 +386,7 @@ class Gateway(object):

|

|||||||

path = dewin(path)

|

path = dewin(path)

|

||||||

|

|

||||||

web_path = self.quotep("/" + "/".join([self.web_root, path])) + "?dots"

|

web_path = self.quotep("/" + "/".join([self.web_root, path])) + "?dots"

|

||||||

c = self.sendreq("GET", web_path)

|

c = self.sendreq("GET", web_path, {})

|

||||||

if c.rx.status != 200:

|

if c.rx.status != 200:

|

||||||

self.closeconn(c)

|

self.closeconn(c)

|

||||||

log(

|

log(

|

||||||

@@ -440,7 +440,7 @@ class Gateway(object):

|

|||||||

)

|

)

|

||||||

)

|

)

|

||||||

|

|

||||||

c = self.sendreq("GET", web_path, headers={"Range": hdr_range})

|

c = self.sendreq("GET", web_path, {"Range": hdr_range})

|

||||||

if c.rx.status != http.client.PARTIAL_CONTENT:

|

if c.rx.status != http.client.PARTIAL_CONTENT:

|

||||||

self.closeconn(c)

|

self.closeconn(c)

|

||||||

raise Exception(

|

raise Exception(

|

||||||

|

|||||||

@@ -54,6 +54,15 @@ MACOS = platform.system() == "Darwin"

|

|||||||

info = log = dbg = None

|

info = log = dbg = None

|

||||||

|

|

||||||

|

|

||||||

|

print(

|

||||||

|

"{} v{} @ {}".format(

|

||||||

|

platform.python_implementation(),

|

||||||

|

".".join([str(x) for x in sys.version_info]),

|

||||||

|

sys.executable,

|

||||||

|

)

|

||||||

|

)

|

||||||

|

|

||||||

|

|

||||||

try:

|

try:

|

||||||

from fuse import FUSE, FuseOSError, Operations

|

from fuse import FUSE, FuseOSError, Operations

|

||||||

except:

|

except:

|

||||||

@@ -293,14 +302,14 @@ class Gateway(object):

|

|||||||

except:

|

except:

|

||||||

pass

|

pass

|

||||||

|

|

||||||

def sendreq(self, *args, headers={}, **kwargs):

|

def sendreq(self, meth, path, headers, **kwargs):

|

||||||

tid = get_tid()

|

tid = get_tid()

|

||||||

if self.password:

|

if self.password:

|

||||||

headers["Cookie"] = "=".join(["cppwd", self.password])

|

headers["Cookie"] = "=".join(["cppwd", self.password])

|

||||||

|

|

||||||

try:

|

try:

|

||||||

c = self.getconn(tid)

|

c = self.getconn(tid)

|

||||||

c.request(*list(args), headers=headers, **kwargs)

|

c.request(meth, path, headers=headers, **kwargs)

|

||||||

return c.getresponse()

|

return c.getresponse()

|

||||||

except:

|

except:

|

||||||

dbg("bad conn")

|

dbg("bad conn")

|

||||||

@@ -308,7 +317,7 @@ class Gateway(object):

|

|||||||

self.closeconn(tid)

|

self.closeconn(tid)

|

||||||

try:

|

try:

|

||||||

c = self.getconn(tid)

|

c = self.getconn(tid)

|

||||||

c.request(*list(args), headers=headers, **kwargs)

|

c.request(meth, path, headers=headers, **kwargs)

|

||||||

return c.getresponse()

|

return c.getresponse()

|

||||||

except:

|

except:

|

||||||

info("http connection failed:\n" + traceback.format_exc())

|

info("http connection failed:\n" + traceback.format_exc())

|

||||||

@@ -325,7 +334,7 @@ class Gateway(object):

|

|||||||

path = dewin(path)

|

path = dewin(path)

|

||||||

|

|

||||||

web_path = self.quotep("/" + "/".join([self.web_root, path])) + "?dots&ls"

|

web_path = self.quotep("/" + "/".join([self.web_root, path])) + "?dots&ls"

|

||||||

r = self.sendreq("GET", web_path)

|

r = self.sendreq("GET", web_path, {})

|

||||||

if r.status != 200:

|

if r.status != 200:

|

||||||

self.closeconn()

|

self.closeconn()

|

||||||

log(

|

log(

|

||||||

@@ -362,7 +371,7 @@ class Gateway(object):

|

|||||||

)

|

)

|

||||||

)

|

)

|

||||||

|

|

||||||

r = self.sendreq("GET", web_path, headers={"Range": hdr_range})

|

r = self.sendreq("GET", web_path, {"Range": hdr_range})

|

||||||

if r.status != http.client.PARTIAL_CONTENT:

|

if r.status != http.client.PARTIAL_CONTENT:

|

||||||

self.closeconn()

|

self.closeconn()

|

||||||

raise Exception(

|

raise Exception(

|

||||||

|

|||||||

@@ -2,10 +2,13 @@

|

|||||||

|

|

||||||

import os

|

import os

|

||||||

import sys

|

import sys

|

||||||

|

import time

|

||||||

|

import shutil

|

||||||

import sqlite3

|

import sqlite3

|

||||||

import argparse

|

import argparse

|

||||||

|

|

||||||

DB_VER = 3

|

DB_VER1 = 3

|

||||||

|

DB_VER2 = 4

|

||||||

|

|

||||||

|

|

||||||

def die(msg):

|

def die(msg):

|

||||||

@@ -45,18 +48,21 @@ def compare(n1, d1, n2, d2, verbose):

|

|||||||

nt = next(d1.execute("select count(w) from up"))[0]

|

nt = next(d1.execute("select count(w) from up"))[0]

|

||||||

n = 0

|

n = 0

|

||||||

miss = 0

|

miss = 0

|

||||||

for w, rd, fn in d1.execute("select w, rd, fn from up"):

|

for w1, rd, fn in d1.execute("select w, rd, fn from up"):

|

||||||

n += 1

|

n += 1

|

||||||

if n % 25_000 == 0:

|

if n % 25_000 == 0:

|

||||||

m = f"\033[36mchecked {n:,} of {nt:,} files in {n1} against {n2}\033[0m"

|

m = f"\033[36mchecked {n:,} of {nt:,} files in {n1} against {n2}\033[0m"

|

||||||

print(m)

|

print(m)

|

||||||

|

|

||||||

q = "select w from up where substr(w,1,16) = ?"

|

if rd.split("/", 1)[0] == ".hist":

|

||||||

hit = d2.execute(q, (w[:16],)).fetchone()

|