mirror of https://github.com/9001/copyparty.git

synced 2025-10-24 16:43:55 +00:00

Compare commits

37 Commits

| SHA1 |
|---|
| eb5aaddba4 |
| d8fd82bcb5 |
| 97be495861 |
| 8b53c159fc |
| 81e281f703 |
| 3948214050 |
| c5e9a643e7 |
| d25881d5c3 |
| 38d8d9733f |
| 118ebf668d |
| a86f09fa46 |
| dd4fb35c8f |
| 621eb4cf95 |
| deea66ad0b |
| bf99445377 |
| 7b54a63396 |
| 0fcb015f9a |
| 0a22b1ffb6 |
| 68cecc52ab |
| 53657ccfff |
| 96223fda01 |
| 374ff3433e |
| 5d63949e98 |
| 6b065d507d |
| e79997498a |
| f7ee02ec35 |
| 69dc433e1c |
| c880cd848c |
| 5752b6db48 |
| b36f905eab |
| 483dd527c6 |
| e55678e28f |
| 3f4a8b9d6f |
| 02a856ecb4 |
| 4dff726310 |
| cbc449036f |
| 8f53152220 |

51

README.md


@@ -13,7 +13,7 @@ turn your phone or raspi into a portable file server with resumable uploads/down
 * *resumable* uploads need `firefox 34+` / `chrome 41+` / `safari 7+` for full speed
 * code standard: `black`
 
-📷 screenshots: [browser](#the-browser) // [upload](#uploading) // [md-viewer](#markdown-viewer) // [search](#searching) // [fsearch](#file-search) // [zip-DL](#zip-downloads) // [ie4](#browser-support)
+📷 **screenshots:** [browser](#the-browser) // [upload](#uploading) // [thumbnails](#thumbnails) // [md-viewer](#markdown-viewer) // [search](#searching) // [fsearch](#file-search) // [zip-DL](#zip-downloads) // [ie4](#browser-support)
 
 
 ## readme toc

@@ -29,6 +29,7 @@ turn your phone or raspi into a portable file server with resumable uploads/down
 * [tabs](#tabs)
 * [hotkeys](#hotkeys)
 * [tree-mode](#tree-mode)
+* [thumbnails](#thumbnails)
 * [zip downloads](#zip-downloads)
 * [uploading](#uploading)
 * [file-search](#file-search)

@@ -43,6 +44,8 @@ turn your phone or raspi into a portable file server with resumable uploads/down
 * [client examples](#client-examples)
 * [up2k](#up2k)
 * [dependencies](#dependencies)
+* [optional dependencies](#optional-dependencies)
+* [install recommended deps](#install-recommended-deps)
 * [optional gpl stuff](#optional-gpl-stuff)
 * [sfx](#sfx)
 * [sfx repack](#sfx-repack)

@@ -75,6 +78,8 @@ you may also want these, especially on servers:
 
 ## status
 
+summary: all planned features work! now please enjoy the bloatening
+
 * backend stuff
 * ☑ sanic multipart parser
 * ☑ load balancer (multiprocessing)

@@ -92,7 +97,10 @@ you may also want these, especially on servers:
 * browser
 * ☑ tree-view
 * ☑ media player
-* ✖ thumbnails
+* ☑ thumbnails
+* ☑ images using Pillow
+* ☑ videos using FFmpeg
+* ☑ cache eviction (max-age; maybe max-size eventually)
 * ☑ SPA (browse while uploading)
 * if you use the file-tree on the left only, not folders in the file list
 * server indexing

@@ -103,8 +111,6 @@ you may also want these, especially on servers:
 * ☑ viewer
 * ☑ editor (sure why not)
 
-summary: it works! you can use it! (but technically not even close to beta)
-
 
 # bugs
 

@@ -145,11 +151,16 @@ summary: it works! you can use it! (but technically not even close to beta)

|

||||

the browser has the following hotkeys

|

||||

* `I/K` prev/next folder

|

||||

* `P` parent folder

|

||||

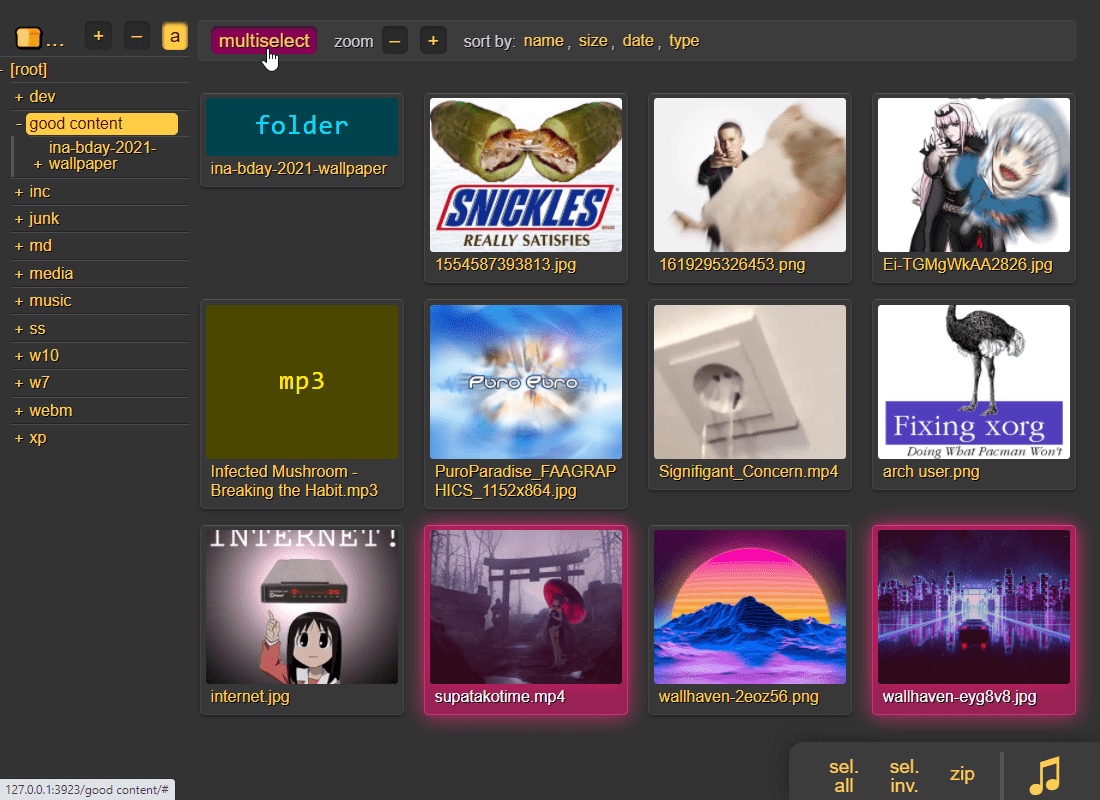

* `G` toggle list / grid view

|

||||

* `T` toggle thumbnails / icons

|

||||

* when playing audio:

|

||||

* `0..9` jump to 10%..90%

|

||||

* `U/O` skip 10sec back/forward

|

||||

* `J/L` prev/next song

|

||||

* `J` also starts playing the folder

|

||||

* in the grid view:

|

||||

* `S` toggle multiselect

|

||||

* `A/D` zoom

|

||||

|

||||

|

||||

## tree-mode

@@ -159,6 +170,13 @@ by default there's a breadcrumbs path; you can replace this with a tree-browser
 click `[-]` and `[+]` to adjust the size, and the `[a]` toggles if the tree should widen dynamically as you go deeper or stay fixed-size
 
 
+## thumbnails
+
+
+
+it does static images with Pillow and uses FFmpeg for video files, so you may want to `--no-thumb` or maybe just `--no-vthumb` depending on how destructive your users are
+
+
 ## zip downloads
 
 the `zip` link next to folders can produce various types of zip/tar files using these alternatives in the browser settings tab:

@@ -293,6 +311,7 @@ see the beautiful mess of a dictionary in [mtag.py](https://github.com/9001/copy
 `--no-mutagen` disables mutagen and uses ffprobe instead, which...
 * is about 20x slower than mutagen
 * catches a few tags that mutagen doesn't
+* melodic key, video resolution, framerate, pixfmt
 * avoids pulling any GPL code into copyparty
 * more importantly runs ffprobe on incoming files which is bad if your ffmpeg has a cve
 

@@ -308,6 +327,7 @@ copyparty can invoke external programs to collect additional metadata for files
 *but wait, there's more!* `-mtp` can be used for non-audio files as well using the `a` flag: `ay` only do audio files, `an` only do non-audio files, or `ad` do all files (d as in dontcare)
 
 * `-mtp ext=an,~/bin/file-ext.py` runs `~/bin/file-ext.py` to get the `ext` tag only if file is not audio (`an`)
+* `-mtp arch,built,ver,orig=an,eexe,edll,~/bin/exe.py` runs `~/bin/exe.py` to get properties about windows-binaries only if file is not audio (`an`) and file extension is exe or dll
 
 
 ## complete examples
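The `-mtp` specs in the README lines above pack tag names, optional flags and the program path into a single argument. Below is a simplified, standalone sketch of how such a spec can be taken apart, loosely following the `MParser` class further down in this diff. There is one deliberate difference. `MParser` finds where the program path starts by checking whether the remaining text exists on disk. This sketch instead assumes the path is the first field that does not look like one of the flags `a<y|n|d>`, `f`, `t<seconds>` or `e<ext>`. `parse_mtp` and `MtpSpec` are hypothetical names.

```python
import re
from dataclasses import dataclass, field
from typing import List

# flags this sketch recognises (the same set MParser handles)
FLAG_RE = re.compile(r"a[ynd]|f|t\d+|e\w+")


@dataclass
class MtpSpec:
    tags: List[str]
    binary: str
    audio: str = "y"  # y = audio files only, n = non-audio only, d = don't care
    force: bool = False
    timeout: int = 30
    exts: List[str] = field(default_factory=list)


def parse_mtp(spec):
    """hypothetical helper: split 'TAGS=[flags,]binary' into its parts"""
    tags, rest = spec.split("=", 1)
    out = MtpSpec(tags=tags.split(","), binary="")
    parts = rest.split(",")
    # consume flags from the left until something that is not a flag shows up
    while parts and FLAG_RE.fullmatch(parts[0].lower()):
        flag = parts.pop(0).lower()
        if flag[0] == "a":
            out.audio = flag[1:]
        elif flag == "f":
            out.force = True
        elif flag[0] == "t":
            out.timeout = int(flag[1:])
        else:
            out.exts.append(flag[1:])
    # everything left over is treated as the program path
    out.binary = ",".join(parts)
    return out


spec = parse_mtp("arch,built,ver,orig=an,eexe,edll,~/bin/exe.py")
print(spec.tags, spec.audio, spec.exts, spec.binary)
```

Because the flags are consumed left to right, `an,eexe,edll` sets the audio filter and collects two extensions before the path is reached. A program path that happens to look like a flag, such as a relative file named `f`, would be misparsed by this sketch. The filesystem check in `MParser` avoids that case.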

@@ -401,13 +421,31 @@ quick outline of the up2k protocol, see [uploading](#uploading) for the web-clie
 
 * `jinja2` (is built into the SFX)
 
-**optional,** enables music tags:
+
+## optional dependencies
+
+enable music tags:
 * either `mutagen` (fast, pure-python, skips a few tags, makes copyparty GPL? idk)
 * or `FFprobe` (20x slower, more accurate, possibly dangerous depending on your distro and users)
 
-**optional,** will eventually enable thumbnails:
+enable image thumbnails:
 * `Pillow` (requires py2.7 or py3.5+)
 
+enable video thumbnails:
+* `ffmpeg` and `ffprobe` somewhere in `$PATH`
+
+enable reading HEIF pictures:
+* `pyheif-pillow-opener` (requires Linux or a C compiler)
+
+enable reading AVIF pictures:
+* `pillow-avif-plugin`
+
+
+## install recommended deps
+```
+python -m pip install --user -U jinja2 mutagen Pillow
+```
+
 
 ## optional gpl stuff
 

@@ -487,7 +525,6 @@ roughly sorted by priority
 * start from a chunk index and just go
 * terminate client on bad data
 * `os.copy_file_range` for up2k cloning
-* support pillow-simd
 * single sha512 across all up2k chunks? maybe
 * figure out the deal with pixel3a not being connectable as hotspot
 * pixel3a having unpredictable 3sec latency in general :||||

96

bin/mtag/exe.py

Normal file


@@ -0,0 +1,96 @@
+#!/usr/bin/env python
+
+import sys
+import time
+import json
+import pefile
+
+"""
+retrieve exe info,
+example for multivalue providers
+"""
+
+
+def unk(v):
+    return "unk({:04x})".format(v)
+
+
+class PE2(pefile.PE):
+    def __init__(self, *a, **ka):
+        for k in [
+            # -- parse_data_directories:
+            "parse_import_directory",
+            "parse_export_directory",
+            # "parse_resources_directory",
+            "parse_debug_directory",
+            "parse_relocations_directory",
+            "parse_directory_tls",
+            "parse_directory_load_config",
+            "parse_delay_import_directory",
+            "parse_directory_bound_imports",
+            # -- full_load:
+            "parse_rich_header",
+        ]:
+            setattr(self, k, self.noop)
+
+        super(PE2, self).__init__(*a, **ka)
+
+    def noop(*a, **ka):
+        pass
+
+
+try:
+    pe = PE2(sys.argv[1], fast_load=False)
+except:
+    sys.exit(0)
+
+arch = pe.FILE_HEADER.Machine
+if arch == 0x14C:
+    arch = "x86"
+elif arch == 0x8664:
+    arch = "x64"
+else:
+    arch = unk(arch)
+
+try:
+    buildtime = time.gmtime(pe.FILE_HEADER.TimeDateStamp)
+    buildtime = time.strftime("%Y-%m-%d_%H:%M:%S", buildtime)
+except:
+    buildtime = "invalid"
+
+ui = pe.OPTIONAL_HEADER.Subsystem
+if ui == 2:
+    ui = "GUI"
+elif ui == 3:
+    ui = "cmdline"
+else:
+    ui = unk(ui)
+
+extra = {}
+if hasattr(pe, "FileInfo"):
+    for v1 in pe.FileInfo:
+        for v2 in v1:
+            if v2.name != "StringFileInfo":
+                continue
+
+            for v3 in v2.StringTable:
+                for k, v in v3.entries.items():
+                    v = v.decode("utf-8", "replace").strip()
+                    if not v:
+                        continue
+
+                    if k in [b"FileVersion", b"ProductVersion"]:
+                        extra["ver"] = v
+
+                    if k in [b"OriginalFilename", b"InternalName"]:
+                        extra["orig"] = v
+
+r = {
+    "arch": arch,
+    "built": buildtime,
+    "ui": ui,
+    "cksum": "{:08x}".format(pe.OPTIONAL_HEADER.CheckSum),
+}
+r.update(extra)
+
+print(json.dumps(r, indent=4))
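exe.py reduces two numeric PE header fields to labels. `FILE_HEADER.Machine` maps 0x14C to x86 and 0x8664 to x64. `OPTIONAL_HEADER.Subsystem` maps 2 to GUI and 3 to cmdline. Anything else falls back to `unk(...)`, and the link timestamp is formatted as UTC. Here is a pefile-free sketch of just that decoding, so it can be tried without a Windows binary at hand. `describe` is a hypothetical name. The `ver`, `orig` and `cksum` fields that exe.py also emits are left out. The returned dict has the same flat key/value shape as the JSON that exe.py prints.

```python
import json
import time


def unk(v):
    # same fallback format as exe.py: 4-digit lowercase hex
    return "unk({:04x})".format(v)


def describe(machine, subsystem, timestamp):
    """hypothetical pefile-free version of exe.py's header decoding"""
    arch = {0x14C: "x86", 0x8664: "x64"}.get(machine) or unk(machine)
    ui = {2: "GUI", 3: "cmdline"}.get(subsystem) or unk(subsystem)
    try:
        built = time.strftime("%Y-%m-%d_%H:%M:%S", time.gmtime(timestamp))
    except (OverflowError, OSError, ValueError):
        built = "invalid"  # exe.py uses the same placeholder for bad timestamps
    return {"arch": arch, "built": built, "ui": ui}


if __name__ == "__main__":
    print(json.dumps(describe(0x8664, 3, 0), indent=4))
```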

@@ -2,6 +2,7 @@
 from __future__ import print_function, unicode_literals
 
 import platform
+import time
 import sys
 import os
 

@@ -23,6 +24,7 @@ MACOS = platform.system() == "Darwin"
 
 class EnvParams(object):
     def __init__(self):
+        self.t0 = time.time()
         self.mod = os.path.dirname(os.path.realpath(__file__))
         if self.mod.endswith("__init__"):
             self.mod = os.path.dirname(self.mod)

@@ -249,6 +249,17 @@ def run_argparse(argv, formatter):
     ap.add_argument("--urlform", metavar="MODE", type=str, default="print,get", help="how to handle url-forms")
     ap.add_argument("--salt", type=str, default="hunter2", help="up2k file-hash salt")
 
+    ap2 = ap.add_argument_group('thumbnail options')
+    ap2.add_argument("--no-thumb", action="store_true", help="disable all thumbnails")
+    ap2.add_argument("--no-vthumb", action="store_true", help="disable video thumbnails")
+    ap2.add_argument("--th-size", metavar="WxH", default="320x256", help="thumbnail res")
+    ap2.add_argument("--th-no-crop", action="store_true", help="dynamic height; show full image")
+    ap2.add_argument("--th-no-jpg", action="store_true", help="disable jpg output")
+    ap2.add_argument("--th-no-webp", action="store_true", help="disable webp output")
+    ap2.add_argument("--th-poke", metavar="SEC", type=int, default=300, help="activity labeling cooldown")
+    ap2.add_argument("--th-clean", metavar="SEC", type=int, default=1800, help="cleanup interval")
+    ap2.add_argument("--th-maxage", metavar="SEC", type=int, default=604800, help="max folder age")
+
     ap2 = ap.add_argument_group('database options')
     ap2.add_argument("-e2d", action="store_true", help="enable up2k database")
     ap2.add_argument("-e2ds", action="store_true", help="enable up2k db-scanner, sets -e2d")

@@ -260,7 +271,7 @@ def run_argparse(argv, formatter):
     ap2.add_argument("--no-mtag-mt", action="store_true", help="disable tag-read parallelism")
     ap2.add_argument("-mtm", metavar="M=t,t,t", action="append", type=str, help="add/replace metadata mapping")
     ap2.add_argument("-mte", metavar="M,M,M", type=str, help="tags to index/display (comma-sep.)",
-        default="circle,album,.tn,artist,title,.bpm,key,.dur,.q")
+        default="circle,album,.tn,artist,title,.bpm,key,.dur,.q,.vq,.aq,ac,vc,res,.fps")
     ap2.add_argument("-mtp", metavar="M=[f,]bin", action="append", type=str, help="read tag M using bin")
     ap2.add_argument("--srch-time", metavar="SEC", type=int, default=30, help="search deadline")
 

@@ -277,7 +288,7 @@ def run_argparse(argv, formatter):
     ap2.add_argument("--no-sendfile", action="store_true", help="disable sendfile")
     ap2.add_argument("--no-scandir", action="store_true", help="disable scandir")
     ap2.add_argument("--ihead", metavar="HEADER", action='append', help="dump incoming header")
-    ap2.add_argument("--lf-url", metavar="RE", type=str, default=r"^/\.cpr/", help="dont log URLs matching")
+    ap2.add_argument("--lf-url", metavar="RE", type=str, default=r"^/\.cpr/|\?th=[wj]$", help="dont log URLs matching")
 
     return ap.parse_args(args=argv[1:])
     # fmt: on

@@ -1,8 +1,8 @@
 # coding: utf-8
 
-VERSION = (0, 10, 22)
-CODENAME = "zip it"
-BUILD_DT = (2021, 5, 18)
+VERSION = (0, 11, 0)
+CODENAME = "the grid"
+BUILD_DT = (2021, 5, 29)
 
 S_VERSION = ".".join(map(str, VERSION))
 S_BUILD_DT = "{0:04d}-{1:02d}-{2:02d}".format(*BUILD_DT)

@@ -475,8 +475,10 @@ class AuthSrv(object):
             # verify tags mentioned by -mt[mp] are used by -mte
             local_mtp = {}
             local_only_mtp = {}
-            for a in vol.flags.get("mtp", []) + vol.flags.get("mtm", []):
-                a = a.split("=")[0]
+            tags = vol.flags.get("mtp", []) + vol.flags.get("mtm", [])
+            tags = [x.split("=")[0] for x in tags]
+            tags = [y for x in tags for y in x.split(",")]
+            for a in tags:
                 local_mtp[a] = True
                 local = True
                 for b in self.args.mtp or []:

@@ -505,8 +507,10 @@ class AuthSrv(object):
                     self.log(m.format(vol.vpath, mtp), 1)
                     errors = True
 
-        for mtp in self.args.mtp or []:
-            mtp = mtp.split("=")[0]
+        tags = self.args.mtp or []
+        tags = [x.split("=")[0] for x in tags]
+        tags = [y for x in tags for y in x.split(",")]
+        for mtp in tags:
             if mtp not in all_mte:
                 m = 'metadata tag "{}" is defined by "-mtm" or "-mtp", but is not used by "-mte" (or by any "cmte" volume-flag)'
                 self.log(m.format(mtp), 1)
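The AuthSrv change above makes the `-mte` check split multi-tag providers into individual names. With the old code, a spec such as `arch,built,ver,orig=an,eexe,edll,~/bin/exe.py` yielded the single name `arch,built,ver,orig`, which can never appear in the `-mte` list. Here are the same two list comprehensions as a standalone function. `spec_tags` is a hypothetical name.

```python
def spec_tags(specs):
    """collect tag names from -mtp / -mtm style 'TAGS=...' strings"""
    names = [x.split("=")[0] for x in specs]  # keep the part before the first '='
    return [y for x in names for y in x.split(",")]  # one entry per tag


specs = ["arch,built,ver,orig=an,eexe,edll,~/bin/exe.py", "key=~/bin/key.py"]
print([x.split("=")[0] for x in specs])  # old behaviour: ['arch,built,ver,orig', 'key']
print(spec_tags(specs))  # ['arch', 'built', 'ver', 'orig', 'key']
```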

@@ -22,6 +22,10 @@ if not PY2:
     unicode = str
 
 
+NO_CACHE = {"Cache-Control": "no-cache"}
+NO_STORE = {"Cache-Control": "no-store; max-age=0"}
+
+
 class HttpCli(object):
     """
     Spawned by HttpConn to process one http transaction

@@ -36,6 +40,8 @@ class HttpCli(object):
         self.addr = conn.addr
         self.args = conn.args
         self.auth = conn.auth
+        self.ico = conn.ico
+        self.thumbcli = conn.thumbcli
         self.log_func = conn.log_func
         self.log_src = conn.log_src
         self.tls = hasattr(self.s, "cipher")

@@ -158,7 +164,7 @@ class HttpCli(object):
             uparam["b"] = False
             cookies["b"] = False
 
-        self.do_log = not self.conn.lf_url or not self.conn.lf_url.match(self.req)
+        self.do_log = not self.conn.lf_url or not self.conn.lf_url.search(self.req)
 
         try:
             if self.mode in ["GET", "HEAD"]:
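The `do_log` change swaps `re.match` for `re.search`, which matters for the new `--lf-url` default `^/\.cpr/|\?th=[wj]$`. `match` only tries the pattern at position 0, so the `\?th=` alternative, which sits at the end of a URL, could never be found. The `^` in the first alternative still pins `.cpr` to the start under `search`, because no multiline flag is set. A quick check with the standard `re` module, using made-up URLs:

```python
import re

# the new --lf-url default from the argparse hunk above
LF_URL = re.compile(r"^/\.cpr/|\?th=[wj]$")

for url in ["/.cpr/browser.js", "/pics/cat.jpg?th=j", "/pics/cat.jpg"]:
    # match() only tries position 0; search() scans the whole string
    print(url, bool(LF_URL.match(url)), bool(LF_URL.search(url)))
```

Only the thumbnail URL gives different answers for the two calls: `match` misses it and `search` finds it, so thumbnail requests are now kept out of the log.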

@@ -283,6 +289,9 @@ class HttpCli(object):
 
         # "embedded" resources
         if self.vpath.startswith(".cpr"):
+            if self.vpath.startswith(".cpr/ico/"):
+                return self.tx_ico(self.vpath.split("/")[-1], exact=True)
+
             static_path = os.path.join(E.mod, "web/", self.vpath[5:])
             return self.tx_file(static_path)
 

@@ -423,7 +432,7 @@ class HttpCli(object):
         fn = "put-{:.6f}-{}.bin".format(time.time(), addr)
         path = os.path.join(fdir, fn)
 
-        with open(path, "wb", 512 * 1024) as f:
+        with open(fsenc(path), "wb", 512 * 1024) as f:
             post_sz, _, sha_b64 = hashcopy(self.conn, reader, f)
 
         self.conn.hsrv.broker.put(

@@ -543,9 +552,9 @@ class HttpCli(object):
         if sub:
             try:
                 dst = os.path.join(vfs.realpath, rem)
-                os.makedirs(dst)
+                os.makedirs(fsenc(dst))
             except:
-                if not os.path.isdir(dst):
+                if not os.path.isdir(fsenc(dst)):
                     raise Pebkac(400, "some file got your folder name")
 
         x = self.conn.hsrv.broker.put(True, "up2k.handle_json", body)

@@ -633,7 +642,7 @@ class HttpCli(object):
 
         reader = read_socket(self.sr, remains)
 
-        with open(path, "rb+", 512 * 1024) as f:
+        with open(fsenc(path), "rb+", 512 * 1024) as f:
             f.seek(cstart[0])
             post_sz, _, sha_b64 = hashcopy(self.conn, reader, f)
 

@@ -676,7 +685,7 @@ class HttpCli(object):
             times = (int(time.time()), int(lastmod))
             self.log("no more chunks, setting times {}".format(times))
             try:
-                os.utime(path, times)
+                os.utime(fsenc(path), times)
             except:
                 self.log("failed to utime ({}, {})".format(path, times))
 

@@ -927,16 +936,16 @@ class HttpCli(object):
             mdir, mfile = os.path.split(fp)
             mfile2 = "{}.{:.3f}.md".format(mfile[:-3], srv_lastmod)
             try:
-                os.mkdir(os.path.join(mdir, ".hist"))
+                os.mkdir(fsenc(os.path.join(mdir, ".hist")))
             except:
                 pass
-            os.rename(fp, os.path.join(mdir, ".hist", mfile2))
+            os.rename(fsenc(fp), fsenc(os.path.join(mdir, ".hist", mfile2)))
 
         p_field, _, p_data = next(self.parser.gen)
         if p_field != "body":
             raise Pebkac(400, "expected body, got {}".format(p_field))
 
-        with open(fp, "wb", 512 * 1024) as f:
+        with open(fsenc(fp), "wb", 512 * 1024) as f:
             sz, sha512, _ = hashcopy(self.conn, p_data, f)
 
         new_lastmod = os.stat(fsenc(fp)).st_mtime

@@ -952,14 +961,11 @@ class HttpCli(object):
         return True
 
     def _chk_lastmod(self, file_ts):
-        date_fmt = "%a, %d %b %Y %H:%M:%S GMT"
-        file_dt = datetime.utcfromtimestamp(file_ts)
-        file_lastmod = file_dt.strftime(date_fmt)
-
+        file_lastmod = http_ts(file_ts)
         cli_lastmod = self.headers.get("if-modified-since")
         if cli_lastmod:
             try:
-                cli_dt = time.strptime(cli_lastmod, date_fmt)
+                cli_dt = time.strptime(cli_lastmod, HTTP_TS_FMT)
                 cli_ts = calendar.timegm(cli_dt)
                 return file_lastmod, int(file_ts) > int(cli_ts)
             except Exception as ex:
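`_chk_lastmod` now relies on `http_ts()` and `HTTP_TS_FMT`, which are defined outside the hunks shown here. Below is a minimal stand-in, assuming both wrap the same IMF-fixdate format that the removed `date_fmt` line spelled out. It is a hypothetical reimplementation for illustration, not the copyparty code. It shows the If-Modified-Since comparison the method performs: the body is sent only if the file is newer than the client's copy.

```python
import calendar
import time

# assumption: same format string as the removed date_fmt line
HTTP_TS_FMT = "%a, %d %b %Y %H:%M:%S GMT"


def http_ts(ts):
    """format a unix timestamp as an HTTP date in UTC (%a/%b assume the C locale)"""
    return time.strftime(HTTP_TS_FMT, time.gmtime(ts))


def chk_lastmod(file_ts, if_modified_since=None):
    """return (Last-Modified value, True if the body should be sent)"""
    file_lastmod = http_ts(file_ts)
    if if_modified_since:
        try:
            cli_ts = calendar.timegm(time.strptime(if_modified_since, HTTP_TS_FMT))
            return file_lastmod, int(file_ts) > int(cli_ts)
        except ValueError:
            pass  # unparseable header: fall through and send the file
    return file_lastmod, True
```

Comparing whole seconds (`int(...)`) matters because HTTP dates carry no sub-second part. Without the truncation, a file with a fractional mtime would always look newer than the client's copy.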

@@ -1106,13 +1112,13 @@ class HttpCli(object):
         # send reply
 
         if not is_compressed:
-            self.out_headers["Cache-Control"] = "no-cache"
+            self.out_headers.update(NO_CACHE)
 
         self.out_headers["Accept-Ranges"] = "bytes"
         self.send_headers(
             length=upper - lower,
             status=status,
-            mime=guess_mime(req_path)[0] or "application/octet-stream",
+            mime=guess_mime(req_path),
         )
 
         logmsg += unicode(status) + logtail

@@ -1202,6 +1208,34 @@ class HttpCli(object):
         self.log("{}, {}".format(logmsg, spd))
         return True
 
+    def tx_ico(self, ext, exact=False):
+        if ext.endswith("/"):
+            ext = "folder"
+            exact = True
+
+        bad = re.compile(r"[](){}/[]|^[0-9_-]*$")
+        n = ext.split(".")[::-1]
+        if not exact:
+            n = n[:-1]
+
+        ext = ""
+        for v in n:
+            if len(v) > 7 or bad.search(v):
+                break
+
+            ext = "{}.{}".format(v, ext)
+
+        ext = ext.rstrip(".") or "unk"
+        if len(ext) > 11:
+            ext = "⋯" + ext[-9:]
+
+        mime, ico = self.ico.get(ext, not exact)
+
+        dt = datetime.utcfromtimestamp(E.t0)
+        lm = dt.strftime("%a, %d %b %Y %H:%M:%S GMT")
+        self.reply(ico, mime=mime, headers={"Last-Modified": lm})
+        return True
+
     def tx_md(self, fs_path):
         logmsg = "{:4} {} ".format("", self.req)
 
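`tx_ico` turns a filename into the short label drawn on the placeholder icon. It walks the dot-separated suffixes from the right and stops at the first part that is longer than 7 characters, contains a bracket, brace, parenthesis or slash, or consists only of digits, `_` and `-`. Labels over 11 characters are then shortened. Here are the same steps as a standalone function for experimenting, with the HTTP reply left out. `ico_label` is a hypothetical name.

```python
import re

# same pattern as tx_ico: brackets/braces/parens/slash, or only digits, '_' and '-'
BAD = re.compile(r"[](){}/[]|^[0-9_-]*$")


def ico_label(name, exact=False):
    if name.endswith("/"):
        name = "folder"
        exact = True

    parts = name.split(".")[::-1]  # rightmost suffix first
    if not exact:
        parts = parts[:-1]  # drop the basename itself

    ext = ""
    for v in parts:
        if len(v) > 7 or BAD.search(v):
            break
        ext = "{}.{}".format(v, ext)

    ext = ext.rstrip(".") or "unk"
    if len(ext) > 11:
        ext = "\u22ef" + ext[-9:]  # midline ellipsis + last 9 characters
    return ext


for n in ["song.tar.gz", "photo.JPG", "backup.2021", "dir.v2/file", "music/"]:
    print(n, "->", ico_label(n))
```

`tx_tree` passes the whole relative path (`rem`), so the slash in the pattern keeps a dot in a folder name, as in `dir.v2/file`, from leaking into the label.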

@@ -1224,7 +1258,7 @@ class HttpCli(object):
         file_ts = max(ts_md, ts_html)
         file_lastmod, do_send = self._chk_lastmod(file_ts)
         self.out_headers["Last-Modified"] = file_lastmod
-        self.out_headers["Cache-Control"] = "no-cache"
+        self.out_headers.update(NO_CACHE)
         status = 200 if do_send else 304
 
         boundary = "\roll\tide"

@@ -1236,7 +1270,7 @@ class HttpCli(object):
             "md_chk_rate": self.args.mcr,
             "md": boundary,
         }
-        html = template.render(**targs).encode("utf-8")
+        html = template.render(**targs).encode("utf-8", "replace")
         html = html.split(boundary.encode("utf-8"))
         if len(html) != 2:
             raise Exception("boundary appears in " + html_path)

@@ -1271,7 +1305,7 @@ class HttpCli(object):
         rvol = [x + "/" if x else x for x in self.rvol]
         wvol = [x + "/" if x else x for x in self.wvol]
         html = self.j2("splash", this=self, rvol=rvol, wvol=wvol, url_suf=suf)
-        self.reply(html.encode("utf-8"))
+        self.reply(html.encode("utf-8"), headers=NO_STORE)
         return True
 
     def tx_tree(self):

@@ -1346,10 +1380,31 @@ class HttpCli(object):
         )
         abspath = vn.canonical(rem)
 
-        if not os.path.exists(fsenc(abspath)):
-            # print(abspath)
+        try:
+            st = os.stat(fsenc(abspath))
+        except:
             raise Pebkac(404)
 
+        if self.readable and not stat.S_ISDIR(st.st_mode):
+            if rem.startswith(".hist/up2k."):
+                raise Pebkac(403)
+
+            th_fmt = self.uparam.get("th")
+            if th_fmt is not None:
+                thp = None
+                if self.thumbcli:
+                    thp = self.thumbcli.get(vn.realpath, rem, int(st.st_mtime), th_fmt)
+
+                if thp:
+                    return self.tx_file(thp)
+
+                return self.tx_ico(rem)
+
+            if abspath.endswith(".md") and "raw" not in self.uparam:
+                return self.tx_md(abspath)
+
+            return self.tx_file(abspath)
+
         srv_info = []
 
         try:

@@ -1368,7 +1423,7 @@ class HttpCli(object):
                 )
                 srv_info.append(humansize(bfree.value) + " free")
             else:
-                sv = os.statvfs(abspath)
+                sv = os.statvfs(fsenc(abspath))
                 free = humansize(sv.f_frsize * sv.f_bfree, True)
                 total = humansize(sv.f_frsize * sv.f_blocks, True)
 

@@ -1428,25 +1483,20 @@ class HttpCli(object):
         if not self.readable:
             if is_ls:
                 ret = json.dumps(ls_ret)
-                self.reply(ret.encode("utf-8", "replace"), mime="application/json")
+                self.reply(
+                    ret.encode("utf-8", "replace"),
+                    mime="application/json",
+                    headers=NO_STORE,
+                )
                 return True
 
-            if not os.path.isdir(fsenc(abspath)):
+            if not stat.S_ISDIR(st.st_mode):
                 raise Pebkac(404)
 
             html = self.j2(tpl, **j2a)
-            self.reply(html.encode("utf-8", "replace"))
+            self.reply(html.encode("utf-8", "replace"), headers=NO_STORE)
             return True
 
-        if not os.path.isdir(fsenc(abspath)):
-            if abspath.endswith(".md") and "raw" not in self.uparam:
-                return self.tx_md(abspath)
-
-            if rem.startswith(".hist/up2k."):
-                raise Pebkac(403)
-
-            return self.tx_file(abspath)
-
         for k in ["zip", "tar"]:
             v = self.uparam.get(k)
             if v is not None:

@@ -1582,7 +1632,11 @@ class HttpCli(object):
             ls_ret["files"] = files
             ls_ret["taglist"] = taglist
             ret = json.dumps(ls_ret)
-            self.reply(ret.encode("utf-8", "replace"), mime="application/json")
+            self.reply(
+                ret.encode("utf-8", "replace"),
+                mime="application/json",
+                headers=NO_STORE,
+            )
             return True
 
         j2a["files"] = dirs + files

@@ -1592,5 +1646,5 @@ class HttpCli(object):
         j2a["tag_order"] = json.dumps(vn.flags["mte"].split(","))
 
         html = self.j2(tpl, **j2a)
-        self.reply(html.encode("utf-8", "replace"))
+        self.reply(html.encode("utf-8", "replace"), headers=NO_STORE)
         return True

@@ -17,6 +17,9 @@ from .__init__ import E
 from .util import Unrecv
 from .httpcli import HttpCli
 from .u2idx import U2idx
+from .th_cli import ThumbCli
+from .th_srv import HAVE_PIL
+from .ico import Ico
 
 
 class HttpConn(object):

@@ -34,6 +37,10 @@ class HttpConn(object):
         self.auth = hsrv.auth
         self.cert_path = hsrv.cert_path
 
+        enth = HAVE_PIL and not self.args.no_thumb
+        self.thumbcli = ThumbCli(hsrv.broker) if enth else None
+        self.ico = Ico(self.args)
+
         self.t0 = time.time()
         self.nbyte = 0
         self.workload = 0

39

copyparty/ico.py

Normal file


@@ -0,0 +1,39 @@
+import hashlib
+import colorsys
+
+from .__init__ import PY2
+
+
+class Ico(object):
+    def __init__(self, args):
+        self.args = args
+
+    def get(self, ext, as_thumb):
+        """placeholder to make thumbnails not break"""
+
+        h = hashlib.md5(ext.encode("utf-8")).digest()[:2]
+        if PY2:
+            h = [ord(x) for x in h]
+
+        c1 = colorsys.hsv_to_rgb(h[0] / 256.0, 1, 0.3)
+        c2 = colorsys.hsv_to_rgb(h[0] / 256.0, 1, 1)
+        c = list(c1) + list(c2)
+        c = [int(x * 255) for x in c]
+        c = "".join(["{:02x}".format(x) for x in c])
+
+        h = 30
+        if not self.args.th_no_crop and as_thumb:
+            w, h = self.args.th_size.split("x")
+            h = int(100 / (float(w) / float(h)))
+
+        svg = """\
+<?xml version="1.0" encoding="UTF-8"?>
+<svg version="1.1" viewBox="0 0 100 {}" xmlns="http://www.w3.org/2000/svg"><g>
+<rect width="100%" height="100%" fill="#{}" />
+<text x="50%" y="50%" dominant-baseline="middle" text-anchor="middle" xml:space="preserve"
+fill="#{}" font-family="monospace" font-size="14px" style="letter-spacing:.5px">{}</text>
+</g></svg>
+"""
+        svg = svg.format(h, c[:6], c[6:], ext).encode("utf-8")
+
+        return ["image/svg+xml", svg]
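`Ico.get` derives the placeholder colours from the first byte of the label's MD5 digest, used as an HSV hue: a dark shade for the background and a bright one for the text. It also sizes the SVG so its aspect ratio follows `--th-size` unless `--th-no-crop` is set. With the default `320x256`, the height works out to 100 / (320 / 256) = 80, so the viewBox is 100×80. Here is a standalone sketch of those two calculations. `ico_colors` and `ico_height` are hypothetical names.

```python
import colorsys
import hashlib


def _hex(rgb):
    # (0..1, 0..1, 0..1) -> "rrggbb", truncating like Ico.get does
    return "".join("{:02x}".format(int(x * 255)) for x in rgb)


def ico_colors(ext):
    """return (background, text) colours as hex strings"""
    hue = hashlib.md5(ext.encode("utf-8")).digest()[0] / 256.0  # first byte as hue
    return _hex(colorsys.hsv_to_rgb(hue, 1, 0.3)), _hex(colorsys.hsv_to_rgb(hue, 1, 1))


def ico_height(th_size="320x256", no_crop=False, as_thumb=True):
    """viewBox height for an icon that is 100 units wide"""
    if no_crop or not as_thumb:
        return 30  # Ico.get's fixed default height
    w, h = th_size.split("x")
    return int(100 / (float(w) / float(h)))


print(ico_colors("jpg"), ico_height())
```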

@@ -4,6 +4,7 @@ from __future__ import print_function, unicode_literals
 import re
 import os
 import sys
+import json
 import shutil
 import subprocess as sp
 

@@ -14,6 +15,204 @@ if not PY2:

|

||||

unicode = str

|

||||

|

||||

|

||||

def have_ff(cmd):

|

||||

if PY2:

|

||||

cmd = (cmd + " -version").encode("ascii").split(b" ")

|

||||

try:

|

||||

sp.Popen(cmd, stdout=sp.PIPE, stderr=sp.PIPE).communicate()

|

||||

return True

|

||||

except:

|

||||

return False

|

||||

else:

|

||||

return bool(shutil.which(cmd))

|

||||

|

||||

|

||||

HAVE_FFMPEG = have_ff("ffmpeg")

|

||||

HAVE_FFPROBE = have_ff("ffprobe")

|

||||

|

||||

|

||||

class MParser(object):

|

||||

def __init__(self, cmdline):

|

||||

self.tag, args = cmdline.split("=", 1)

|

||||

self.tags = self.tag.split(",")

|

||||

|

||||

self.timeout = 30

|

||||

self.force = False

|

||||

self.audio = "y"

|

||||

self.ext = []

|

||||

|

||||

while True:

|

||||

try:

|

||||

bp = os.path.expanduser(args)

|

||||

if os.path.exists(bp):

|

||||

self.bin = bp

|

||||

return

|

||||

except:

|

||||

pass

|

||||

|

||||

arg, args = args.split(",", 1)

|

||||

arg = arg.lower()

|

||||

|

||||

if arg.startswith("a"):

|

||||

self.audio = arg[1:] # [r]equire [n]ot [d]ontcare

|

||||

continue

|

||||

|

||||

if arg == "f":

|

||||

self.force = True

|

||||

continue

|

||||

|

||||

if arg.startswith("t"):

|

||||

self.timeout = int(arg[1:])

|

||||

continue

|

||||

|

||||

if arg.startswith("e"):

|

||||

self.ext.append(arg[1:])

|

||||

continue

|

||||

|

||||

raise Exception()

|

||||

|

||||

|

||||

def ffprobe(abspath):

|

||||

cmd = [

|

||||

b"ffprobe",

|

||||

b"-hide_banner",

        b"-show_streams",
        b"-show_format",
        b"--",
        fsenc(abspath),
    ]
    p = sp.Popen(cmd, stdout=sp.PIPE, stderr=sp.PIPE)
    r = p.communicate()
    txt = r[0].decode("utf-8", "replace")
    return parse_ffprobe(txt)


def parse_ffprobe(txt):
    """ffprobe -show_format -show_streams"""
    streams = []
    fmt = {}
    g = None
    for ln in [x.rstrip("\r") for x in txt.split("\n")]:
        try:
            k, v = ln.split("=", 1)
            g[k] = v
            continue
        except:
            pass

        if ln == "[STREAM]":
            g = {}
            streams.append(g)

        if ln == "[FORMAT]":
            g = {"codec_type": "format"}  # heh
            fmt = g

    streams = [fmt] + streams
    ret = {}  # processed
    md = {}  # raw tags

    have = {}
    for strm in streams:
        typ = strm.get("codec_type")
        if typ in have:
            continue

        have[typ] = True
        kvm = []

        if typ == "audio":
            kvm = [
                ["codec_name", "ac"],
                ["channel_layout", "chs"],
                ["sample_rate", ".hz"],
                ["bit_rate", ".aq"],
                ["duration", ".dur"],
            ]

        if typ == "video":
            if strm.get("DISPOSITION:attached_pic") == "1" or fmt.get(
                "format_name"
            ) in ["mp3", "ogg", "flac"]:
                continue

            kvm = [
                ["codec_name", "vc"],
                ["pix_fmt", "pixfmt"],
                ["r_frame_rate", ".fps"],
                ["bit_rate", ".vq"],
                ["width", ".resw"],
                ["height", ".resh"],
                ["duration", ".dur"],
            ]

        if typ == "format":
            kvm = [["duration", ".dur"], ["bit_rate", ".q"]]

        for sk, rk in kvm:
            v = strm.get(sk)
            if v is None:
                continue

            if rk.startswith("."):
                try:
                    v = float(v)
                    v2 = ret.get(rk)
                    if v2 is None or v > v2:
                        ret[rk] = v
                except:
                    # sqlite doesnt care but the code below does
                    if v not in ["N/A"]:
                        ret[rk] = v
            else:
                ret[rk] = v

    if ret.get("vc") == "ansi":  # shellscript
        return {}, {}

    for strm in streams:
        for k, v in strm.items():
            if not k.startswith("TAG:"):
                continue

            k = k[4:].strip()
            v = v.strip()
            if k and v:
                md[k] = [v]

    for k in [".q", ".vq", ".aq"]:
        if k in ret:
            ret[k] /= 1000  # bit_rate=320000

    for k in [".q", ".vq", ".aq", ".resw", ".resh"]:
        if k in ret:
            ret[k] = int(ret[k])

    if ".fps" in ret:
        fps = ret[".fps"]
        if "/" in fps:
            fa, fb = fps.split("/")
            fps = int(fa) * 1.0 / int(fb)

        if fps < 1000 and fmt.get("format_name") not in ["image2", "png_pipe"]:
            ret[".fps"] = round(fps, 3)
        else:
            del ret[".fps"]

    if ".dur" in ret:
        if ret[".dur"] < 0.1:
            del ret[".dur"]
            if ".q" in ret:
                del ret[".q"]

    if ".resw" in ret and ".resh" in ret:
        ret["res"] = "{}x{}".format(ret[".resw"], ret[".resh"])

    ret = {k: [0, v] for k, v in ret.items()}

    return ret, md
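For orientation, here is a minimal standalone sketch of the first step of `parse_ffprobe`. It groups the flat `key=value` output of `ffprobe -show_streams -show_format` into one dict per `[STREAM]` / `[FORMAT]` section. The helper name `group_sections` and the sample text are illustrative assumptions. Unlike the diff, the sketch also treats closing `[/...]` lines as the end of a section.

```python
def group_sections(txt):
    """Split flat `ffprobe -show_streams -show_format` output into dicts.

    Hypothetical helper mirroring the grouping loop of parse_ffprobe;
    not copied from copyparty.
    """
    streams = []
    fmt = {}
    cur = None  # dict currently receiving key=value lines
    for ln in txt.replace("\r", "").split("\n"):
        if ln == "[STREAM]":
            cur = {}
            streams.append(cur)
        elif ln == "[FORMAT]":
            cur = {"codec_type": "format"}
            fmt = cur
        elif ln.startswith("[/"):
            cur = None  # section closed; ignore lines until the next opener
        elif cur is not None and "=" in ln:
            k, v = ln.split("=", 1)
            cur[k] = v
    # the format section goes first, like in parse_ffprobe
    return [fmt] + streams


sample = """[STREAM]
codec_type=audio
codec_name=mp3
sample_rate=44100
TAG:title=demo
[/STREAM]
[FORMAT]
format_name=mp3
duration=12.500000
bit_rate=320000
[/FORMAT]
"""

secs = group_sections(sample)
for s in secs:
    print(s["codec_type"], s.get("duration") or s.get("codec_name"))
```

`parse_ffprobe` then converts these raw strings. Fields whose target key starts with `.` are coerced with `float()`, and the `bit_rate` fields are divided by 1000. Keys prefixed with `TAG:` become the raw tag dict.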

class MTag(object):
    def __init__(self, log_func, args):
        self.log_func = log_func
@@ -35,15 +234,7 @@ class MTag(object):
             self.get = self.get_ffprobe
             self.prefer_mt = True
-            # about 20x slower
-            if PY2:
-                cmd = [b"ffprobe", b"-version"]
-                try:
-                    sp.Popen(cmd, stdout=sp.PIPE, stderr=sp.PIPE)
-                except:
-                    self.usable = False
-            else:
-                if not shutil.which("ffprobe"):
-                    self.usable = False
+            self.usable = HAVE_FFPROBE
 
             if self.usable and WINDOWS and sys.version_info < (3, 8):
                 self.usable = False
@@ -52,8 +243,10 @@ class MTag(object):
                 self.log(msg, c=1)
 
         if not self.usable:
-            msg = "need mutagen{} to read media tags so please run this:\n {} -m pip install --user mutagen"
-            self.log(msg.format(or_ffprobe, os.path.basename(sys.executable)), c=1)
+            msg = "need mutagen{} to read media tags so please run this:\n{}{} -m pip install --user mutagen\n"
+            self.log(
+                msg.format(or_ffprobe, " " * 37, os.path.basename(sys.executable)), c=1
+            )
             return
 
         # https://picard-docs.musicbrainz.org/downloads/MusicBrainz_Picard_Tag_Map.html
@@ -201,7 +394,7 @@ class MTag(object):
         import mutagen
 
         try:
-            md = mutagen.File(abspath, easy=True)
+            md = mutagen.File(fsenc(abspath), easy=True)
             x = md.info.length
         except Exception as ex:
             return {}
@@ -212,7 +405,7 @@ class MTag(object):
         try:
             q = int(md.info.bitrate / 1024)
         except:
-            q = int((os.path.getsize(abspath) / dur) / 128)
+            q = int((os.path.getsize(fsenc(abspath)) / dur) / 128)
 
         ret[".dur"] = [0, dur]
         ret[".q"] = [0, q]
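When mutagen does not report a bitrate, the fallback in `get_mutagen` estimates it from the file size. Since `size / dur` is bytes per second, dividing by 128 is the same as multiplying by 8 bits per byte and dividing by 1024. Both branches therefore yield kbit/s with 1 kbit = 1024 bits. The sketch below shows that arithmetic; the function name is hypothetical.

```python
def estimate_kbps(size_bytes, duration_sec):
    """Average bitrate in kbit/s (1 kbit = 1024 bits) from size and duration.

    bytes/s / 128 == bytes/s * 8 / 1024, matching the `bitrate / 1024`
    used when mutagen reports a bitrate directly.
    """
    bytes_per_sec = size_bytes / duration_sec
    return int(bytes_per_sec / 128)


# a 4:00 track stored in 9,600,000 bytes
print(estimate_kbps(9600000, 240))  # -> 312
```

The estimate includes tag and container overhead, so it reads high for files with large embedded artwork. Note that `parse_ffprobe` divides `bit_rate` by 1000 instead of 1024.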

@@ -222,101 +415,7 @@ class MTag(object):
         return self.normalize_tags(ret, md)
 
     def get_ffprobe(self, abspath):
-        cmd = [b"ffprobe", b"-hide_banner", b"--", fsenc(abspath)]
-        p = sp.Popen(cmd, stdout=sp.PIPE, stderr=sp.PIPE)
-        r = p.communicate()
-        txt = r[1].decode("utf-8", "replace")
-        txt = [x.rstrip("\r") for x in txt.split("\n")]
-
-        """
-        note:
-          tags which contain newline will be truncated on first \n,
-          ffprobe emits \n and spacepads the : to align visually
-        note:
-          the Stream ln always mentions Audio: if audio
-          the Stream ln usually has kb/s, is more accurate
-          the Duration ln always has kb/s
-          the Metadata: after Chapter may contain BPM info,
-            title : Tempo: 126.0
-
-        Input #0, wav,
-          Metadata:
-            date : <OK>
-          Duration:
-            Chapter #
-            Metadata:
-              title : <NG>
-
-        Input #0, mp3,
-          Metadata:
-            album : <OK>
-          Duration:
-            Stream #0:0: Audio:
-            Stream #0:1: Video:
-            Metadata:
-              comment : <NG>
-        """
-
-        ptn_md_beg = re.compile("^( +)Metadata:$")
-        ptn_md_kv = re.compile("^( +)([^:]+) *: (.*)")
-        ptn_dur = re.compile("^ *Duration: ([^ ]+)(, |$)")
-        ptn_br1 = re.compile("^ *Duration: .*, bitrate: ([0-9]+) kb/s(, |$)")
-        ptn_br2 = re.compile("^ *Stream.*: Audio:.* ([0-9]+) kb/s(, |$)")
-        ptn_audio = re.compile("^ *Stream .*: Audio: ")
-        ptn_au_parent = re.compile("^ *(Input #|Stream .*: Audio: )")
-
-        ret = {}
-        md = {}
-        in_md = False
-        is_audio = False
-        au_parent = False
-        for ln in txt:
-            m = ptn_md_kv.match(ln)
-            if m and in_md and len(m.group(1)) == in_md:
-                _, k, v = [x.strip() for x in m.groups()]
-                if k != "" and v != "":
-                    md[k] = [v]
-                continue
-            else:
-                in_md = False
-
-            m = ptn_md_beg.match(ln)
-            if m and au_parent:
-                in_md = len(m.group(1)) + 2
-                continue
-
-            au_parent = bool(ptn_au_parent.search(ln))
-
-            if ptn_audio.search(ln):
-                is_audio = True
-
-            m = ptn_dur.search(ln)
-            if m:
-                sec = 0
-                tstr = m.group(1)
-                if tstr.lower() != "n/a":
-                    try:
-                        tf = tstr.split(",")[0].split(".")[0].split(":")
-                        for f in tf:
-                            sec *= 60
-                            sec += int(f)
-                    except:
-                        self.log("invalid timestr from ffprobe: [{}]".format(tstr), c=3)
-
-                ret[".dur"] = sec
-                m = ptn_br1.search(ln)
-                if m:
-                    ret[".q"] = m.group(1)
-
-            m = ptn_br2.search(ln)
-            if m:
-                ret[".q"] = m.group(1)
-
-        if not is_audio:
-            return {}
-
-        ret = {k: [0, v] for k, v in ret.items()}
-
+        ret, md = ffprobe(abspath)
         return self.normalize_tags(ret, md)
 
     def get_bin(self, parsers, abspath):
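The removed `get_ffprobe` parsed the human-readable `Duration: HH:MM:SS.ff` line. The replacement reads the numeric `duration=` field through `parse_ffprobe` instead. The sketch below restates the removed conversion as a standalone function; the name `hms_to_seconds` is hypothetical.

```python
def hms_to_seconds(tstr):
    """Convert an ffprobe-style "HH:MM:SS.ff" duration to whole seconds.

    Mirrors the removed loop: drop anything after a comma, drop the
    fractional part, then fold the colon-separated fields base-60.
    Returns None for "N/A" (the removed code stored 0 in that case).
    """
    if tstr.lower() == "n/a":
        return None
    fields = tstr.split(",")[0].split(".")[0].split(":")
    sec = 0
    for f in fields:
        sec = sec * 60 + int(f)
    return sec


print(hms_to_seconds("01:02:03.45"))  # -> 3723
```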

@@ -327,10 +426,10 @@ class MTag(object):
         env["PYTHONPATH"] = pypath
 
         ret = {}
-        for tagname, (binpath, timeout) in parsers.items():
+        for tagname, mp in parsers.items():
             try:
-                cmd = [sys.executable, binpath, abspath]
-                args = {"env": env, "timeout": timeout}
+                cmd = [sys.executable, mp.bin, abspath]
+                args = {"env": env, "timeout": mp.timeout}
 
                 if WINDOWS:
                     args["creationflags"] = 0x4000
@@ -339,8 +438,16 @@ class MTag(object):
 
                 cmd = [fsenc(x) for x in cmd]
                 v = sp.check_output(cmd, **args).strip()
-                if v:
+                if not v:
+                    continue
+
+                if "," not in tagname:
                     ret[tagname] = v.decode("utf-8")
+                else:
+                    v = json.loads(v)
+                    for tag in tagname.split(","):
+                        if tag and tag in v:
+                            ret[tag] = v[tag]
             except:
                 pass
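After this change, a parser entry whose tag name contains commas is expected to print a JSON object. Only the listed keys are copied into the result. The sketch below restates that rule as a standalone helper with the subprocess call left out; the name `collect_tags` is hypothetical.

```python
import json


def collect_tags(tagname, stdout_bytes):
    """Map one external parser's output onto tag names, like get_bin.

    A single name ("bpm") takes the whole decoded output as its value.
    A comma-separated list ("key,bpm") expects a JSON object and copies
    only the listed keys that are present; empty names are skipped.
    """
    out = {}
    v = stdout_bytes.strip()
    if not v:
        return out  # nothing printed: no tags from this parser
    if "," not in tagname:
        out[tagname] = v.decode("utf-8")
    else:
        obj = json.loads(v)
        for tag in tagname.split(","):
            if tag and tag in obj:
                out[tag] = obj[tag]
    return out


print(collect_tags("bpm", b"128\n"))
print(collect_tags("key,bpm,", b'{"key": "Am", "bpm": 128, "extra": 1}'))
```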

@@ -2,6 +2,7 @@
 from __future__ import print_function, unicode_literals
 
+import re
 import os
 import sys
 import time
 import threading
@@ -9,9 +10,11 @@ from datetime import datetime, timedelta
 import calendar
 
 from .__init__ import PY2, WINDOWS, MACOS, VT100
-from .util import mp
 from .authsrv import AuthSrv
 from .tcpsrv import TcpSrv
 from .up2k import Up2k
+from .util import mp
+from .th_srv import ThumbSrv, HAVE_PIL, HAVE_WEBP
 
 
 class SvcHub(object):
@@ -34,9 +37,27 @@ class SvcHub(object):
 
         self.log = self._log_disabled if args.q else self._log_enabled
 
+        # jank goes here
+        auth = AuthSrv(self.args, self.log, False)
+
         # initiate all services to manage
         self.tcpsrv = TcpSrv(self)
-        self.up2k = Up2k(self)
+        self.up2k = Up2k(self, auth.vfs.all_vols)
+
+        self.thumbsrv = None
+        if not args.no_thumb:
+            if HAVE_PIL:
+                if not HAVE_WEBP:
+                    args.th_no_webp = True
+                    msg = "setting --th-no-webp because either libwebp is not available or your Pillow is too old"
+                    self.log("thumb", msg, c=3)
+
+                self.thumbsrv = ThumbSrv(self, auth.vfs.all_vols)
+            else:
+                msg = "need Pillow to create thumbnails; for example:\n{}{} -m pip install --user Pillow\n"
+                self.log(
+                    "thumb", msg.format(" " * 37, os.path.basename(sys.executable)), c=3
+                )
 
         # decide which worker impl to use
         if self.check_mp_enable():
@@ -63,6 +84,17 @@ class SvcHub(object):
 
         self.tcpsrv.shutdown()
         self.broker.shutdown()
+        if self.thumbsrv:
+            self.thumbsrv.shutdown()
+
+            for n in range(200):  # 10s
+                time.sleep(0.05)
+                if self.thumbsrv.stopped():
+                    break
+
+                if n == 3:
+                    print("waiting for thumbsrv...")
+
         print("nailed it")
 
     def _log_disabled(self, src, msg, c=0):
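The new shutdown path polls `self.thumbsrv.stopped()` 200 times at 0.05 s intervals, for about 10 s in total. It prints a notice once the wait gets long. The sketch below is a generic version of the same bounded poll. It uses a monotonic deadline in place of an iteration count, which is an assumption of the sketch; `wait_until` is a hypothetical name.

```python
import threading
import time


def wait_until(pred, timeout=10.0, interval=0.05, on_slow=None, slow_after=3):
    """Poll pred() until it returns True or `timeout` seconds pass.

    `on_slow` is called once after `slow_after` unsuccessful polls, like
    the "waiting for thumbsrv..." message. Returns whether pred() became
    true before the deadline.
    """
    deadline = time.monotonic() + timeout
    polls = 0
    while time.monotonic() < deadline:
        time.sleep(interval)
        if pred():
            return True
        polls += 1
        if polls == slow_after and on_slow:
            on_slow()
    return bool(pred())  # one last check at the deadline


done = threading.Event()
threading.Timer(0.2, done.set).start()  # something finishes in ~0.2 s
print(wait_until(done.is_set, timeout=2.0))
```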

49

copyparty/th_cli.py

Normal file

@@ -0,0 +1,49 @@

import os
import time

from .util import Cooldown
from .th_srv import thumb_path, THUMBABLE, FMT_FF


class ThumbCli(object):
    def __init__(self, broker):
        self.broker = broker
        self.args = broker.args

        # cache on both sides for less broker spam
        self.cooldown = Cooldown(self.args.th_poke)

    def get(self, ptop, rem, mtime, fmt):
        ext = rem.rsplit(".")[-1].lower()
        if ext not in THUMBABLE:
            return None

        if self.args.no_vthumb and ext in FMT_FF:
            return None

        if fmt == "j" and self.args.th_no_jpg:
            fmt = "w"

        if fmt == "w" and self.args.th_no_webp:
            fmt = "j"

        tpath = thumb_path(ptop, rem, mtime, fmt)
        ret = None
        try:
            st = os.stat(tpath)
            if st.st_size:
                ret = tpath
            else:
                return None
        except:
            pass

        if ret:
            tdir = os.path.dirname(tpath)
            if self.cooldown.poke(tdir):
                self.broker.put(False, "thumbsrv.poke", tdir)

            return ret

        x = self.broker.put(True, "thumbsrv.get", ptop, rem, mtime, fmt)
        return x.get()
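`ThumbCli` only forwards a `thumbsrv.poke` to the broker when `self.cooldown.poke(tdir)` returns true. `Cooldown` lives in `.util` and is not part of this diff. The sketch below assumes it acts as a per-key rate limiter that accepts one poke per key per interval (`th_poke` seconds here). It is a hypothetical stand-in, not copyparty's implementation.

```python
import threading
import time


class Cooldown(object):
    """Per-key rate limiter: poke(key) is True at most once per `maxage` seconds.

    Hypothetical stand-in for copyparty's util.Cooldown, whose real code
    is not shown in this diff.
    """

    def __init__(self, maxage):
        self.maxage = maxage
        self.mutex = threading.Lock()
        self.hist = {}  # key -> time of the last accepted poke

    def poke(self, key, now=None):
        # `now` can be injected for testing; defaults to a monotonic clock
        if now is None:
            now = time.monotonic()
        with self.mutex:
            prev = self.hist.get(key)
            if prev is not None and now - prev < self.maxage:
                return False  # too soon; caller skips the broker round-trip
            self.hist[key] = now
            return True


cd = Cooldown(5)
print(cd.poke("a", now=100), cd.poke("a", now=102), cd.poke("a", now=106))  # -> True False True
```

This sketch never forgets keys. A long-running process would want to prune entries older than `maxage`.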

375

copyparty/th_srv.py

Normal file

@@ -0,0 +1,375 @@

import os
import sys
import time
import shutil
import base64
import hashlib
import threading
import subprocess as sp

from .__init__ import PY2
from .util import fsenc, mchkcmd, Queue, Cooldown, BytesIO
from .mtag import HAVE_FFMPEG, HAVE_FFPROBE, ffprobe


if not PY2:
    unicode = str


HAVE_PIL = False
HAVE_HEIF = False
HAVE_AVIF = False
HAVE_WEBP = False

try:
    from PIL import Image, ImageOps

    HAVE_PIL = True
    try:
        Image.new("RGB", (2, 2)).save(BytesIO(), format="webp")
        HAVE_WEBP = True
    except:
        pass

    try:
        from pyheif_pillow_opener import register_heif_opener

        register_heif_opener()
        HAVE_HEIF = True
    except:
        pass

    try:
        import pillow_avif

        HAVE_AVIF = True
    except:
        pass
except:
    pass

# https://pillow.readthedocs.io/en/stable/handbook/image-file-formats.html
# ffmpeg -formats
FMT_PIL = "bmp dib gif icns ico jpg jpeg jp2 jpx pcx png pbm pgm ppm pnm sgi tga tif tiff webp xbm dds xpm"
FMT_FF = "av1 asf avi flv m4v mkv mjpeg mjpg mpg mpeg mpg2 mpeg2 mov 3gp mp4 ts mpegts nut ogv ogm rm vob webm wmv"

if HAVE_HEIF:
    FMT_PIL += " heif heifs heic heics"

if HAVE_AVIF:
    FMT_PIL += " avif avifs"

FMT_PIL, FMT_FF = [{x: True for x in y.split(" ") if x} for y in [FMT_PIL, FMT_FF]]


THUMBABLE = {}

if HAVE_PIL:
    THUMBABLE.update(FMT_PIL)

if HAVE_FFMPEG and HAVE_FFPROBE:
    THUMBABLE.update(FMT_FF)


def thumb_path(ptop, rem, mtime, fmt):
    # base16 = 16 = 256
    # b64-lc = 38 = 1444
    # base64 = 64 = 4096
    try:
        rd, fn = rem.rsplit("/", 1)
    except:
        rd = ""
        fn = rem

    if rd:
        h = hashlib.sha512(fsenc(rd)).digest()[:24]
        b64 = base64.urlsafe_b64encode(h).decode("ascii")[:24]
        rd = "{}/{}/".format(b64[:2], b64[2:4]).lower() + b64
    else:
        rd = "top"

    # could keep original filenames but this is safer re pathlen
    h = hashlib.sha512(fsenc(fn)).digest()[:24]
    fn = base64.urlsafe_b64encode(h).decode("ascii")[:24]

    return "{}/.hist/th/{}/{}.{:x}.{}".format(
        ptop, rd, fn, int(mtime), "webp" if fmt == "w" else "jpg"
    )
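`thumb_path` never stores the original names. The folder and the file name are each replaced by the first 24 characters of a URL-safe base64 SHA-512 digest. The folder digest is also fanned out under two lowercased 2-character levels; the `b64-lc = 38 = 1444` comment counts the possible directories per level. The sketch below is a standalone restatement that assumes UTF-8 encoding where the original calls `fsenc()`. `thumb_path_sketch` and `b64_24` are hypothetical names.

```python
import base64
import hashlib


def b64_24(s):
    # first 24 bytes of SHA-512 -> 32 urlsafe-base64 chars -> keep 24
    h = hashlib.sha512(s.encode("utf-8")).digest()[:24]
    return base64.urlsafe_b64encode(h).decode("ascii")[:24]


def thumb_path_sketch(ptop, rem, mtime, fmt):
    """Restatement of thumb_path, with UTF-8 standing in for fsenc()."""
    rd, _, fn = rem.rpartition("/")
    if rd:
        d = b64_24(rd)
        # two lowercased 2-char fanout levels, then the full digest as-is
        rd = "{}/{}/{}".format(d[:2].lower(), d[2:4].lower(), d)
    else:
        rd = "top"  # files in the volume root share one folder
    ext = "webp" if fmt == "w" else "jpg"
    return "{}/.hist/th/{}/{}.{:x}.{}".format(ptop, rd, b64_24(fn), int(mtime), ext)


print(thumb_path_sketch("/srv/pub", "cover.jpg", 1620000000, "w"))
print(thumb_path_sketch("/srv/pub", "music/a.flac", 1620000000, "j"))
```

The mtime is written as lowercase hex. For mtimes between 1978 and 2106 that is exactly 8 digits, which `clean()` relies on when it checks `len(ts) != 8`.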

class ThumbSrv(object):
    def __init__(self, hub, vols):
        self.hub = hub
        self.vols = [v.realpath for v in vols.values()]

        self.args = hub.args
        self.log_func = hub.log

        res = hub.args.th_size.split("x")
        self.res = tuple([int(x) for x in res])
        self.poke_cd = Cooldown(self.args.th_poke)

        self.mutex = threading.Lock()
        self.busy = {}
        self.stopping = False
        self.nthr = os.cpu_count() if hasattr(os, "cpu_count") else 4
        self.q = Queue(self.nthr * 4)
        for _ in range(self.nthr):
            t = threading.Thread(target=self.worker)
            t.daemon = True
            t.start()

        if not self.args.no_vthumb and (not HAVE_FFMPEG or not HAVE_FFPROBE):
            missing = []
            if not HAVE_FFMPEG:
                missing.append("ffmpeg")

            if not HAVE_FFPROBE:
                missing.append("ffprobe")

            msg = "cannot create video thumbnails because some of the required programs are not available: "
            msg += ", ".join(missing)
            self.log(msg, c=1)

        t = threading.Thread(target=self.cleaner)
        t.daemon = True
        t.start()

    def log(self, msg, c=0):
        self.log_func("thumb", msg, c)

    def shutdown(self):
        self.stopping = True
        for _ in range(self.nthr):
            self.q.put(None)

    def stopped(self):
        with self.mutex:
            return not self.nthr

    def get(self, ptop, rem, mtime, fmt):
        tpath = thumb_path(ptop, rem, mtime, fmt)
        abspath = os.path.join(ptop, rem)
        cond = threading.Condition()
        with self.mutex:
            try:
                self.busy[tpath].append(cond)
                self.log("wait {}".format(tpath))
            except:
                thdir = os.path.dirname(tpath)
                try:
                    os.makedirs(thdir)
                except:
                    pass

                inf_path = os.path.join(thdir, "dir.txt")
                if not os.path.exists(inf_path):
                    with open(inf_path, "wb") as f:
                        f.write(fsenc(os.path.dirname(abspath)))

                self.busy[tpath] = [cond]
                self.q.put([abspath, tpath])
                self.log("conv {} \033[0m{}".format(tpath, abspath), c=6)

        while not self.stopping:
            with self.mutex:
                if tpath not in self.busy:
                    break

            with cond:
                cond.wait()

        try:
            st = os.stat(tpath)
            if st.st_size:
                return tpath
        except:
            pass

        return None
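`get` and `worker` together implement a single-flight handoff. The first request for a `tpath` enqueues the conversion, later requests register a `Condition` in `self.busy[tpath]`, and the worker wakes them all when it finishes. The sketch below shows the same idea with one deliberate design change: a single `threading.Condition` guards `busy`. Checking for completion and starting to wait then happen under the same lock, so a finish that lands between them cannot be missed. `SingleFlight` and its members are illustrative names.

```python
import threading
import time


class SingleFlight(object):
    """Run fn(key) once per key at a time; concurrent callers share that run."""

    def __init__(self, fn):
        self.fn = fn
        self.cond = threading.Condition()  # guards busy and done
        self.busy = set()  # keys currently being produced
        self.done = {}  # key -> result of the most recent run
        self.calls = 0  # how many times fn actually ran

    def get(self, key):
        with self.cond:
            if key in self.busy:
                # someone else is producing it; sleep until they finish
                self.cond.wait_for(lambda: key not in self.busy)
                return self.done.get(key)
            self.busy.add(key)
            self.calls += 1
        result = None
        try:
            result = self.fn(key)  # slow work runs without holding the lock
        finally:
            with self.cond:
                self.done[key] = result
                self.busy.discard(key)
                self.cond.notify_all()
        return result


gate = threading.Event()


def make(key):
    gate.wait()  # hold the first caller inside fn until released
    return key.upper()


sf = SingleFlight(make)
results = []
threads = [threading.Thread(target=lambda: results.append(sf.get("a"))) for _ in range(4)]
for t in threads:
    t.start()
time.sleep(0.3)  # give every thread time to reach get()
gate.set()
for t in threads:
    t.join()
print(sf.calls, results)
```

Like `ThumbSrv`, the sketch does not serve finished results to later callers. In copyparty that role is played by `ThumbCli.get`, which checks for the file on disk first.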

    def worker(self):
        while not self.stopping:
            task = self.q.get()
            if not task:
                break

            abspath, tpath = task
            ext = abspath.split(".")[-1].lower()
            fun = None
            if not os.path.exists(tpath):
                if ext in FMT_PIL:
                    fun = self.conv_pil
                elif ext in FMT_FF:
                    fun = self.conv_ffmpeg

            if fun:
                try:
                    fun(abspath, tpath)
                except Exception as ex:
                    msg = "{} failed on {}\n {!r}"
                    self.log(msg.format(fun.__name__, abspath, ex), 3)
                    with open(tpath, "wb") as _:
                        pass

            with self.mutex:
                subs = self.busy[tpath]
                del self.busy[tpath]

            for x in subs:
                with x:
                    x.notify_all()

        with self.mutex:
            self.nthr -= 1

    def conv_pil(self, abspath, tpath):
        with Image.open(fsenc(abspath)) as im:
            crop = not self.args.th_no_crop
            res2 = self.res
            if crop:
                res2 = (res2[0] * 2, res2[1] * 2)

            try:
                im.thumbnail(res2, resample=Image.LANCZOS)
                if crop:
                    iw, ih = im.size
                    dw, dh = self.res
                    res = (min(iw, dw), min(ih, dh))
                    im = ImageOps.fit(im, res, method=Image.LANCZOS)
            except:
                im.thumbnail(self.res)

            if im.mode not in ("RGB", "L"):
                im = im.convert("RGB")

            if tpath.endswith(".webp"):
                # quality 80 = pillow-default
                # quality 75 = ffmpeg-default
                # method 0 = pillow-default, fast
                # method 4 = ffmpeg-default
                # method 6 = max, slow
                im.save(tpath, quality=40, method=6)
            else:
                im.save(tpath, quality=40)  # default=75
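With cropping enabled, `conv_pil` first shrinks the image to twice the target size with LANCZOS. It then lets `ImageOps.fit` center-crop and scale the result to at most `self.res`. The crop rectangle of such a centered fill can be computed as below. This is an illustrative sketch (`cover_box` is a hypothetical name), and Pillow's own rounding may differ by a pixel.

```python
def cover_box(iw, ih, dw, dh):
    """Largest centered (left, top, right, bottom) box in an iw x ih image
    whose aspect ratio is dw:dh; cropping to it and resizing to dw x dh
    fills the target completely."""
    if iw * dh > ih * dw:
        # source is wider than the target: keep full height, trim the sides
        cw, ch = ih * dw // dh, ih
    else:
        # source is taller or equal: keep full width, trim top and bottom
        cw, ch = iw, iw * dh // dw
    left = (iw - cw) // 2
    top = (ih - ch) // 2
    return (left, top, left + cw, top + ch)


print(cover_box(1920, 1080, 320, 320))  # -> (420, 0, 1500, 1080)
print(cover_box(600, 800, 320, 160))
```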

    def conv_ffmpeg(self, abspath, tpath):
        ret, _ = ffprobe(abspath)

        dur = ret[".dur"][1] if ".dur" in ret else 4
        seek = "{:.0f}".format(dur / 3)

        scale = "scale={0}:{1}:force_original_aspect_ratio="
        if self.args.th_no_crop:
            scale += "decrease,setsar=1:1"
        else:
            scale += "increase,crop={0}:{1},setsar=1:1"

        scale = scale.format(*list(self.res)).encode("utf-8")
        cmd = [
            b"ffmpeg",
            b"-nostdin",
            b"-hide_banner",
            b"-ss",
            seek,
            b"-i",
            fsenc(abspath),
            b"-vf",
            scale,
            b"-vframes",
            b"1",
        ]

        if tpath.endswith(".jpg"):
            cmd += [
                b"-q:v",
                b"6",  # default=??
            ]
        else:
            cmd += [
                b"-q:v",
                b"50",  # default=75
                b"-compression_level:v",
                b"6",  # default=4, 0=fast, 6=max
            ]

        cmd += [fsenc(tpath)]

        mchkcmd(cmd)
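`conv_ffmpeg` seeks to a third of the duration. When ffprobe reports no duration it assumes 4 s, so the seek is about 1 s. It then uses `force_original_aspect_ratio=increase` plus `crop` to fill the box, or `decrease` to fit inside it. The sketch below rebuilds the same command with `str` arguments; `ffmpeg_thumb_cmd` and the 320x256 size in the example are assumptions.

```python
def ffmpeg_thumb_cmd(src, dst, res, dur=None, crop=True):
    """Build an ffmpeg argv that grabs one frame a third of the way in.

    Restatement of conv_ffmpeg with str arguments instead of bytes.
    `res` is (width, height); `dur` is the duration in seconds, or None
    to fall back to 4 s like the original.
    """
    w, h = res
    seek = "{:.0f}".format((4 if dur is None else dur) / 3)
    if crop:
        # scale until the frame covers the whole box, then cut the overflow
        vf = "scale={0}:{1}:force_original_aspect_ratio=increase,crop={0}:{1},setsar=1:1"
    else:
        # scale until the whole frame fits inside the box
        vf = "scale={0}:{1}:force_original_aspect_ratio=decrease,setsar=1:1"
    cmd = [
        "ffmpeg",
        "-nostdin",
        "-hide_banner",
        "-ss",
        seek,  # placed before -i, so ffmpeg seeks in the input
        "-i",
        src,
        "-vf",
        vf.format(w, h),  # setsar=1:1 marks the output pixels as square
        "-vframes",
        "1",
    ]
    if dst.endswith(".jpg"):
        cmd += ["-q:v", "6"]
    else:
        cmd += ["-q:v", "50", "-compression_level:v", "6"]  # webp settings from the diff
    return cmd + [dst]


print(" ".join(ffmpeg_thumb_cmd("in.mkv", "out.webp", (320, 256), dur=90)))
```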

    def poke(self, tdir):
        if not self.poke_cd.poke(tdir):
            return

        ts = int(time.time())
        try:
            p1 = os.path.dirname(tdir)
            p2 = os.path.dirname(p1)
            for dp in [tdir, p1, p2]:
                os.utime(fsenc(dp), (ts, ts))
        except:
            pass

    def cleaner(self):
        interval = self.args.th_clean
        while True:
            time.sleep(interval)
            for vol in self.vols:
                vol += "/.hist/th"
                self.log("cln {}/".format(vol))
                self.clean(vol)

            self.log("cln ok")

    def clean(self, vol):
        # self.log("cln {}".format(vol))
        maxage = self.args.th_maxage
        now = time.time()
        prev_b64 = None
        prev_fp = None
        try:
            ents = os.listdir(vol)
        except:
            return

        for f in sorted(ents):
            fp = os.path.join(vol, f)
            cmp = fp.lower().replace("\\", "/")

            # "top" or b64 prefix/full (a folder)
            if len(f) <= 3 or len(f) == 24:
                age = now - os.path.getmtime(fp)
                if age > maxage:
                    with self.mutex:
                        safe = True
                        for k in self.busy.keys():
                            if k.lower().replace("\\", "/").startswith(cmp):
                                safe = False
                                break

                        if safe:
                            self.log("rm -rf [{}]".format(fp))
                            shutil.rmtree(fp, ignore_errors=True)
                else:
                    self.clean(fp)
                continue

            # thumb file
            try:
                b64, ts, ext = f.split(".")
                if len(b64) != 24 or len(ts) != 8 or ext not in ["jpg", "webp"]: