mirror of

https://github.com/9001/copyparty.git

synced 2025-10-24 00:24:04 +00:00

Compare commits

315 Commits

| Author | SHA1 | Date | |
|---|---|---|---|
| | 633b1f0a78 | | |
| | 6136b9bf9c | | |
| | 524a3ba566 | | |
| | 58580320f9 | | |
| | 759b0a994d | | |
| | d2800473e4 | | |
| | f5b1a2065e | | |
| | 5e62532295 | | |
| | c1bee96c40 | | |
| | f273253a2b | | |
| | 012bbcf770 | | |
| | b54cb47b2e | | |
| | 1b15f43745 | | |
| | 96771bf1bd | | |
| | 580078bddb | | |
| | c5c7080ec6 | | |
| | 408339b51d | | |
| | 02e3d44998 | | |
| | 156f13ded1 | | |
| | d288467cb7 | | |
| | 21662c9f3f | | |
| | 9149fe6cdd | | |
| | 9a146192b7 | | |
| | 3a9d3b7b61 | | |
| | f03f0973ab | | |
| | 7ec0881e8c | | |
| | 59e1ab42ff | | |
| | 722216b901 | | |
| | bd8f3dc368 | | |
| | 33cd94a141 | | |
| | 053ac74734 | | |
| | cced99fafa | | |
| | a009ff53f7 | | |
| | ca16c4108d | | |
| | d1b6c67dc3 | | |
| | a61f8133d5 | | |
| | 38d797a544 | | |
| | 16c1877f50 | | |
| | da5f15a778 | | |
| | 396c64ecf7 | | |
| | 252c3a7985 | | |
| | a3ecbf0ae7 | | |
| | 314327d8f2 | | |
| | bfacd06929 | | |
| | 4f5e8f8cf5 | | |
| | 1fbb4c09cc | | |
| | b332e1992b | | |
| | 5955940b82 | | |
| | 231a03bcfd | | |
| | bc85723657 | | |
| | be32b743c6 | | |
| | 83c9843059 | | |
| | 11cf43626d | | |
| | a6dc5e2ce3 | | |
| | 38593a0394 | | |
| | 95309afeea | | |
| | c2bf6fe2a3 | | |
| | 99ac324fbd | | |
| | 5562de330f | | |
| | 95014236ac | | |
| | 6aa7386138 | | |
| | 3226a1f588 | | |
| | b4cf890cd8 | | |
| | ce09e323af | | |
| | 941aedb177 | | |
| | 87a0d502a3 | | |
| | cab7c1b0b8 | | |
| | d5892341b6 | | |
| | 646557a43e | | |
| | ed8d34ab43 | | |
| | 5e34463c77 | | |
| | 1b14eb7959 | | |
| | ed48c2d0ed | | |
| | 26fe84b660 | | |
| | 5938230270 | | |
| | 1a33a047fa | | |
| | 43a8bcefb9 | | |
| | 2e740e513f | | |
| | 8a21a86b61 | | |
| | f600116205 | | |
| | 1c03705de8 | | |
| | f7e461fac6 | | |
| | 03ce6c97ff | | |
| | ffd9e76e07 | | |
| | fc49cb1e67 | | |
| | f5712d9f25 | | |
| | 161d57bdda | | |
| | bae0d440bf | | |
| | fff052dde1 | | |
| | 73b06eaa02 | | |
| | 08a8ebed17 | | |
| | 74d07426b3 | | |
| | 69a2bba99a | | |
| | 4d685d78ee | | |
| | 5845ec3f49 | | |
| | 13373426fe | | |
| | 8e55551a06 | | |
| | 12a3f0ac31 | | |
| | 18e33edc88 | | |
| | c72c5ad4ee | | |
| | 0fbc81ab2f | | |
| | af0a34cf82 | | |
| | b4590c5398 | | |
| | f787a66230 | | |
| | b21a99fd62 | | |
| | eb16306cde | | |
| | 7bc23687e3 | | |
| | e1eaa057f2 | | |
| | 97c264ca3e | | |
| | cf848ab1f7 | | |
| | cf83f9b0fd | | |
| | d98e361083 | | |
| | ce7f5309c7 | | |
| | 75c485ced7 | | |
| | 9c6e2ec012 | | |
| | 1a02948a61 | | |
| | 8b05ba4ba1 | | |
| | 21e2874cb7 | | |
| | 360ed5c46c | | |
| | 5099bc365d | | |
| | 12986da147 | | |
| | 23e72797bc | | |
| | ac7b6f8f55 | | |
| | 981b9ff11e | | |
| | 4186906f4c | | |
| | 0850d24e0c | | |
| | 7ab8334c96 | | |
| | a4d7329ab7 | | |
| | 3f4eae6bce | | |
| | 518cf4be57 | | |
| | 71096182be | | |
| | 6452e927ea | | |
| | bc70cfa6f0 | | |
| | 2b6e5ebd2d | | |
| | c761bd799a | | |
| | 2f7c2fdee4 | | |
| | 70a76ec343 | | |
| | 7c3f64abf2 | | |
| | f5f38f195c | | |
| | 7e84f4f015 | | |
| | 4802f8cf07 | | |
| | cc05e67d8f | | |
| | 2b6b174517 | | |
| | a1d05e6e12 | | |
| | f95ceb6a9b | | |
| | 8f91b0726d | | |
| | 97807f4383 | | |
| | 5f42237f2c | | |
| | 68289cfa54 | | |
| | 42ea30270f | | |
| | ebbbbf3d82 | | |
| | 27516e2d16 | | |
| | 84bb6f915e | | |
| | 46752f758a | | |
| | 34c4c22e61 | | |
| | af2d0b8421 | | |
| | 638b05a49a | | |
| | 7a13e8a7fc | | |
| | d9fa74711d | | |
| | 41867f578f | | |
| | 0bf41ed4ef | | |
| | d080b4a731 | | |
| | ca4232ada9 | | |
| | ad348f91c9 | | |
| | 990f915f42 | | |
| | 53d720217b | | |
| | 7a06ff480d | | |
| | 3ef551f788 | | |
| | f0125cdc36 | | |
| | ed5f6736df | | |
| | 15d8be0fae | | |
| | 46f3e61360 | | |
| | 87ad8c98d4 | | |
| | 9bbdc4100f | | |
| | c80307e8ff | | |
| | c1d77e1041 | | |
| | d9e83650dc | | |
| | f6d635acd9 | | |
| | 0dbd8a01ff | | |
| | 8d755d41e0 | | |
| | 190473bd32 | | |
| | 030d1ec254 | | |
| | 5a2b91a084 | | |
| | a50a05e4e7 | | |
| | 6cb5a87c79 | | |
| | b9f89ca552 | | |
| | 26c9fd5dea | | |
| | e81a9b6fe0 | | |
| | 452450e451 | | |
| | 419dd2d1c7 | | |
| | ee86b06676 | | |
| | 953183f16d | | |
| | 228f71708b | | |
| | 621471a7cb | | |
| | 8b58e951e3 | | |
| | 1db489a0aa | | |
| | be65c3c6cf | | |
| | 46e7fa31fe | | |
| | 66e21bd499 | | |
| | 8cab4c01fd | | |
| | d52038366b | | |
| | 4fcfd87f5b | | |
| | f893c6baa4 | | |
| | 9a45549b66 | | |
| | ae3a01038b | | |
| | e47a2a4ca2 | | |
| | 95ea6d5f78 | | |
| | 7d290f6b8f | | |
| | 9db617ed5a | | |
| | 514456940a | | |
| | 33feefd9cd | | |
| | 65e14cf348 | | |
| | 1d61bcc4f3 | | |
| | c38bbaca3c | | |
| | 246d245ebc | | |
| | f269a710e2 | | |
| | 051998429c | | |
| | 432cdd640f | | |
| | 9ed9b0964e | | |
| | 6a97b3526d | | |
| | 451d757996 | | |
| | f9e9eba3b1 | | |
| | 2a9a6aebd9 | | |
| | adbb6c449e | | |
| | 3993605324 | | |
| | 0ae574ec2c | | |
| | c56ded828c | | |
| | 02c7061945 | | |
| | 9209e44cd3 | | |
| | ebed37394e | | |
| | 4c7a2a7ec3 | | |
| | 0a25a88a34 | | |
| | 6aa9025347 | | |
| | a918cc67eb | | |
| | 08f4695283 | | |
| | 44e76d5eeb | | |
| | cfa36fd279 | | |
| | 3d4166e006 | | |
| | 07bac1c592 | | |
| | 755f2ce1ba | | |
| | cca2844deb | | |
| | 24a2f760b7 | | |
| | 79bbd8fe38 | | |
| | 35dce1e3e4 | | |
| | f886fdf913 | | |
| | 4476f2f0da | | |
| | 160f161700 | | |
| | c164fc58a2 | | |
| | 0c625a4e62 | | |
| | bf3941cf7a | | |
| | 3649e8288a | | |
| | 9a45e26026 | | |
| | e65f127571 | | |
| | 3bfc699787 | | |
| | 955318428a | | |
| | f6279b356a | | |
| | 4cc3cdc989 | | |
| | f9aa20a3ad | | |
| | 129d33f1a0 | | |
| | 1ad7a3f378 | | |
| | b533be8818 | | |
| | fb729e5166 | | |
| | d337ecdb20 | | |
| | 5f1f0a48b0 | | |
| | e0f1cb94a5 | | |
| | a362ee2246 | | |
| | 19f23c686e | | |
| | 23b20ff4a6 | | |
| | 72574da834 | | |
| | d5a79455d1 | | |
| | 070d4b9da9 | | |
| | 0ace22fffe | | |
| | 9e483d7694 | | |
| | 26458b7a06 | | |
| | b6a4604952 | | |
| | af752fbbc2 | | |
| | 279c9d706a | | |
| | 806e7b5530 | | |
| | f3dc6a217b | | |
| | 7671d791fa | | |
| | 8cd84608a5 | | |
| | 980c6fc810 | | |
| | fb40a484c5 | | |
| | daa9dedcaa | | |
| | 0d634345ac | | |
| | e648252479 | | |
| | 179d7a9ad8 | | |
| | 19bc962ad5 | | |
| | 27cce086c6 | | |
| | fec0c620d4 | | |
| | 05a1a31cab | | |
| | d020527c6f | | |
| | 4451485664 | | |
| | a4e1a3738a | | |
| | 4339dbeb8d | | |
| | 5b0605774c | | |
| | e3684e25f8 | | |
| | 1359213196 | | |
| | 03efc6a169 | | |
| | 15b5982211 | | |
| | 0eb3a5d387 | | |
| | 7f8777389c | | |
| | 4eb20f10ad | | |
| | daa11df558 | | |
| | 1bb0db30a0 | | |
| | 02910b0020 | | |
| | 23b8901c9c | | |
| | 99f6ed0cd7 | | |
| | 890c310880 | | |
| | 0194eeb31f | | |
| | f9be4c62b1 | | |
| | 027e8c18f1 | | |
| | 4a3bb35a95 | | |
| | 4bfb0d4494 | | |
| | 7e0ef03a1e | | |

40

.github/ISSUE_TEMPLATE/bug_report.md

vendored

Normal file

@@ -0,0 +1,40 @@

---
name: Bug report
about: Create a report to help us improve
title: ''
labels: bug
assignees: '9001'

---

NOTE:
all of the below are optional, consider them as inspiration, delete and rewrite at will, thx md


**Describe the bug**
a description of what the bug is

**To Reproduce**
List of steps to reproduce the issue, or, if it's hard to reproduce, then at least a detailed explanation of what you did to run into it

**Expected behavior**
a description of what you expected to happen

**Screenshots**
if applicable, add screenshots to help explain your problem, such as the kickass crashpage :^)

**Server details**
if the issue is possibly on the server-side, then mention some of the following:
* server OS / version:
* python version:
* copyparty arguments:
* filesystem (`lsblk -f` on linux):

**Client details**
if the issue is possibly on the client-side, then mention some of the following:
* the device type and model:
* OS version:
* browser version:

**Additional context**
any other context about the problem here

22

.github/ISSUE_TEMPLATE/feature_request.md

vendored

Normal file

@@ -0,0 +1,22 @@

---
name: Feature request
about: Suggest an idea for this project
title: ''
labels: enhancement
assignees: '9001'

---

all of the below are optional, consider them as inspiration, delete and rewrite at will

**is your feature request related to a problem? Please describe.**
a description of what the problem is, for example, `I'm always frustrated when [...]` or `Why is it not possible to [...]`

**Describe the idea / solution you'd like**
a description of what you want to happen

**Describe any alternatives you've considered**
a description of any alternative solutions or features you've considered

**Additional context**
add any other context or screenshots about the feature request here

10

.github/ISSUE_TEMPLATE/something-else.md

vendored

Normal file

@@ -0,0 +1,10 @@

---
name: Something else
about: "┐(゚∀゚)┌"
title: ''
labels: ''
assignees: ''

---

7

.github/branch-rename.md

vendored

Normal file

@@ -0,0 +1,7 @@

modernize your local checkout of the repo like so,

```sh
git branch -m master hovudstraum
git fetch origin
git branch -u origin/hovudstraum hovudstraum
git remote set-head origin -a
```

24

CODE_OF_CONDUCT.md

Normal file

@@ -0,0 +1,24 @@

in the words of Abraham Lincoln:

> Be excellent to each other... and... PARTY ON, DUDES!

more specifically I'll paraphrase some examples from a german automotive corporation as they cover all the bases without being too wordy

## Examples of unacceptable behavior
* intimidation, harassment, trolling
* insulting, derogatory, harmful or prejudicial comments
* posting private information without permission
* political or personal attacks

## Examples of expected behavior
* being nice, friendly, welcoming, inclusive, mindful and empathetic
* acting considerate, modest, respectful
* using polite and inclusive language
* criticize constructively and accept constructive criticism
* respect different points of view

## finally and even more specifically,
* parse opinions and feedback objectively without prejudice
* it's the message that matters, not who said it

aaand that's how you say `be nice` in a way that fills half a floppy w

3

CONTRIBUTING.md

Normal file

@@ -0,0 +1,3 @@

* do something cool

really tho, send a PR or an issue or whatever, all appreciated, anything goes, just behave aight

555

README.md

@@ -6,85 +6,100 @@

|

||||

|

||||

## summary

|

||||

|

||||

turn your phone or raspi into a portable file server with resumable uploads/downloads using IE6 or any other browser

|

||||

turn your phone or raspi into a portable file server with resumable uploads/downloads using *any* web browser

|

||||

|

||||

* server runs on anything with `py2.7` or `py3.3+`

|

||||

* server only needs `py2.7` or `py3.3+`, all dependencies optional

|

||||

* browse/upload with IE4 / netscape4.0 on win3.11 (heh)

|

||||

* *resumable* uploads need `firefox 34+` / `chrome 41+` / `safari 7+` for full speed

|

||||

* code standard: `black`

|

||||

|

||||

📷 **screenshots:** [browser](#the-browser) // [upload](#uploading) // [thumbnails](#thumbnails) // [md-viewer](#markdown-viewer) // [search](#searching) // [fsearch](#file-search) // [zip-DL](#zip-downloads) // [ie4](#browser-support)

|

||||

📷 **screenshots:** [browser](#the-browser) // [upload](#uploading) // [unpost](#unpost) // [thumbnails](#thumbnails) // [search](#searching) // [fsearch](#file-search) // [zip-DL](#zip-downloads) // [md-viewer](#markdown-viewer) // [ie4](#browser-support)

|

||||

|

||||

|

||||

## readme toc

|

||||

|

||||

* top

|

||||

* [quickstart](#quickstart)

|

||||

* [on debian](#on-debian)

|

||||

* [notes](#notes)

|

||||

* [status](#status)

|

||||

* [testimonials](#testimonials)

|

||||

* **[quickstart](#quickstart)** - download **[copyparty-sfx.py](https://github.com/9001/copyparty/releases/latest/download/copyparty-sfx.py)** and you're all set!

|

||||

* [on servers](#on-servers) - you may also want these, especially on servers

|

||||

* [on debian](#on-debian) - recommended additional steps on debian

|

||||

* [notes](#notes) - general notes

|

||||

* [status](#status) - feature summary

|

||||

* [testimonials](#testimonials) - small collection of user feedback

|

||||

* [bugs](#bugs)

|

||||

* [general bugs](#general-bugs)

|

||||

* [not my bugs](#not-my-bugs)

|

||||

* [the browser](#the-browser)

|

||||

* [tabs](#tabs)

|

||||

* [hotkeys](#hotkeys)

|

||||

* [tree-mode](#tree-mode)

|

||||

* [thumbnails](#thumbnails)

|

||||

* [zip downloads](#zip-downloads)

|

||||

* [uploading](#uploading)

|

||||

* [file-search](#file-search)

|

||||

* [markdown viewer](#markdown-viewer)

|

||||

* [accounts and volumes](#accounts-and-volumes) - per-folder, per-user permissions

|

||||

* [the browser](#the-browser) - accessing a copyparty server using a web-browser

|

||||

* [tabs](#tabs) - the main tabs in the ui

|

||||

* [hotkeys](#hotkeys) - the browser has the following hotkeys

|

||||

* [navpane](#navpane) - switching between breadcrumbs or navpane

|

||||

* [thumbnails](#thumbnails) - press `g` to toggle grid-view instead of the file listing

|

||||

* [zip downloads](#zip-downloads) - download folders (or file selections) as `zip` or `tar` files

|

||||

* [uploading](#uploading) - drag files/folders into the web-browser to upload

|

||||

* [file-search](#file-search) - dropping files into the browser also lets you see if they exist on the server

|

||||

* [unpost](#unpost) - undo/delete accidental uploads

|

||||

* [file manager](#file-manager) - cut/paste, rename, and delete files/folders (if you have permission)

|

||||

* [batch rename](#batch-rename) - select some files and press `F2` to bring up the rename UI

|

||||

* [markdown viewer](#markdown-viewer) - and there are *two* editors

|

||||

* [other tricks](#other-tricks)

|

||||

* [searching](#searching)

|

||||

* [search configuration](#search-configuration)

|

||||

* [database location](#database-location)

|

||||

* [metadata from audio files](#metadata-from-audio-files)

|

||||

* [file parser plugins](#file-parser-plugins)

|

||||

* [searching](#searching) - search by size, date, path/name, mp3-tags, ...

|

||||

* [server config](#server-config)

|

||||

* [file indexing](#file-indexing)

|

||||

* [upload rules](#upload-rules) - set upload rules using volume flags

|

||||

* [compress uploads](#compress-uploads) - files can be autocompressed on upload

|

||||

* [database location](#database-location) - in-volume (`.hist/up2k.db`, default) or somewhere else

|

||||

* [metadata from audio files](#metadata-from-audio-files) - set `-e2t` to index tags on upload

|

||||

* [file parser plugins](#file-parser-plugins) - provide custom parsers to index additional tags

|

||||

* [complete examples](#complete-examples)

|

||||

* [browser support](#browser-support)

|

||||

* [client examples](#client-examples)

|

||||

* [up2k](#up2k)

|

||||

* [performance](#performance)

|

||||

* [dependencies](#dependencies)

|

||||

* [optional dependencies](#optional-dependencies)

|

||||

* [browser support](#browser-support) - TLDR: yes

|

||||

* [client examples](#client-examples) - interact with copyparty using non-browser clients

|

||||

* [up2k](#up2k) - quick outline of the up2k protocol, see [uploading](#uploading) for the web-client

|

||||

* [why chunk-hashes](#why-chunk-hashes) - a single sha512 would be better, right?

|

||||

* [performance](#performance) - defaults are usually fine - expect `8 GiB/s` download, `1 GiB/s` upload

|

||||

* [security](#security) - some notes on hardening

|

||||

* [gotchas](#gotchas) - behavior that might be unexpected

|

||||

* [dependencies](#dependencies) - mandatory deps

|

||||

* [optional dependencies](#optional-dependencies) - install these to enable bonus features

|

||||

* [install recommended deps](#install-recommended-deps)

|

||||

* [optional gpl stuff](#optional-gpl-stuff)

|

||||

* [sfx](#sfx)

|

||||

* [sfx repack](#sfx-repack)

|

||||

* [sfx](#sfx) - there are two self-contained "binaries"

|

||||

* [sfx repack](#sfx-repack) - reduce the size of an sfx by removing features

|

||||

* [install on android](#install-on-android)

|

||||

* [building](#building)

|

||||

* [dev env setup](#dev-env-setup)

|

||||

* [just the sfx](#just-the-sfx)

|

||||

* [complete release](#complete-release)

|

||||

* [todo](#todo)

|

||||

* [todo](#todo) - roughly sorted by priority

|

||||

* [discarded ideas](#discarded-ideas)

|

||||

|

||||

|

||||

## quickstart

|

||||

|

||||

download [copyparty-sfx.py](https://github.com/9001/copyparty/releases/latest/download/copyparty-sfx.py) and you're all set!

|

||||

download **[copyparty-sfx.py](https://github.com/9001/copyparty/releases/latest/download/copyparty-sfx.py)** and you're all set!

|

||||

|

||||

running the sfx without arguments (for example doubleclicking it on Windows) will give everyone full access to the current folder; see `-h` for help if you want accounts and volumes etc

|

||||

running the sfx without arguments (for example doubleclicking it on Windows) will give everyone read/write access to the current folder; see `-h` for help if you want [accounts and volumes](#accounts-and-volumes) etc

|

||||

|

||||

some recommended options:

|

||||

* `-e2dsa` enables general file indexing, see [search configuration](#search-configuration)

|

||||

* `-e2dsa` enables general [file indexing](#file-indexing)

|

||||

* `-e2ts` enables audio metadata indexing (needs either FFprobe or Mutagen), see [optional dependencies](#optional-dependencies)

|

||||

* `-v /mnt/music:/music:r:afoo -a foo:bar` shares `/mnt/music` as `/music`, `r`eadable by anyone, with user `foo` as `a`dmin (read/write), password `bar`

|

||||

* the syntax is `-v src:dst:perm:perm:...` so local-path, url-path, and one or more permissions to set

|

||||

* replace `:r:afoo` with `:rfoo` to only make the folder readable by `foo` and nobody else

|

||||

* in addition to `r`ead and `a`dmin, `w`rite makes a folder write-only, so cannot list/access files in it

|

||||

* `-v /mnt/music:/music:r:rw,foo -a foo:bar` shares `/mnt/music` as `/music`, `r`eadable by anyone, and read-write for user `foo`, password `bar`

|

||||

* replace `:r:rw,foo` with `:r,foo` to only make the folder readable by `foo` and nobody else

|

||||

* see [accounts and volumes](#accounts-and-volumes) for the syntax and other access levels (`r`ead, `w`rite, `m`ove, `d`elete)

|

||||

* `--ls '**,*,ln,p,r'` to crash on startup if any of the volumes contains a symlink which points outside the volume, as that could give users unintended access

|

||||

|

||||

|

||||

### on servers

|

||||

|

||||

you may also want these, especially on servers:

|

||||

|

||||

* [contrib/systemd/copyparty.service](contrib/systemd/copyparty.service) to run copyparty as a systemd service

|

||||

* [contrib/systemd/prisonparty.service](contrib/systemd/prisonparty.service) to run it in a chroot (for extra security)

|

||||

* [contrib/nginx/copyparty.conf](contrib/nginx/copyparty.conf) to reverse-proxy behind nginx (for better https)

|

||||

|

||||

|

||||

### on debian

|

||||

|

||||

recommended steps to enable audio metadata and thumbnails (from images and videos):

|

||||

recommended additional steps on debian which enable audio metadata and thumbnails (from images and videos):

|

||||

|

||||

* as root, run the following:

|

||||

`apt install python3 python3-pip python3-dev ffmpeg`

|

||||

@@ -97,7 +112,7 @@ recommended steps to enable audio metadata and thumbnails (from images and video

|

||||

|

||||

## notes

|

||||

|

||||

general:

|

||||

general notes:

|

||||

* paper-printing is affected by dark/light-mode! use lightmode for color, darkmode for grayscale

|

||||

* because no browsers currently implement the media-query to do this properly orz

|

||||

|

||||

@@ -106,43 +121,45 @@ browser-specific:

|

||||

* Android-Chrome: increase "parallel uploads" for higher speed (android bug)

|

||||

* Android-Firefox: takes a while to select files (their fix for ☝️)

|

||||

* Desktop-Firefox: ~~may use gigabytes of RAM if your files are massive~~ *seems to be OK now*

|

||||

* Desktop-Firefox: may stop you from deleting folders you've uploaded until you visit `about:memory` and click `Minimize memory usage`

|

||||

* Desktop-Firefox: may stop you from deleting files you've uploaded until you visit `about:memory` and click `Minimize memory usage`

|

||||

|

||||

|

||||

## status

|

||||

|

||||

summary: all planned features work! now please enjoy the bloatening

|

||||

feature summary

|

||||

|

||||

* backend stuff

|

||||

* ☑ sanic multipart parser

|

||||

* ☑ multiprocessing (actual multithreading)

|

||||

* ☑ volumes (mountpoints)

|

||||

* ☑ accounts

|

||||

* ☑ [accounts](#accounts-and-volumes)

|

||||

* upload

|

||||

* ☑ basic: plain multipart, ie6 support

|

||||

* ☑ up2k: js, resumable, multithreaded

|

||||

* ☑ [up2k](#uploading): js, resumable, multithreaded

|

||||

* ☑ stash: simple PUT filedropper

|

||||

* ☑ [unpost](#unpost): undo/delete accidental uploads

|

||||

* ☑ symlink/discard existing files (content-matching)

|

||||

* download

|

||||

* ☑ single files in browser

|

||||

* ☑ folders as zip / tar files

|

||||

* ☑ [folders as zip / tar files](#zip-downloads)

|

||||

* ☑ FUSE client (read-only)

|

||||

* browser

|

||||

* ☑ tree-view

|

||||

* ☑ [navpane](#navpane) (directory tree sidebar)

|

||||

* ☑ file manager (cut/paste, delete, [batch-rename](#batch-rename))

|

||||

* ☑ audio player (with OS media controls)

|

||||

* ☑ thumbnails

|

||||

* ☑ image gallery with webm player

|

||||

* ☑ [thumbnails](#thumbnails)

|

||||

* ☑ ...of images using Pillow

|

||||

* ☑ ...of videos using FFmpeg

|

||||

* ☑ cache eviction (max-age; maybe max-size eventually)

|

||||

* ☑ image gallery with webm player

|

||||

* ☑ SPA (browse while uploading)

|

||||

* if you use the file-tree on the left only, not folders in the file list

|

||||

* if you use the navpane to navigate, not folders in the file list

|

||||

* server indexing

|

||||

* ☑ locate files by contents

|

||||

* ☑ [locate files by contents](#file-search)

|

||||

* ☑ search by name/path/date/size

|

||||

* ☑ search by ID3-tags etc.

|

||||

* ☑ [search by ID3-tags etc.](#searching)

|

||||

* markdown

|

||||

* ☑ viewer

|

||||

* ☑ [viewer](#markdown-viewer)

|

||||

* ☑ editor (sure why not)

|

||||

|

||||

|

||||

@@ -158,14 +175,11 @@ small collection of user feedback

|

||||

* Windows: python 3.7 and older cannot read tags with FFprobe, so use Mutagen or upgrade

|

||||

* Windows: python 2.7 cannot index non-ascii filenames with `-e2d`

|

||||

* Windows: python 2.7 cannot handle filenames with mojibake

|

||||

* `--th-ff-jpg` may fix video thumbnails on some FFmpeg versions

|

||||

* `--th-ff-jpg` may fix video thumbnails on some FFmpeg versions (macos, some linux)

|

||||

|

||||

## general bugs

|

||||

|

||||

* all volumes must exist / be available on startup; up2k (mtp especially) gets funky otherwise

|

||||

* cannot mount something at `/d1/d2/d3` unless `d2` exists inside `d1`

|

||||

* dupe files will not have metadata (audio tags etc) displayed in the file listing

|

||||

* because they don't get `up` entries in the db (probably best fix) and `tx_browser` does not `lstat`

|

||||

* probably more, pls let me know

|

||||

|

||||

## not my bugs

|

||||

@@ -177,33 +191,72 @@ small collection of user feedback

|

||||

* this is an msys2 bug, the regular windows edition of python is fine

|

||||

|

||||

* VirtualBox: sqlite throws `Disk I/O Error` when running in a VM and the up2k database is in a vboxsf

|

||||

* use `--hist` or the `hist` volflag (`-v [...]:chist=/tmp/foo`) to place the db inside the vm instead

|

||||

* use `--hist` or the `hist` volflag (`-v [...]:c,hist=/tmp/foo`) to place the db inside the vm instead

|

||||

|

||||

|

||||

# accounts and volumes

per-folder, per-user permissions
* `-a usr:pwd` adds account `usr` with password `pwd`
* `-v .::r` adds current-folder `.` as the webroot, `r`eadable by anyone
  * the syntax is `-v src:dst:perm:perm:...` so local-path, url-path, and one or more permissions to set
  * granting the same permissions to multiple accounts:
    `-v .::r,usr1,usr2:rw,usr3,usr4` = usr1/2 read-only, 3/4 read-write

permissions:
* `r` (read): browse folder contents, download files, download as zip/tar
* `w` (write): upload files, move files *into* this folder
* `m` (move): move files/folders *from* this folder
* `d` (delete): delete files/folders
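
As a rough illustration of how these four access levels combine, here is a minimal Python sketch. It is not copyparty's actual implementation; the `PERMS` table and the helper names `can_move` and `can_upload_blind` are hypothetical, chosen only to mirror the descriptions above.

```python
# Hypothetical model of the four access levels listed above;
# these names are illustrative and not taken from copyparty's code.
PERMS = {
    "r": "browse folder contents, download files, download as zip/tar",
    "w": "upload files, move files into this folder",
    "m": "move files/folders from this folder",
    "d": "delete files/folders",
}


def can_move(src_perms: str, dst_perms: str) -> bool:
    """A move needs `m` on the source folder and `w` on the destination."""
    return "m" in src_perms and "w" in dst_perms


def can_upload_blind(perms: str) -> bool:
    """Write without read: uploads are allowed, listing the folder is not."""
    return "w" in perms and "r" not in perms


print(can_move("rm", "rw"))   # True: `m` on source, `w` on destination
print(can_move("r", "rw"))    # False: no `m` on the source folder
print(can_upload_blind("w"))  # True: a write-only folder
```

The point of the sketch is that moving a file touches two folders, so it depends on two separate permission sets rather than one.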

|

||||

|

||||

examples:
* add accounts named u1, u2, u3 with passwords p1, p2, p3: `-a u1:p1 -a u2:p2 -a u3:p3`
* make folder `/srv` the root of the filesystem, read-only by anyone: `-v /srv::r`
* make folder `/mnt/music` available at `/music`, read-only for u1 and u2, read-write for u3: `-v /mnt/music:music:r,u1,u2:rw,u3`
  * unauthorized users accessing the webroot can see that the `music` folder exists, but cannot open it
* make folder `/mnt/incoming` available at `/inc`, write-only for u1, read-move for u2: `-v /mnt/incoming:inc:w,u1:rm,u2`
  * unauthorized users accessing the webroot can see that the `inc` folder exists, but cannot open it
  * `u1` can open the `inc` folder, but cannot see the contents, only upload new files to it
  * `u2` can browse it and move files *from* `/inc` into any folder where `u2` has write-access
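
The `-v src:dst:perm:perm:...` syntax used in these examples can be sketched as a small parser. This is a simplified illustration under stated assumptions, not copyparty's own parsing code: `parse_volume` and the `"*"` wildcard standing for "anyone" are hypothetical, and the sketch does not handle volflags (such as `c,hist=...`) or Windows drive letters in the local path.

```python
from typing import Dict, List, Tuple


def parse_volume(spec: str) -> Tuple[str, str, Dict[str, List[str]]]:
    """Split `src:dst:perm[,user...]:...` into its parts.

    A permission entry without usernames (such as `r`) applies to
    everyone, represented here by the assumed wildcard user "*".
    """
    src, dst, *entries = spec.split(":")
    access: Dict[str, List[str]] = {}
    for entry in entries:
        perms, *users = entry.split(",")
        for user in users or ["*"]:
            access.setdefault(user, []).append(perms)
    # normalize the url-path so that "" becomes "/" and "inc" becomes "/inc"
    return src, "/" + dst.strip("/"), access


src, dst, access = parse_volume("/mnt/incoming:inc:w,u1:rm,u2")
print(src, dst, access)
# /mnt/incoming /inc {'u1': ['w'], 'u2': ['rm']}
```

Keeping the permissions per user as a list of strings leaves room for one account to appear in several entries without overwriting earlier grants.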

|

||||

|

||||

|

||||

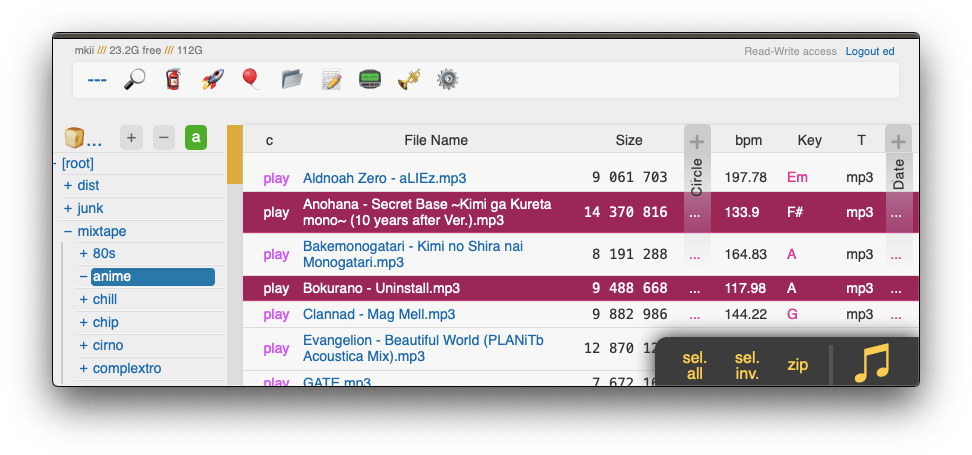

# the browser

|

||||

|

||||

|

||||

accessing a copyparty server using a web-browser

|

||||

|

||||

|

||||

|

||||

|

||||

## tabs

|

||||

|

||||

* `[🔎]` search by size, date, path/name, mp3-tags ... see [searching](#searching)

|

||||

* `[🚀]` and `[🎈]` are the uploaders, see [uploading](#uploading)

|

||||

* `[📂]` mkdir, create directories

|

||||

* `[📝]` new-md, create a new markdown document

|

||||

* `[📟]` send-msg, either to server-log or into textfiles if `--urlform save`

|

||||

the main tabs in the ui

|

||||

* `[🔎]` [search](#searching) by size, date, path/name, mp3-tags ...

|

||||

* `[🧯]` [unpost](#unpost): undo/delete accidental uploads

|

||||

* `[🚀]` and `[🎈]` are the [uploaders](#uploading)

|

||||

* `[📂]` mkdir: create directories

|

||||

* `[📝]` new-md: create a new markdown document

|

||||

* `[📟]` send-msg: either to server-log or into textfiles if `--urlform save`

|

||||

* `[🎺]` audio-player config options

|

||||

* `[⚙️]` general client config options

|

||||

|

||||

|

||||

## hotkeys

|

||||

|

||||

the browser has the following hotkeys (assumes qwerty, ignores actual layout)

|

||||

* `B` toggle breadcrumbs / directory tree

|

||||

the browser has the following hotkeys (always qwerty)

|

||||

* `B` toggle breadcrumbs / [navpane](#navpane)

|

||||

* `I/K` prev/next folder

|

||||

* `M` parent folder (or unexpand current)

|

||||

* `G` toggle list / grid view

|

||||

* `G` toggle list / [grid view](#thumbnails)

|

||||

* `T` toggle thumbnails / icons

|

||||

* `ctrl-X` cut selected files/folders

|

||||

* `ctrl-V` paste

|

||||

* `F2` [rename](#batch-rename) selected file/folder

|

||||

* when a file/folder is selected (in not-grid-view):

|

||||

* `Up/Down` move cursor

|

||||

* shift+`Up/Down` select and move cursor

|

||||

* ctrl+`Up/Down` move cursor and scroll viewport

|

||||

* `Space` toggle file selection

|

||||

* `Ctrl-A` toggle select all

|

||||

* when playing audio:

|

||||

* `J/L` prev/next song

|

||||

* `U/O` skip 10sec back/forward

|

||||

@@ -212,17 +265,19 @@ the browser has the following hotkeys (assumes qwerty, ignores actual layout)

|

||||

* when viewing images / playing videos:

|

||||

* `J/L, Left/Right` prev/next file

|

||||

* `Home/End` first/last file

|

||||

* `S` toggle selection

|

||||

* `R` rotate clockwise (shift=ccw)

|

||||

* `Esc` close viewer

|

||||

* videos:

|

||||

* `U/O` skip 10sec back/forward

|

||||

* `P/K/Space` play/pause

|

||||

* `F` fullscreen

|

||||

* `C` continue playing next video

|

||||

* `R` loop

|

||||

* `V` loop

|

||||

* `M` mute

|

||||

* when tree-sidebar is open:

|

||||

* when the navpane is open:

|

||||

* `A/D` adjust tree width

|

||||

* in the grid view:

|

||||

* in the [grid view](#thumbnails):

|

||||

* `S` toggle multiselect

|

||||

* shift+`A/D` zoom

|

||||

* in the markdown editor:

|

||||

@@ -233,16 +288,23 @@ the browser has the following hotkeys (assumes qwerty, ignores actual layout)

|

||||

* `^e` toggle editor / preview

|

||||

* `^up, ^down` jump paragraphs

|

||||

|

||||

## tree-mode

|

||||

|

||||

by default there's a breadcrumbs path; you can replace this with a tree-browser sidebar thing by clicking the `🌲` or pressing the `B` hotkey

|

||||

## navpane

|

||||

|

||||

click `[-]` and `[+]` (or hotkeys `A`/`D`) to adjust the size, and the `[a]` toggles if the tree should widen dynamically as you go deeper or stay fixed-size

|

||||

switching between breadcrumbs or navpane

|

||||

|

||||

click the `🌲` or press the `B` hotkey to toggle between the breadcrumbs path (default) and a navpane (tree-browser sidebar thing)

|

||||

|

||||

* `[-]` and `[+]` (or hotkeys `A`/`D`) adjust the size

|

||||

* `[v]` jumps to the currently open folder

|

||||

* `[a]` toggles automatic widening as you go deeper

|

||||

|

||||

|

||||

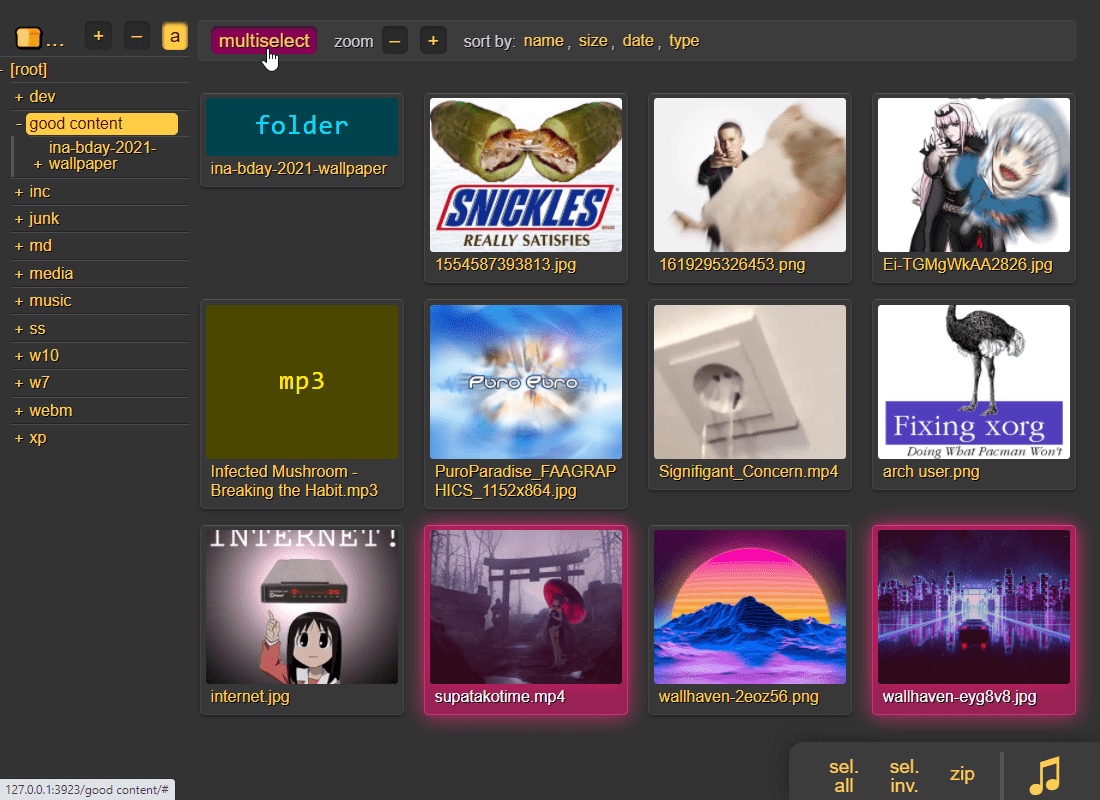

## thumbnails

|

||||

|

||||

|

||||

press `g` to toggle grid-view instead of the file listing, and `t` toggles icons / thumbnails

|

||||

|

||||

|

||||

|

||||

it does static images with Pillow and uses FFmpeg for video files, so you may want to `--no-thumb` or maybe just `--no-vthumb` depending on how dangerous your users are

|

||||

|

||||

@@ -253,7 +315,9 @@ in the grid/thumbnail view, if the audio player panel is open, songs will start

|

||||

|

||||

## zip downloads

|

||||

|

||||

the `zip` link next to folders can produce various types of zip/tar files using these alternatives in the browser settings tab:

|

||||

download folders (or file selections) as `zip` or `tar` files

|

||||

|

||||

select which type of archive you want in the `[⚙️] config` tab:

|

||||

|

||||

| name | url-suffix | description |

|

||||

|--|--|--|

|

||||

@@ -270,13 +334,18 @@ the `zip` link next to folders can produce various types of zip/tar files using

|

||||

|

||||

you can also zip a selection of files or folders by clicking them in the browser, that brings up a selection editor and zip button in the bottom right

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

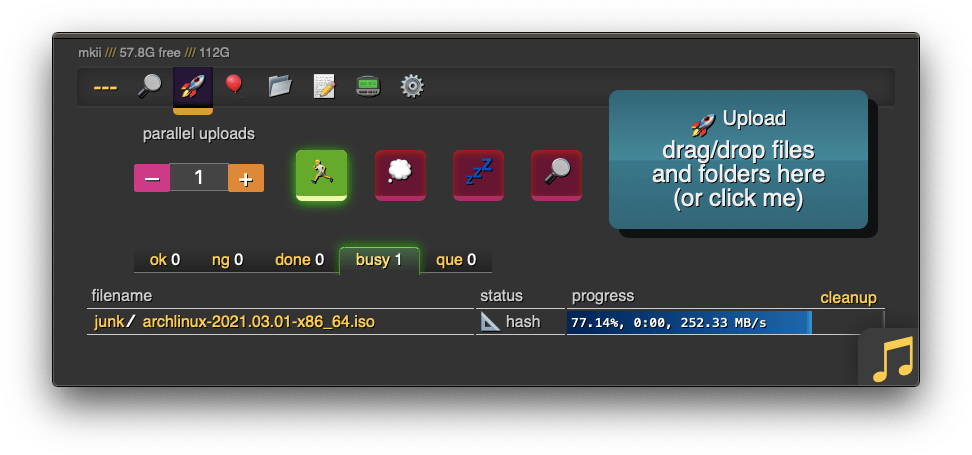

## uploading

|

||||

|

||||

two upload methods are available in the html client:

|

||||

* `🎈 bup`, the basic uploader, supports almost every browser since netscape 4.0

|

||||

* `🚀 up2k`, the fancy one

|

||||

drag files/folders into the web-browser to upload

|

||||

|

||||

this initiates an upload using `up2k`; there are two uploaders available:

|

||||

* `[🎈] bup`, the basic uploader, supports almost every browser since netscape 4.0

|

||||

* `[🚀] up2k`, the fancy one

|

||||

|

||||

you can also undo/delete uploads by using `[🧯]` [unpost](#unpost)

|

||||

|

||||

up2k has several advantages:

|

||||

* you can drop folders into the browser (files are added recursively)

|

||||

@@ -290,43 +359,126 @@ up2k has several advantages:

|

||||

|

||||

see [up2k](#up2k) for details on how it works

|

||||

|

||||

|

||||

|

||||

|

||||

**protip:** you can avoid scaring away users with [docs/minimal-up2k.html](docs/minimal-up2k.html) which makes it look [much simpler](https://user-images.githubusercontent.com/241032/118311195-dd6ca380-b4ef-11eb-86f3-75a3ff2e1332.png)

|

||||

|

||||

the up2k UI is the epitome of polished intuitive experiences:

|

||||

* "parallel uploads" specifies how many chunks to upload at the same time

|

||||

* `[🏃]` analysis of other files should continue while one is uploading

|

||||

* `[💭]` ask for confirmation before files are added to the list

|

||||

* `[💤]` sync uploading between other copyparty tabs so only one is active

|

||||

* `[🔎]` switch between upload and file-search mode

|

||||

* `[💭]` ask for confirmation before files are added to the queue

|

||||

* `[💤]` sync uploading between other copyparty browser-tabs so only one is active

|

||||

* `[🔎]` switch between upload and [file-search](#file-search) mode

|

||||

* ignore `[🔎]` if you add files by dragging them into the browser

|

||||

|

||||

and then there's the tabs below it,

|

||||

* `[ok]` is uploads which completed successfully

|

||||

* `[ng]` is the uploads which failed / got rejected (already exists, ...)

|

||||

* `[ok]` is the files which completed successfully

|

||||

* `[ng]` is the ones that failed / got rejected (already exists, ...)

|

||||

* `[done]` shows a combined list of `[ok]` and `[ng]`, chronological order

|

||||

* `[busy]` files which are currently hashing, pending-upload, or uploading

|

||||

* plus up to 3 entries each from `[done]` and `[que]` for context

|

||||

* `[que]` is all the files that are still queued

|

||||

|

||||

note that since up2k has to read each file twice, `[🎈 bup]` can *theoretically* be up to 2x faster in some extreme cases (files bigger than your ram, combined with an internet connection faster than the read-speed of your HDD)

|

||||

|

||||

if you are resuming a massive upload and want to skip hashing the files which already finished, you can enable `turbo` in the `[⚙️] config` tab, but please read the tooltip on that button

|

||||

|

||||

|

||||

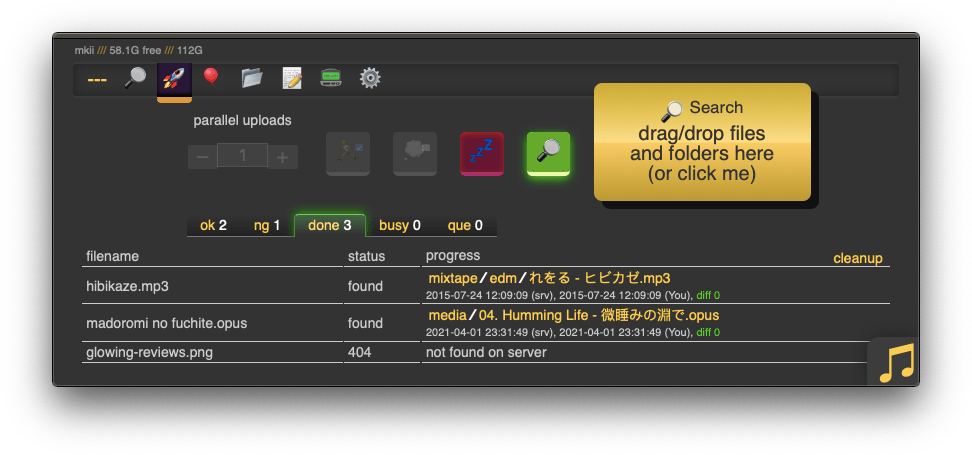

### file-search

|

||||

|

||||

|

||||

dropping files into the browser also lets you see if they exist on the server

|

||||

|

||||

in the `[🚀 up2k]` tab, after toggling the `[🔎]` switch green, any files/folders you drop onto the dropzone will be hashed on the client-side. Each hash is sent to the server which checks if that file exists somewhere already

|

||||

|

||||

|

||||

when you drag/drop files into the browser, you will see two dropzones: `Upload` and `Search`

|

||||

|

||||

> on a phone? toggle the `[🔎]` switch green before tapping the big yellow Search button to select your files

|

||||

|

||||

the files will be hashed on the client-side, and each hash is sent to the server, which checks if that file exists somewhere

|

||||

|

||||

files go into `[ok]` if they exist (and you get a link to where it is), otherwise they land in `[ng]`

|

||||

* the main reason filesearch is combined with the uploader is cause the code was too spaghetti to separate it out somewhere else, this is no longer the case but now i've warmed up to the idea too much

|

||||

|

||||

adding the same file multiple times is blocked, so if you first search for a file and then decide to upload it, you have to click the `[cleanup]` button to discard `[done]` files (or just refresh the page)

|

||||

|

||||

note that since up2k has to read the file twice, `[🎈 bup]` can be up to 2x faster in extreme cases (if your internet connection is faster than the read-speed of your HDD)

|

||||

|

||||

up2k has saved a few uploads from becoming corrupted in-transfer already; caught an android phone on wifi redhanded in wireshark with a bitflip, however bup with https would *probably* have noticed as well (thanks to tls also functioning as an integrity check)

|

||||

### unpost

|

||||

|

||||

undo/delete accidental uploads

|

||||

|

||||

|

||||

|

||||

you can unpost even if you don't have regular move/delete access, however only for files uploaded within the past `--unpost` seconds (default 12 hours) and the server must be running with `-e2d`

|

||||

|

||||

|

||||

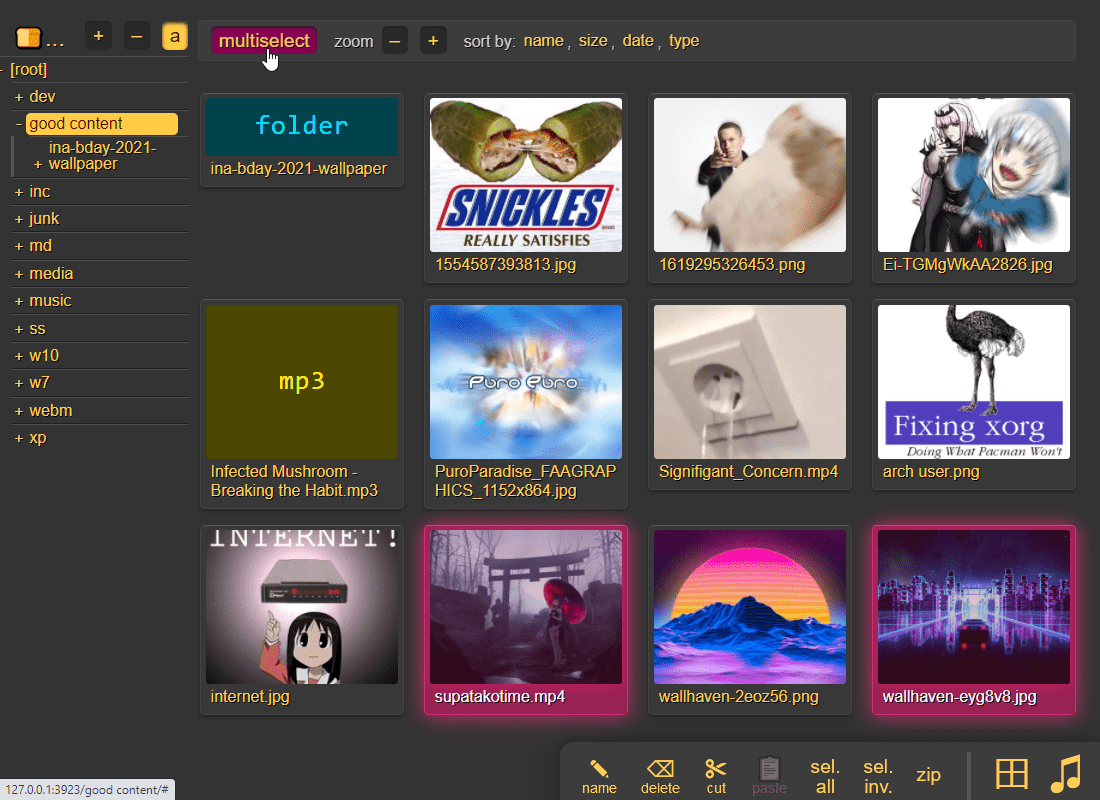

## file manager

|

||||

|

||||

cut/paste, rename, and delete files/folders (if you have permission)

|

||||

|

||||

file selection: click somewhere on the line (not the link itself), then:

|

||||

* `space` to toggle

|

||||

* `up/down` to move

|

||||

* `shift-up/down` to move-and-select

|

||||

* `ctrl-shift-up/down` to also scroll

|

||||

|

||||

* cut: select some files and `ctrl-x`

|

||||

* paste: `ctrl-v` in another folder

|

||||

* rename: `F2`

|

||||

|

||||

you can move files across browser tabs (cut in one tab, paste in another)

|

||||

|

||||

|

||||

## batch rename

|

||||

|

||||

select some files and press `F2` to bring up the rename UI

|

||||

|

||||

|

||||

|

||||

quick explanation of the buttons,

|

||||

* `[✅ apply rename]` confirms and begins renaming

|

||||

* `[❌ cancel]` aborts and closes the rename window

|

||||

* `[↺ reset]` reverts any filename changes back to the original name

|

||||

* `[decode]` does a URL-decode on the filename, fixing stuff like `&amp;` and `%20`

|

||||

* `[advanced]` toggles advanced mode

|

||||

|

||||

advanced mode: rename files using rules which build the new names from the original name (regex), from the tags collected from the file (artist/title/...), or from a mix of both

|

||||

|

||||

in advanced mode,

|

||||

* `[case]` toggles case-sensitive regex

|

||||

* `regex` is the regex pattern to apply to the original filename; any files which don't match will be skipped

|

||||

* `format` is the new filename, taking values from regex capturing groups and/or from file tags

|

||||

* very loosely based on foobar2000 syntax

|

||||

* `presets` lets you save rename rules for later

|

||||

|

||||

available functions:

|

||||

* `$lpad(text, length, pad_char)`

|

||||

* `$rpad(text, length, pad_char)`

|

||||

|

||||

so,

|

||||

|

||||

say you have a file named [`meganeko - Eclipse - 07 Sirius A.mp3`](https://www.youtube.com/watch?v=-dtb0vDPruI) (absolutely fantastic album btw) and the tags are: `Album:Eclipse`, `Artist:meganeko`, `Title:Sirius A`, `tn:7`

|

||||

|

||||

you could use just regex to rename it:

|

||||

* `regex` = `(.*) - (.*) - ([0-9]{2}) (.*)`

|

||||

* `format` = `(3). (1) - (4)`

|

||||

* `output` = `07. meganeko - Sirius A.mp3`

|

||||

|

||||

or you could use just tags:

|

||||

* `format` = `$lpad((tn),2,0). (artist) - (title).(ext)`

|

||||

* `output` = `07. meganeko - Sirius A.mp3`

|

||||

|

||||

or a mix of both:

|

||||

* `regex` = ` - ([0-9]{2}) `

|

||||

* `format` = `(1). (artist) - (title).(ext)`

|

||||

* `output` = `07. meganeko - Sirius A.mp3`
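the regex and tag substitution used in the examples above can be approximated in a few lines of python. This is only a rough sketch for illustration, not copyparty's actual code: the helper names `lpad` and `rename` are made up, and only the `(N)` / `(tag)` placeholders and `$lpad` from this section are handled.

```python
import re


def lpad(text, length, pad_char):
    """Pad text on the left with pad_char until it is `length` characters long."""
    return str(text).rjust(int(length), str(pad_char))


def rename(name, fmt, tags=None, regex=None):
    """Expand fmt using regex capture groups, e.g. (1), and tags, e.g. (artist).

    Hypothetical helper; returns None when the regex does not match,
    mirroring the rule that non-matching files are skipped.
    """
    values = {k.lower(): str(v) for k, v in (tags or {}).items()}
    values["ext"] = name.rsplit(".", 1)[-1]
    if regex:
        match = re.search(regex, name)
        if not match:
            return None
        for idx, group in enumerate(match.groups(), 1):
            values[str(idx)] = group

    # replace (key) placeholders; unknown keys are left untouched
    out = re.sub(
        r"\(([^()]+)\)",
        lambda m: values.get(m.group(1).lower(), m.group(0)),
        fmt,
    )
    # then evaluate $lpad(text,length,pad_char)
    out = re.sub(
        r"\$lpad\(([^,()]*),([^,()]*),([^,()]*)\)",
        lambda m: lpad(m.group(1).strip(), m.group(2).strip(), m.group(3).strip()),
        out,
    )
    return out
```

for example, `rename("meganeko - Eclipse - 07 Sirius A.mp3", "(3). (1) - (4)", regex=r"(.*) - (.*) - ([0-9]{2}) (.*)")` gives `07. meganeko - Sirius A.mp3`, the same as the regex-only example.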

|

||||

|

||||

the metadata keys you can use in the format field are the ones in the file-browser table header (whatever is collected with `-mte` and `-mtp`)

|

||||

|

||||

|

||||

## markdown viewer

|

||||

|

||||

and there are *two* editors

|

||||

|

||||

|

||||

|

||||

* the document preview has a max-width which is the same as an A4 paper when printed

|

||||

@@ -338,10 +490,18 @@ up2k has saved a few uploads from becoming corrupted in-transfer already; caught

|

||||

|

||||

* if you are using media hotkeys to switch songs and are getting tired of seeing the OSD popup which Windows doesn't let you disable, consider https://ocv.me/dev/?media-osd-bgone.ps1

|

||||

|

||||

* click the bottom-left `π` to open a javascript prompt for debugging

|

||||

|

||||

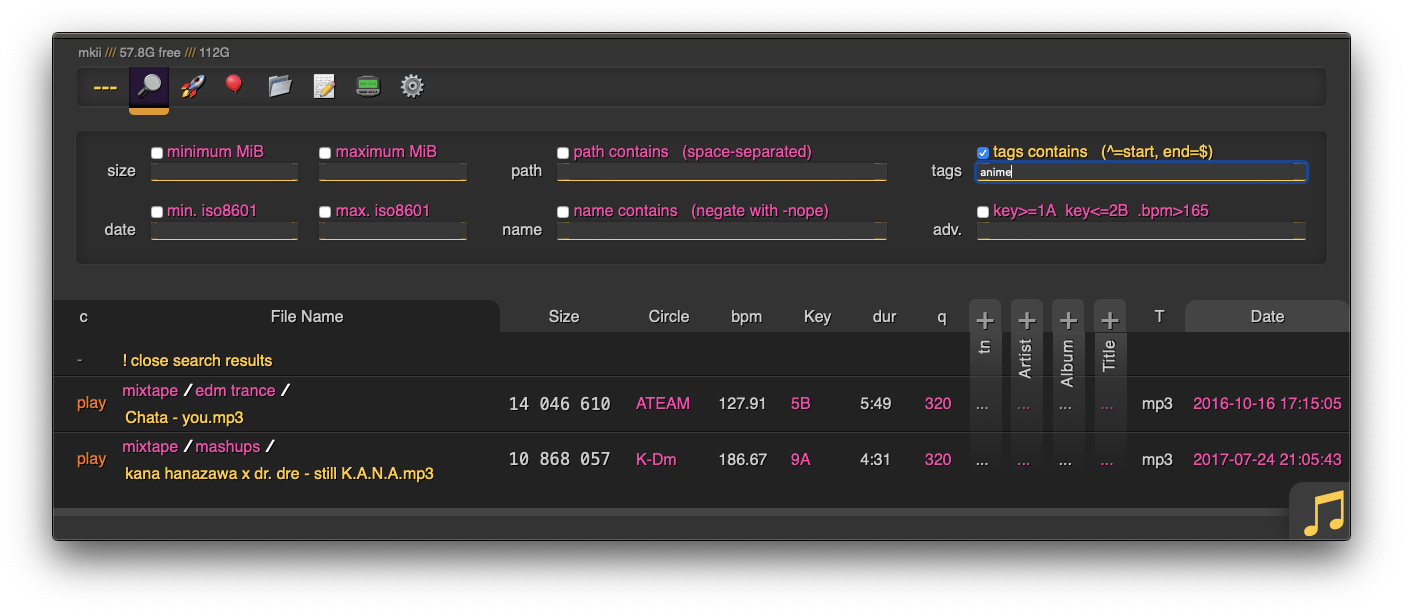

# searching

|

||||

* files named `.prologue.html` / `.epilogue.html` will be rendered before/after directory listings unless `--no-logues`

|

||||

|

||||

|

||||

* files named `README.md` / `readme.md` will be rendered after directory listings unless `--no-readme` (but `.epilogue.html` takes precedence)

|

||||

|

||||

|

||||

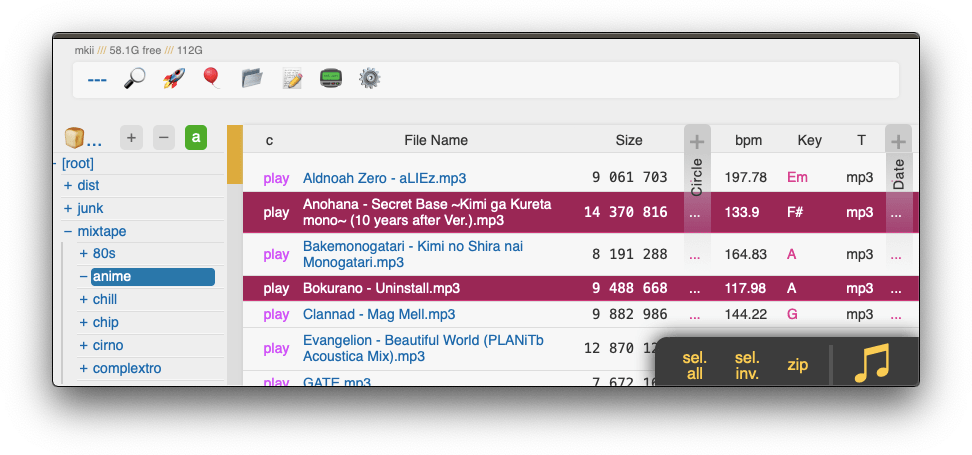

## searching

|

||||

|

||||

search by size, date, path/name, mp3-tags, ...

|

||||

|

||||

|

||||

|

||||

when started with `-e2dsa` copyparty will scan/index all your files. This avoids duplicates on upload, and also makes the volumes searchable through the web-ui:

|

||||

* make search queries by `size`/`date`/`directory-path`/`filename`, or...

|

||||

@@ -351,44 +511,89 @@ path/name queries are space-separated, AND'ed together, and words are negated wi

|

||||

* path: `shibayan -bossa` finds all files where one of the folders contains `shibayan` but filters out any results where `bossa` exists somewhere in the path

|

||||

* name: `demetori styx` gives you [good stuff](https://www.youtube.com/watch?v=zGh0g14ZJ8I&list=PL3A147BD151EE5218&index=9)
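the matching rule behind the two examples above (every word must be present, words prefixed with `-` must be absent) could look roughly like this. It is a sketch only: `matches` is a made-up name, and case-insensitive substring matching is an assumption, not something taken from the copyparty source.

```python
def matches(query, text):
    """Return True if all plain words occur in text and no `-word` does.

    Assumption: comparison is a case-insensitive substring test.
    """
    text = text.lower()
    for word in query.lower().split():
        if word.startswith("-") and len(word) > 1:
            # negated word: reject if it appears anywhere
            if word[1:] in text:
                return False
        elif word not in text:
            # plain word: all of them are AND'ed together
            return False
    return True
```

so `matches("shibayan -bossa", "music/shibayan/bossa-nova/01.flac")` is `False`, while the same query against `music/shibayan/other/01.flac` is `True`.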

|

||||

|

||||

add `-e2ts` to also scan/index tags from music files:

|

||||

add the argument `-e2ts` to also scan/index tags from music files, which brings us over to:

|

||||

|

||||

|

||||

## search configuration

|

||||

# server config

|

||||

|

||||

searching relies on two databases, the up2k filetree (`-e2d`) and the metadata tags (`-e2t`). Configuration can be done through arguments, volume flags, or a mix of both.

|

||||

## file indexing

|

||||

|

||||

file indexing relies on two database tables, the up2k filetree (`-e2d`) and the metadata tags (`-e2t`), stored in `.hist/up2k.db`. Configuration can be done through arguments, volume flags, or a mix of both.

|

||||

|

||||

through arguments:

|

||||

* `-e2d` enables file indexing on upload

|

||||

* `-e2ds` scans writable folders for new files on startup

|

||||

* `-e2dsa` scans all mounted volumes (including readonly ones)

|

||||

* `-e2ds` also scans writable folders for new files on startup

|

||||

* `-e2dsa` also scans all mounted volumes (including readonly ones)

|

||||

* `-e2t` enables metadata indexing on upload

|

||||

* `-e2ts` scans for tags in all files that don't have tags yet

|

||||

* `-e2tsr` deletes all existing tags, does a full reindex

|

||||

* `-e2ts` also scans for tags in all files that don't have tags yet

|

||||

* `-e2tsr` also deletes all existing tags, doing a full reindex

|

||||

|

||||

the same arguments can be set as volume flags, in addition to `d2d` and `d2t` for disabling:

|

||||

* `-v ~/music::r:ce2dsa:ce2tsr` does a full reindex of everything on startup

|

||||

* `-v ~/music::r:cd2d` disables **all** indexing, even if any `-e2*` are on

|

||||

* `-v ~/music::r:cd2t` disables all `-e2t*` (tags), does not affect `-e2d*`

|

||||

* `-v ~/music::r:c,e2dsa:c,e2tsr` does a full reindex of everything on startup

|

||||

* `-v ~/music::r:c,d2d` disables **all** indexing, even if any `-e2*` are on

|

||||

* `-v ~/music::r:c,d2t` disables all `-e2t*` (tags), does not affect `-e2d*`

|

||||

|

||||

note:

|

||||

* the parser currently can't handle `c,e2dsa,e2tsr` so you have to `c,e2dsa:c,e2tsr`

|

||||

* `e2tsr` is probably always overkill, since `e2ds`/`e2dsa` would pick up any file modifications and `e2ts` would then reindex those, unless there is a new copyparty version with new parsers and the release note says otherwise

|

||||

* the rescan button in the admin panel has no effect unless the volume has `-e2ds` or higher
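if you generate the `-v` arguments from a script, the parser limitation mentioned above means each volume flag needs its own `:c,` group. A tiny sketch, assuming a made-up helper named `volume_arg` and the same `path::perms` layout as in the examples above:

```python
def volume_arg(path, perms, flags):
    """Build a `-v` value such as `~/music::r:c,e2dsa:c,e2tsr`.

    Hypothetical helper: one `:c,` group is emitted per flag because the
    notes above say `c,e2dsa,e2tsr` is not understood by the parser.
    """
    # the part between the two colons is left empty, as in the examples above
    return f"{path}::{perms}" + "".join(f":c,{flag}" for flag in flags)
```

`volume_arg("~/music", "r", ["e2dsa", "e2tsr"])` returns `~/music::r:c,e2dsa:c,e2tsr`.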

|

||||

|

||||

you can choose to only index filename/path/size/last-modified (and not the hash of the file contents) by setting `--no-hash` or the volume-flag `cdhash`, this has the following consequences:

|

||||

to save some time, you can choose to only index filename/path/size/last-modified (and not the hash of the file contents) by setting `--no-hash` or the volume-flag `:c,dhash`, this has the following consequences:

|

||||

* initial indexing is way faster, especially when the volume is on a network disk

|

||||

* makes it impossible to [file-search](#file-search)

|

||||

* if someone uploads the same file contents, the upload will not be detected as a dupe, so it will not get symlinked or rejected

|

||||

|

||||

if you set `--no-hash`, you can enable hashing for specific volumes using flag `cehash`

|

||||

if you set `--no-hash`, you can enable hashing for specific volumes using flag `:c,ehash`

|

||||

|

||||

|

||||

## upload rules

|

||||

|

||||

set upload rules using volume flags, some examples:

|

||||

|

||||

* `:c,sz=1k-3m` sets allowed filesize between 1 KiB and 3 MiB inclusive (suffixes: b, k, m, g)

|

||||

* `:c,nosub` disallow uploading into subdirectories; goes well with `rotn` and `rotf`:

|

||||

* `:c,rotn=1000,2` moves uploads into subfolders, up to 1000 files in each folder before making a new one, two levels deep (must be at least 1)

|

||||

* `:c,rotf=%Y/%m/%d/%H` forces files to be uploaded into a structure of subfolders according to that date format

|

||||

* if someone uploads to `/foo/bar` the path would be rewritten to `/foo/bar/2021/08/06/23` for example

|

||||

* but the actual value is not verified, just the structure, so the uploader can choose any values which conform to the format string

|

||||

* just to avoid additional complexity in up2k which is enough of a mess already

|

||||

* `:c,lifetime=300` delete uploaded files when they become 5 minutes old
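to make the `sz` and `rotf` rules above a bit more concrete, here is a small sketch of how they could be evaluated. The function names are made up and this is not copyparty's implementation; treating a bare number as bytes is an assumption.

```python
import time

# suffixes listed above; each step is a factor of 1024 (KiB, MiB, GiB)
UNITS = {"b": 1, "k": 1024, "m": 1024**2, "g": 1024**3}


def parse_size(text):
    """Turn `1k` or `3m` into a byte count (bare numbers assumed to be bytes)."""
    text = text.strip().lower()
    if text and text[-1] in UNITS:
        return int(text[:-1]) * UNITS[text[-1]]
    return int(text)


def size_allowed(rule, nbytes):
    """Check a filesize against a rule like `1k-3m`, both ends inclusive."""
    lo, hi = (parse_size(part) for part in rule.split("-"))
    return lo <= nbytes <= hi


def rotf_path(base, fmt, when):
    """Append the date-formatted subfolders to the upload path."""
    return base.rstrip("/") + "/" + time.strftime(fmt, when)
```

`size_allowed("1k-3m", 1024)` is `True` and `size_allowed("1k-3m", 1023)` is `False`; `rotf_path("/foo/bar", "%Y/%m/%d/%H", time.gmtime())` produces a path shaped like the `/foo/bar/2021/08/06/23` example (whether the server uses UTC or local time is not covered here).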

|

||||

|

||||

you can also set transaction limits which apply per-IP and per-volume; these assume `-j 1` (default), otherwise the limits will be off. For example, `-j 4` would allow anywhere between 1x and 4x the limits you set, depending on which processing node the client gets routed to

|

||||

|

||||

* `:c,maxn=250,3600` allows 250 files over 1 hour from each IP (tracked per-volume)

|

||||

* `:c,maxb=1g,300` allows 1 GiB total over 5 minutes from each IP (tracked per-volume)
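a limit like `maxn=250,3600` can be pictured as a sliding window of timestamps per IP. The sketch below is an illustration under that assumption (class and method names are made up); it also hints at why several processes would throw the numbers off, since each process would keep its own counters.

```python
import time
from collections import defaultdict, deque


class UploadLimiter:
    """Allow at most max_files uploads per IP within window_sec seconds."""

    def __init__(self, max_files, window_sec):
        self.max_files = max_files
        self.window_sec = window_sec
        # ip -> timestamps of accepted uploads; lives in this process only
        self.seen = defaultdict(deque)

    def allow(self, ip, now=None):
        now = time.time() if now is None else now
        stamps = self.seen[ip]
        # forget uploads that have fallen out of the window
        while stamps and now - stamps[0] >= self.window_sec:
            stamps.popleft()
        if len(stamps) >= self.max_files:
            return False
        stamps.append(now)
        return True
```

`UploadLimiter(250, 3600)` would correspond to the `maxn` example; a `maxb` variant would store byte counts next to the timestamps and compare their sum instead.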

|

||||

|

||||

|

||||

## compress uploads

|

||||

|

||||

files can be autocompressed on upload, either on user-request (if config allows) or forced by server-config

|

||||

|

||||

* volume flag `gz` allows gz compression

|

||||

* volume flag `xz` allows lzma compression

|

||||

* volume flag `pk` **forces** compression on all files

|

||||

* url parameter `pk` requests compression with server-default algorithm

|

||||

* url parameter `gz` or `xz` requests compression with a specific algorithm

|

||||

* url parameter `xz` requests xz compression

|

||||

|

||||

things to note,

|

||||

* the `gz` and `xz` arguments take a single optional argument, the compression level (range 0 to 9)

|

||||

* the `pk` volume flag takes the optional argument `ALGORITHM,LEVEL` which will then be forced for all uploads, for example `gz,9` or `xz,0`

|

||||

* default compression is gzip level 9

|

||||

* all upload methods except up2k are supported

|

||||

* the files will be indexed after compression, so dupe-detection and file-search will not work as expected
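the last point is easy to see with python's own `gzip` and `lzma` modules: the compressed bytes hash differently from the original, so a hash of the stored file no longer matches the file the uploader has. This is a standalone sketch using the standard library, with made-up helper names, not the server's code path.

```python
import gzip
import hashlib
import lzma


def pack(data, algorithm="gz", level=9):
    """Compress bytes with gzip or xz at the given level (0 to 9)."""
    if algorithm == "gz":
        return gzip.compress(data, compresslevel=level)
    if algorithm == "xz":
        return lzma.compress(data, format=lzma.FORMAT_XZ, preset=level)
    raise ValueError("unknown algorithm: " + algorithm)


def unpack(blob, algorithm="gz"):
    """Reverse of pack()."""
    if algorithm == "gz":
        return gzip.decompress(blob)
    if algorithm == "xz":
        return lzma.decompress(blob, format=lzma.FORMAT_XZ)
    raise ValueError("unknown algorithm: " + algorithm)


def fingerprint(data):
    """Hex sha512 of some bytes, used here only to compare contents."""
    return hashlib.sha512(data).hexdigest()
```

`fingerprint(pack(data))` differs from `fingerprint(data)`, which is why dupe-detection and file-search cannot recognize the original file once it has been compressed.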

|

||||

|

||||

some examples,

|

||||

|

||||

|

||||

## database location

|

||||

|

||||

in-volume (`.hist/up2k.db`, default) or somewhere else

|

||||

|

||||

copyparty creates a subfolder named `.hist` inside each volume where it stores the database, thumbnails, and some other stuff

|

||||

|

||||

this can instead be kept in a single place using the `--hist` argument, or the `hist=` volume flag, or a mix of both:

|

||||

* `--hist ~/.cache/copyparty -v ~/music::r:chist=-` sets `~/.cache/copyparty` as the default place to put volume info, but `~/music` gets the regular `.hist` subfolder (`-` restores default behavior)

|

||||

* `--hist ~/.cache/copyparty -v ~/music::r:c,hist=-` sets `~/.cache/copyparty` as the default place to put volume info, but `~/music` gets the regular `.hist` subfolder (`-` restores default behavior)

|

||||

|

||||

note:

|

||||

* markdown edits are always stored in a local `.hist` subdirectory

|

||||

@@ -398,16 +603,20 @@ note:

|

||||

|

||||

## metadata from audio files

|

||||

|

||||

set `-e2t` to index tags on upload

|

||||

|

||||

`-mte` decides which tags to index and display in the browser (and also the display order), this can be changed per-volume:

|

||||

* `-v ~/music::r:cmte=title,artist` indexes and displays *title* followed by *artist*

|

||||

* `-v ~/music::r:c,mte=title,artist` indexes and displays *title* followed by *artist*

|

||||

|

||||

if you add/remove a tag from `mte` you will need to run with `-e2tsr` once to rebuild the database, otherwise only new files will be affected

|

||||

|

||||

but instead of using `-mte`, `-mth` is a better way to hide tags in the browser: these tags will not be displayed by default, but they still get indexed and become searchable, and users can choose to unhide them in the `[⚙️] config` pane

|

||||

|

||||

`-mtm` can be used to add or redefine a metadata mapping, say you have media files with `foo` and `bar` tags and you want them to display as `qux` in the browser (preferring `foo` if both are present), then do `-mtm qux=foo,bar` and now you can `-mte artist,title,qux`
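the "preferring `foo` if both are present" rule can be sketched like this; the function names are made up and the parsing is deliberately minimal, so treat it as an illustration of the mapping idea rather than the real `-mtm` handling.

```python
def parse_mtm(arg):
    """Split `qux=foo,bar` into ("qux", ["foo", "bar"])."""
    name, _, sources = arg.partition("=")
    return name, sources.split(",")


def apply_mapping(raw_tags, mapping):
    """Map raw file tags to display names, first listed source wins.

    mapping: display name -> list of source tags in order of preference
    """
    out = {}
    for name, sources in mapping.items():
        for src in sources:
            if src in raw_tags:
                out[name] = raw_tags[src]
                break
    return out
```

with `mapping = dict([parse_mtm("qux=foo,bar")])`, a file tagged `{"foo": "a", "bar": "b"}` ends up as `{"qux": "a"}`, and a file with only `bar` ends up as `{"qux": "b"}`.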

|

||||

|

||||

tags that start with a `.` such as `.bpm` and `.dur`(ation) indicate a numeric value

|

||||

|

||||

see the beautiful mess of a dictionary in [mtag.py](https://github.com/9001/copyparty/blob/master/copyparty/mtag.py) for the default mappings (should cover mp3,opus,flac,m4a,wav,aif,)

|

||||

see the beautiful mess of a dictionary in [mtag.py](https://github.com/9001/copyparty/blob/hovudstraum/copyparty/mtag.py) for the default mappings (should cover mp3,opus,flac,m4a,wav,aif,)

|

||||

|

||||

`--no-mutagen` disables Mutagen and uses FFprobe instead, which...

|

||||

* is about 20x slower than Mutagen

|

||||

@@ -419,11 +628,13 @@ see the beautiful mess of a dictionary in [mtag.py](https://github.com/9001/copy

|

||||

|

||||

## file parser plugins

|

||||

|

||||

provide custom parsers to index additional tags

|

||||

|

||||

copyparty can invoke external programs to collect additional metadata for files using `mtp` (either as argument or volume flag), there is a default timeout of 30sec

|

||||

|

||||

* `-mtp .bpm=~/bin/audio-bpm.py` will execute `~/bin/audio-bpm.py` with the audio file as argument 1 to provide the `.bpm` tag, if that does not exist in the audio metadata

|

||||

* `-mtp key=f,t5,~/bin/audio-key.py` uses `~/bin/audio-key.py` to get the `key` tag, replacing any existing metadata tag (`f,`), aborting if it takes longer than 5sec (`t5,`)

|

||||

* `-v ~/music::r:cmtp=.bpm=~/bin/audio-bpm.py:cmtp=key=f,t5,~/bin/audio-key.py` both as a per-volume config wow this is getting ugly

|

||||

* `-v ~/music::r:c,mtp=.bpm=~/bin/audio-bpm.py:c,mtp=key=f,t5,~/bin/audio-key.py` both as a per-volume config wow this is getting ugly
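the calling side of such a parser could be pictured as below: run the program with the file as argument 1 and give up after the timeout (30sec by default, 5sec for `t5`). This is a hedged sketch with a made-up function name; that the tag value is read from the program's stdout is an assumption here and not stated in this section.

```python
import subprocess


def run_parser(cmd, media_path, timeout=30):
    """Run an external parser with the file as argument 1.

    Returns the stripped stdout (assumed to carry the tag value),
    or None if the program times out, fails, or prints nothing.
    """
    try:
        proc = subprocess.run(
            list(cmd) + [media_path],
            capture_output=True,
            text=True,
            timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        return None
    if proc.returncode != 0:
        return None
    return proc.stdout.strip() or None
```

something like `run_parser(["/home/user/bin/audio-key.py"], "song.flac", timeout=5)` would then correspond to the `t5` example, with the script path being a placeholder.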

|

||||

|

||||

*but wait, there's more!* `-mtp` can be used for non-audio files as well using the `a` flag: `ay` only do audio files, `an` only do non-audio files, or `ad` do all files (d as in dontcare)

|

||||

|

||||

@@ -439,32 +650,38 @@ copyparty can invoke external programs to collect additional metadata for files

|

||||

|

||||

# browser support

|

||||

|

||||

TLDR: yes

|

||||

|

||||

|

||||

|

||||

`ie` = internet-explorer, `ff` = firefox, `c` = chrome, `iOS` = iPhone/iPad, `Andr` = Android

|

||||

|

||||

| feature         | ie6 | ie9 | ie10 | ie11 | ff 52 | c 49 | iOS  | Andr |
| --------------- | --- | --- | ---- | ---- | ----- | ---- | ---- | ---- |
| browse files    | yep | yep | yep  | yep  | yep   | yep  | yep  | yep  |
| basic uploader  | yep | yep | yep  | yep  | yep   | yep  | yep  | yep  |
| make directory  | yep | yep | yep  | yep  | yep   | yep  | yep  | yep  |
| send message    | yep | yep | yep  | yep  | yep   | yep  | yep  | yep  |
| set sort order  | -   | yep | yep  | yep  | yep   | yep  | yep  | yep  |
| zip selection   | -   | yep | yep  | yep  | yep   | yep  | yep  | yep  |
| directory tree  | -   | -   | `*1` | yep  | yep   | yep  | yep  | yep  |
| up2k            | -   | -   | yep  | yep  | yep   | yep  | yep  | yep  |
| markdown editor | -   | -   | yep  | yep  | yep   | yep  | yep  | yep  |
| markdown viewer | -   | -   | yep  | yep  | yep   | yep  | yep  | yep  |
| play mp3/m4a    | -   | yep | yep  | yep  | yep   | yep  | yep  | yep  |
| play ogg/opus   | -   | -   | -    | -    | yep   | yep  | `*2` | yep  |
| thumbnail view  | -   | -   | -    | -    | yep   | yep  | yep  | yep  |
| image viewer    | -   | -   | -    | -    | yep   | yep  | yep  | yep  |
| **= feature =** | ie6 | ie9 | ie10 | ie11 | ff 52 | c 49 | iOS  | Andr |

|

||||

| feature         | ie6 | ie9  | ie10 | ie11 | ff 52 | c 49 | iOS  | Andr |
| --------------- | --- | ---- | ---- | ---- | ----- | ---- | ---- | ---- |
| browse files    | yep | yep  | yep  | yep  | yep   | yep  | yep  | yep  |
| thumbnail view  | -   | yep  | yep  | yep  | yep   | yep  | yep  | yep  |
| basic uploader  | yep | yep  | yep  | yep  | yep   | yep  | yep  | yep  |
| up2k            | -   | -    | `*1` | `*1` | yep   | yep  | yep  | yep  |
| make directory  | yep | yep  | yep  | yep  | yep   | yep  | yep  | yep  |
| send message    | yep | yep  | yep  | yep  | yep   | yep  | yep  | yep  |
| set sort order  | -   | yep  | yep  | yep  | yep   | yep  | yep  | yep  |
| zip selection   | -   | yep  | yep  | yep  | yep   | yep  | yep  | yep  |
| file rename     | -   | yep  | yep  | yep  | yep   | yep  | yep  | yep  |
| file cut/paste  | -   | yep  | yep  | yep  | yep   | yep  | yep  | yep  |
| navpane         | -   | `*2` | yep  | yep  | yep   | yep  | yep  | yep  |
| image viewer    | -   | yep  | yep  | yep  | yep   | yep  | yep  | yep  |
| video player    | -   | yep  | yep  | yep  | yep   | yep  | yep  | yep  |
| markdown editor | -   | -    | yep  | yep  | yep   | yep  | yep  | yep  |
| markdown viewer | -   | -    | yep  | yep  | yep   | yep  | yep  | yep  |
| play mp3/m4a    | -   | yep  | yep  | yep  | yep   | yep  | yep  | yep  |
| play ogg/opus   | -   | -    | -    | -    | yep   | yep  | `*3` | yep  |
| **= feature =** | ie6 | ie9  | ie10 | ie11 | ff 52 | c 49 | iOS  | Andr |

|

||||

|

||||

* internet explorer 6 to 8 behave the same

|

||||

* firefox 52 and chrome 49 are the last winxp versions

|

||||

* `*1` only public folders (login session is dropped) and no history / back-button

|

||||

* `*2` using a wasm decoder which can sometimes get stuck and consumes a bit more power

|

||||

* firefox 52 and chrome 49 are the final winxp versions

|

||||

* `*1` yes, but extremely slow (ie10: `1 MiB/s`, ie11: `270 KiB/s`)

|

||||

* `*2` causes a full-page refresh on each navigation

|

||||

* `*3` using a wasm decoder which consumes a bit more power

|

||||

|

||||

quick summary of more eccentric web-browsers trying to view a directory index:

|

||||

|

||||

@@ -476,22 +693,25 @@ quick summary of more eccentric web-browsers trying to view a directory index:

|

||||

| **lynx** (2.8.9/macports) | can browse, login, upload/mkdir/msg |
| **w3m** (0.5.3/macports) | can browse, login, upload at 100kB/s, mkdir/msg |
| **netsurf** (3.10/arch) | is basically ie6 with much better css (javascript has almost no effect) |
| **opera** (11.60/winxp) | OK: thumbnails, image-viewer, zip-selection, rename/cut/paste. NG: up2k, navpane, markdown, audio |
| **ie4** and **netscape** 4.0 | can browse (text is yellow on white), upload with `?b=u` |
| **SerenityOS** (7e98457) | hits a page fault, works with `?b=u`, file upload not-impl |

|

||||

|

||||

|

||||

# client examples

|

||||

|

||||

interact with copyparty using non-browser clients

|

||||

|

||||

* javascript: dump some state into a file (two separate examples)

|

||||

* `await fetch('https://127.0.0.1:3923/', {method:"PUT", body: JSON.stringify(foo)});`

|

||||

* `var xhr = new XMLHttpRequest(); xhr.open('POST', 'https://127.0.0.1:3923/msgs?raw'); xhr.send('foo');`

|

||||

|

||||

* curl/wget: upload some files (post=file, chunk=stdin)

|

||||

* `post(){ curl -b cppwd=wark http://127.0.0.1:3923/ -F act=bput -F f=@"$1";}`

|

||||

* `post(){ curl -b cppwd=wark -F act=bput -F f=@"$1" http://127.0.0.1:3923/;}`

|

||||

`post movie.mkv`

|

||||

* `post(){ wget --header='Cookie: cppwd=wark' http://127.0.0.1:3923/?raw --post-file="$1" -O-;}`

|

||||

* `post(){ wget --header='Cookie: cppwd=wark' --post-file="$1" -O- http://127.0.0.1:3923/?raw;}`

|

||||

`post movie.mkv`

|

||||

* `chunk(){ curl -b cppwd=wark http://127.0.0.1:3923/ -T-;}`

|

||||

* `chunk(){ curl -b cppwd=wark -T- http://127.0.0.1:3923/;}`

|

||||

`chunk <movie.mkv`

|

||||

|

||||

* FUSE: mount a copyparty server as a local filesystem

|

||||

@@ -505,6 +725,8 @@ copyparty returns a truncated sha512sum of your PUT/POST as base64; you can gene

|

||||

b512(){ printf "$((sha512sum||shasum -a512)|sed -E 's/ .*//;s/(..)/\\x\1/g')"|base64|tr '+/' '-_'|head -c44;}

|

||||

b512 <movie.mkv
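for comparison, the same value can be computed in python. The sketch mirrors what the shell function above does (sha512, base64 with `+/` swapped for `-_`, first 44 characters); the function name is simply reused from the shell version.

```python
import base64
import hashlib


def b512(data):
    """Truncated sha512 of `data` as url-safe base64 (44 characters)."""
    digest = hashlib.sha512(data).digest()
    # urlsafe_b64encode uses `-` and `_` in place of `+` and `/`
    return base64.urlsafe_b64encode(digest).decode("ascii")[:44]
```

usage: `b512(open("movie.mkv", "rb").read())`; for files too large to hold in memory, feed the file to `hashlib.sha512()` in pieces with `update()` instead.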

|

||||

|

||||

you can provide passwords using the cookie `cppwd=hunter2`, as a url query `?pw=hunter2`, or with basic-authentication (either as the username or password)
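the three ways of passing a password could be prepared from python's standard library roughly as follows. It is a sketch which only builds the requests and sends nothing; the function names and the placeholder username `x` are made up for illustration.

```python
import base64
import urllib.parse
import urllib.request


def with_cookie(url, password):
    """Password in the `cppwd` cookie."""
    return urllib.request.Request(url, headers={"Cookie": "cppwd=" + password})


def with_query(url, password):
    """Password as the `pw` url query parameter."""
    sep = "&" if "?" in url else "?"
    return urllib.request.Request(url + sep + "pw=" + urllib.parse.quote(password))


def with_basic_auth(url, password, username="x"):
    """Password through basic-authentication (username is a placeholder)."""
    token = base64.b64encode(f"{username}:{password}".encode()).decode("ascii")
    return urllib.request.Request(url, headers={"Authorization": "Basic " + token})
```

each returned object can be handed to `urllib.request.urlopen()`; the cookie variant is the equivalent of `-b cppwd=...` in the curl examples.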

|

||||

|

||||

|

||||

# up2k

|

||||

|

||||

@@ -521,10 +743,25 @@ quick outline of the up2k protocol, see [uploading](#uploading) for the web-clie

|

||||

* server writes chunks into place based on the hash

|

||||

* client does another handshake with the hashlist; server replies with OK or a list of chunks to reupload
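the final handshake step can be sketched as follows: the client lists one hash per chunk, and the server answers with whichever of those it has not received yet. The chunk size, the use of full hex sha512 digests, and the function names are all assumptions made for this illustration, not details of the real protocol.

```python
import hashlib


def chunk_hashes(data, chunk_size):
    """Hash each fixed-size chunk of the file separately."""
    return [
        hashlib.sha512(data[pos : pos + chunk_size]).hexdigest()
        for pos in range(0, len(data), chunk_size)
    ]


def missing_chunks(hashlist, received):
    """Server side of the handshake: chunk hashes which still need uploading.

    An empty list plays the role of the OK reply.
    """
    return [h for h in hashlist if h not in received]
```

since every chunk is verified on its own, a corrupted chunk only costs a reupload of that chunk rather than the whole file.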

|

||||

|

||||

up2k has saved a few uploads from becoming corrupted in-transfer already; caught an android phone on wifi redhanded in wireshark with a bitflip, however bup with https would *probably* have noticed as well (thanks to tls also functioning as an integrity check)

|

||||

|

||||

|

||||

## why chunk-hashes

|

||||

|

||||

a single sha512 would be better, right?

|

||||

|

||||

this is due to `crypto.subtle` not providing a streaming api (or the option to seed the sha512 hasher with a starting hash)

|

||||

|

||||