mirror of

https://github.com/9001/copyparty.git

synced 2025-10-30 19:43:37 +00:00

Compare commits

172 Commits

| Author | SHA1 | Date | |
|---|---|---|---|
| | c4083a2942 | | |
| | 36c20bbe53 | | |
| | e34634f5af | | |
| | cba9e5b669 | | |
| | 1f3c46a6b0 | | |
| | 799a5ffa47 | | |
| | b000707c10 | | |
| | feba4de1d6 | | |
| | 951fdb27ca | | |
| | 9697fb3d84 | | |
| | 2dbed4500a | | |
| | fd9d0e433d | | |
| | f096f3ef81 | | |
| | cc4a063695 | | |
| | b64cabc3c9 | | |
| | 3dd460717c | | |
| | bf658a522b | | |
| | e9be7e712d | | |
| | e40cd2a809 | | |
| | dbabeb9692 | | |
| | 8dd37d76b0 | | |
| | fd475aa358 | | |
| | f0988c0e32 | | |
| | 0632f09bff | | |
| | ba599aaca0 | | |
| | ff05919e89 | | |
| | 52e63fa101 | | |
| | 96ceccd12a | | |
| | 87994fe006 | | |
| | fa12c81a03 | | |
| | 344ce63455 | | |
| | ec4daacf9e | | |
| | f3e8308718 | | |
| | 515ac5d941 | | |
| | 954c7e7e50 | | |
| | 67ff57f3a3 | | |
| | c10c70c1e5 | | |
| | 04592a98d2 | | |
| | c9c4aac6cf | | |
| | 8b2c7586ce | | |
| | 32e22dfe84 | | |
| | d70b885722 | | |
| | ac6c4b13f5 | | |
| | ececdad22d | | |
| | bf659781b0 | | |
| | 2c6bb195a4 | | |
| | c032cd08b3 | | |
| | 39e7a7a231 | | |
| | 6e14cd2c39 | | |
| | aab3baaea7 | | |
| | b8453c3b4f | | |
| | 6ce0e2cd5b | | |
| | 76beaae7f2 | | |
| | c1a7f9edbe | | |
| | b5f2fe2f0a | | |
| | 98a90d49cb | | |
| | f55e982cb5 | | |
| | 686c7defeb | | |
| | 0b1e483c53 | | |
| | 457d7df129 | | |
| | ce776a547c | | |
| | ded0567cbf | | |
| | c9cac83d09 | | |
| | 4fbe6b01a8 | | |
| | ee9585264e | | |
| | c9ffead7bf | | |
| | ed69d42005 | | |
| | 0b47ee306b | | |
| | e4e63619d4 | | |
| | f32cca292a | | |
| | e87ea19ff1 | | |
| | 0214793740 | | |
| | fc9dd5d743 | | |
| | 9e6d5dd2b9 | | |
| | bdad197e2c | | |
| | 7e139288a6 | | |
| | 6e7935abaf | | |
| | 3ba0cc20f1 | | |
| | dd28de1796 | | |
| | 9eecc9e19a | | |
| | 6530cb6b05 | | |
| | 41ce613379 | | |
| | 5e2785caba | | |
| | d7cc000976 | | |
| | 50d8ff95ae | | |
| | b2de1459b6 | | |
| | f0ffbea0b2 | | |
| | 199ccca0fe | | |
| | 1d9b355743 | | |
| | f0437fbb07 | | |
| | abc404a5b7 | | |
| | 04b9e21330 | | |
| | 1044aa071b | | |
| | 4c3192c8cc | | |
| | 689e77a025 | | |
| | 3bd89403d2 | | |
| | b4800d9bcb | | |
| | 05485e8539 | | |
| | 0e03dc0868 | | |
| | 352b1ed10a | | |
| | 0db1244d04 | | |
| | ece08b8179 | | |
| | b8945ae233 | | |
| | dcaf7b0a20 | | |
| | f982cdc178 | | |
| | b265e59834 | | |
| | 4a843a6624 | | |
| | 241ef5b99d | | |
| | f39f575a9c | | |
| | 1521307f1e | | |
| | dd122111e6 | | |
| | 00c177fa74 | | |
| | f6c7e49eb8 | | |
| | 1a8dc3d18a | | |
| | 38a163a09a | | |
| | 8f031246d2 | | |
| | 8f3d97dde7 | | |
| | 4acaf24d65 | | |
| | 9a8dbbbcf8 | | |
| | a3efc4c726 | | |
| | 0278bf328f | | |
| | 17ddd96cc6 | | |
| | 0e82e79aea | | |
| | 30f124c061 | | |
| | e19d90fcfc | | |
| | 184bbdd23d | | |
| | 30b50aec95 | | |
| | c3c3d81db1 | | |
| | 49b7231283 | | |
| | edbedcdad3 | | |
| | e4ae5f74e6 | | |
| | 2c7ffe08d7 | | |
| | 3ca46bae46 | | |
| | 7e82aaf843 | | |
| | 315bd71adf | | |
| | 2c612c9aeb | | |
| | 36aee085f7 | | |
| | d01bb69a9c | | |
| | c9b1c48c72 | | |
| | aea3843cf2 | | |
| | 131b6f4b9a | | |
| | 6efb8b735a | | |
| | 223b7af2ce | | |
| | e72c2a6982 | | |
| | dd9b93970e | | |
| | e4c7cd81a9 | | |
| | 12b3a62586 | | |
| | 2da3bdcd47 | | |
| | c1dccbe0ba | | |
| | 9629fcde68 | | |
| | cae436b566 | | |
| | 01714700ae | | |
| | 51e6c4852b | | |
| | b206c5d64e | | |
| | 62c3272351 | | |
| | c5d822c70a | | |
| | 9c09b4061a | | |
| | c26fb43ced | | |
| | deb8f20db6 | | |
| | 50e18ed8ff | | |
| | 31f3895f40 | | |
| | 615929268a | | |
| | b8b15814cf | | |
| | 7766fffe83 | | |
| | 2a16c150d1 | | |
| | 418c2166cc | | |
| | a4dd44f648 | | |
| | 5352f7cda7 | | |
| | 5533b47099 | | |
| | e9b14464ee | | |
| | 4e986e5cd1 | | |
| | 8a59b40c53 | | |

145

README.md


@@ -16,6 +16,13 @@ turn your phone or raspi into a portable file server with resumable uploads/down

|

||||

📷 **screenshots:** [browser](#the-browser) // [upload](#uploading) // [unpost](#unpost) // [thumbnails](#thumbnails) // [search](#searching) // [fsearch](#file-search) // [zip-DL](#zip-downloads) // [md-viewer](#markdown-viewer) // [ie4](#browser-support)

|

||||

|

||||

|

||||

## get the app

|

||||

|

||||

<a href="https://f-droid.org/packages/me.ocv.partyup/"><img src="https://ocv.me/fdroid.png" alt="Get it on F-Droid" height="50" /> '' <img src="https://img.shields.io/f-droid/v/me.ocv.partyup.svg" alt="f-droid version info" /></a> '' <a href="https://github.com/9001/party-up"><img src="https://img.shields.io/github/release/9001/party-up.svg?logo=github" alt="github version info" /></a>

|

||||

|

||||

(the app is **NOT** the full copyparty server! just a basic upload client, nothing fancy yet)

|

||||

|

||||

|

||||

## readme toc

|

||||

|

||||

* top

|

||||

@@ -47,13 +54,15 @@ turn your phone or raspi into a portable file server with resumable uploads/down

|

||||

* [other tricks](#other-tricks)

|

||||

* [searching](#searching) - search by size, date, path/name, mp3-tags, ...

|

||||

* [server config](#server-config) - using arguments or config files, or a mix of both

|

||||

* [ftp-server](#ftp-server) - an FTP server can be started using `--ftp 3921`

|

||||

* [file indexing](#file-indexing)

|

||||

* [upload rules](#upload-rules) - set upload rules using volume flags

|

||||

* [compress uploads](#compress-uploads) - files can be autocompressed on upload

|

||||

* [database location](#database-location) - in-volume (`.hist/up2k.db`, default) or somewhere else

|

||||

* [metadata from audio files](#metadata-from-audio-files) - set `-e2t` to index tags on upload

|

||||

* [file parser plugins](#file-parser-plugins) - provide custom parsers to index additional tags

|

||||

* [file parser plugins](#file-parser-plugins) - provide custom parsers to index additional tags, also see [./bin/mtag/README.md](./bin/mtag/README.md)

|

||||

* [upload events](#upload-events) - trigger a script/program on each upload

|

||||

* [hiding from google](#hiding-from-google) - tell search engines you dont wanna be indexed

|

||||

* [complete examples](#complete-examples)

|

||||

* [browser support](#browser-support) - TLDR: yes

|

||||

* [client examples](#client-examples) - interact with copyparty using non-browser clients

|

||||

@@ -75,9 +84,10 @@ turn your phone or raspi into a portable file server with resumable uploads/down

|

||||

* [optional dependencies](#optional-dependencies) - install these to enable bonus features

|

||||

* [install recommended deps](#install-recommended-deps)

|

||||

* [optional gpl stuff](#optional-gpl-stuff)

|

||||

* [sfx](#sfx) - there are two self-contained "binaries"

|

||||

* [sfx](#sfx) - the self-contained "binary"

|

||||

* [sfx repack](#sfx-repack) - reduce the size of an sfx by removing features

|

||||

* [install on android](#install-on-android)

|

||||

* [reporting bugs](#reporting-bugs) - ideas for context to include in bug reports

|

||||

* [building](#building)

|

||||

* [dev env setup](#dev-env-setup)

|

||||

* [just the sfx](#just-the-sfx)

|

||||

@@ -146,6 +156,7 @@ feature summary

|

||||

* ☑ multiprocessing (actual multithreading)

|

||||

* ☑ volumes (mountpoints)

|

||||

* ☑ [accounts](#accounts-and-volumes)

|

||||

* ☑ [ftp-server](#ftp-server)

|

||||

* upload

|

||||

* ☑ basic: plain multipart, ie6 support

|

||||

* ☑ [up2k](#uploading): js, resumable, multithreaded

|

||||

@@ -161,12 +172,13 @@ feature summary

|

||||

* ☑ file manager (cut/paste, delete, [batch-rename](#batch-rename))

|

||||

* ☑ audio player (with OS media controls and opus transcoding)

|

||||

* ☑ image gallery with webm player

|

||||

* ☑ textfile browser with syntax highlighting

|

||||

* ☑ [thumbnails](#thumbnails)

|

||||

* ☑ ...of images using Pillow

|

||||

* ☑ ...of images using Pillow, pyvips, or FFmpeg

|

||||

* ☑ ...of videos using FFmpeg

|

||||

* ☑ ...of audio (spectrograms) using FFmpeg

|

||||

* ☑ cache eviction (max-age; maybe max-size eventually)

|

||||

* ☑ SPA (browse while uploading)

|

||||

* if you use the navpane to navigate, not folders in the file list

|

||||

* server indexing

|

||||

* ☑ [locate files by contents](#file-search)

|

||||

* ☑ search by name/path/date/size

|

||||

@@ -228,11 +240,17 @@ some improvement ideas

|

||||

|

||||

## general bugs

|

||||

|

||||

* Windows: if the up2k db is on a samba-share or network disk, you'll get unpredictable behavior if the share is disconnected for a bit

|

||||

* use `--hist` or the `hist` volflag (`-v [...]:c,hist=/tmp/foo`) to place the db on a local disk instead

|

||||

* all volumes must exist / be available on startup; up2k (mtp especially) gets funky otherwise

|

||||

* probably more, pls let me know

|

||||

|

||||

## not my bugs

|

||||

|

||||

* iPhones: the volume control doesn't work because [apple doesn't want it to](https://developer.apple.com/library/archive/documentation/AudioVideo/Conceptual/Using_HTML5_Audio_Video/Device-SpecificConsiderations/Device-SpecificConsiderations.html#//apple_ref/doc/uid/TP40009523-CH5-SW11)

|

||||

* *future workaround:* enable the equalizer, make it all-zero, and set a negative boost to reduce the volume

|

||||

* "future" because `AudioContext` is broken in the current iOS version (15.1), maybe one day...

|

||||

|

||||

* Windows: folders cannot be accessed if the name ends with `.`

|

||||

* python or windows bug

|

||||

|

||||

@@ -249,6 +267,7 @@ some improvement ideas

|

||||

|

||||

* is it possible to block read-access to folders unless you know the exact URL for a particular file inside?

|

||||

* yes, using the [`g` permission](#accounts-and-volumes), see the examples there

|

||||

* you can also do this with linux filesystem permissions; `chmod 111 music` will make it possible to access files and folders inside the `music` folder but not list the immediate contents -- also works with other software, not just copyparty

|

||||

|

||||

* can I make copyparty download a file to my server if I give it a URL?

|

||||

* not officially, but there is a [terrible hack](https://github.com/9001/copyparty/blob/hovudstraum/bin/mtag/wget.py) which makes it possible

|

||||

@@ -256,7 +275,10 @@ some improvement ideas

|

||||

|

||||

# accounts and volumes

|

||||

|

||||

per-folder, per-user permissions

|

||||

per-folder, per-user permissions - if your setup is getting complex, consider making a [config file](./docs/example.conf) instead of using arguments

|

||||

* much easier to manage, and you can modify the config at runtime with `systemctl reload copyparty` or more conveniently using the `[reload cfg]` button in the control-panel (if logged in as admin)

|

||||

|

||||

configuring accounts/volumes with arguments:

|

||||

* `-a usr:pwd` adds account `usr` with password `pwd`

|

||||

* `-v .::r` adds current-folder `.` as the webroot, `r`eadable by anyone

|

||||

* the syntax is `-v src:dst:perm:perm:...` so local-path, url-path, and one or more permissions to set

|

||||

@@ -314,6 +336,7 @@ the browser has the following hotkeys (always qwerty)

|

||||

* `V` toggle folders / textfiles in the navpane

|

||||

* `G` toggle list / [grid view](#thumbnails)

|

||||

* `T` toggle thumbnails / icons

|

||||

* `ESC` close various things

|

||||

* `ctrl-X` cut selected files/folders

|

||||

* `ctrl-V` paste

|

||||

* `F2` [rename](#batch-rename) selected file/folder

|

||||

@@ -365,9 +388,13 @@ switching between breadcrumbs or navpane

|

||||

|

||||

click the `🌲` or press the `B` hotkey to toggle between the breadcrumbs path (default) and a navpane (tree-browser sidebar thing)

|

||||

|

||||

* `[-]` and `[+]` (or hotkeys `A`/`D`) adjust the size

|

||||

* `[v]` jumps to the currently open folder

|

||||

* `[+]` and `[-]` (or hotkeys `A`/`D`) adjust the size

|

||||

* `[🎯]` jumps to the currently open folder

|

||||

* `[📃]` toggles between showing folders and textfiles

|

||||

* `[📌]` shows the name of all parent folders in a docked panel

|

||||

* `[a]` toggles automatic widening as you go deeper

|

||||

* `[↵]` toggles wordwrap

|

||||

* `[👀]` show full name on hover (if wordwrap is off)

|

||||

|

||||

|

||||

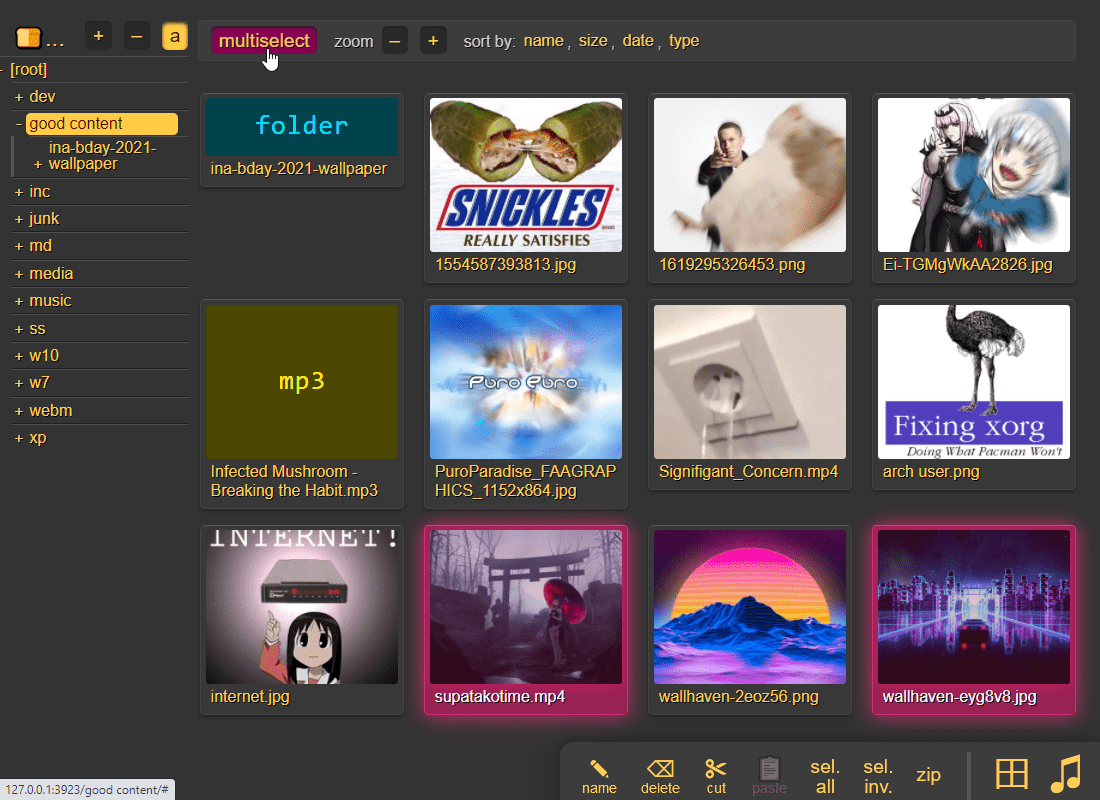

## thumbnails

|

||||

@@ -376,13 +403,16 @@ press `g` to toggle grid-view instead of the file listing, and `t` toggles icon

|

||||

|

||||

|

||||

|

||||

it does static images with Pillow and uses FFmpeg for video files, so you may want to `--no-thumb` or maybe just `--no-vthumb` depending on how dangerous your users are

|

||||

it does static images with Pillow / pyvips / FFmpeg, and uses FFmpeg for video files, so you may want to `--no-thumb` or maybe just `--no-vthumb` depending on how dangerous your users are

|

||||

* pyvips is 3x faster than Pillow, Pillow is 3x faster than FFmpeg

|

||||

* disable thumbnails for specific volumes with volflag `dthumb` for all, or `dvthumb` / `dathumb` / `dithumb` for video/audio/images only

|

||||

|

||||

audio files are converted into spectrograms using FFmpeg unless you `--no-athumb` (and some FFmpeg builds may need `--th-ff-swr`)

|

||||

|

||||

images with the following names (see `--th-covers`) become the thumbnail of the folder they're in: `folder.png`, `folder.jpg`, `cover.png`, `cover.jpg`

|

||||

|

||||

in the grid/thumbnail view, if the audio player panel is open, songs will start playing when clicked

|

||||

* indicated by the audio files having the ▶ icon instead of 💾

|

||||

|

||||

|

||||

## zip downloads

|

||||

@@ -433,7 +463,7 @@ see [up2k](#up2k) for details on how it works

|

||||

|

||||

|

||||

|

||||

**protip:** you can avoid scaring away users with [docs/minimal-up2k.html](docs/minimal-up2k.html) which makes it look [much simpler](https://user-images.githubusercontent.com/241032/118311195-dd6ca380-b4ef-11eb-86f3-75a3ff2e1332.png)

|

||||

**protip:** you can avoid scaring away users with [contrib/plugins/minimal-up2k.html](contrib/plugins/minimal-up2k.html) which makes it look [much simpler](https://user-images.githubusercontent.com/241032/118311195-dd6ca380-b4ef-11eb-86f3-75a3ff2e1332.png)

|

||||

|

||||

**protip:** if you enable `favicon` in the `[⚙️] settings` tab (by typing something into the textbox), the icon in the browser tab will indicate upload progress

|

||||

|

||||

@@ -473,8 +503,6 @@ the files will be hashed on the client-side, and each hash is sent to the server

|

||||

files go into `[ok]` if they exist (and you get a link to where it is), otherwise they land in `[ng]`

|

||||

* the main reason filesearch is combined with the uploader is cause the code was too spaghetti to separate it out somewhere else, this is no longer the case but now i've warmed up to the idea too much

|

||||

|

||||

adding the same file multiple times is blocked, so if you first search for a file and then decide to upload it, you have to click the `[cleanup]` button to discard `[done]` files (or just refresh the page)

|

||||

|

||||

|

||||

### unpost

|

||||

|

||||

@@ -562,6 +590,8 @@ and there are *two* editors

|

||||

|

||||

* you can link a particular timestamp in an audio file by adding it to the URL, such as `&20` / `&20s` / `&1m20` / `&t=1:20` after the `.../#af-c8960dab`

|

||||

|

||||

* enabling the audio equalizer can help make gapless albums fully gapless in some browsers (chrome), so consider leaving it on with all the values at zero

|

||||

|

||||

* get a plaintext file listing by adding `?ls=t` to a URL, or a compact colored one with `?ls=v` (for unix terminals)

|

||||

|

||||

* if you are using media hotkeys to switch songs and are getting tired of seeing the OSD popup which Windows doesn't let you disable, consider https://ocv.me/dev/?media-osd-bgone.ps1
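the timestamp suffixes mentioned above (`&20` / `&20s` / `&1m20` / `&t=1:20`) can be read as plain seconds or minutes plus seconds. a rough python sketch of that reading follows; `parse_timestamp` is a made-up helper for illustration and not the code the web-ui uses, and it assumes that a bare number means seconds:

```python
import re


def parse_timestamp(frag):
    """turn '20', '20s', '1m20' or 't=1:20' into a number of seconds"""
    frag = frag.lstrip("&")
    if frag.startswith("t="):
        frag = frag[2:]

    if ":" in frag:
        # colon-separated, e.g. 1:20 (or 1:02:03); each step is a factor 60
        secs = 0
        for part in frag.split(":"):
            secs = secs * 60 + int(part)
        return secs

    # optional "<minutes>m", optional seconds, optional trailing "s"
    m = re.fullmatch(r"(?:(\d+)m)?(\d+)?s?", frag)
    if not m or not (m.group(1) or m.group(2)):
        raise ValueError("unrecognized timestamp: " + frag)

    return int(m.group(1) or 0) * 60 + int(m.group(2) or 0)


for example in ["&20", "&20s", "&1m20", "&t=1:20"]:
    print(example, parse_timestamp(example))
```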

|

||||

@@ -594,6 +624,20 @@ add the argument `-e2ts` to also scan/index tags from music files, which brings

|

||||

|

||||

using arguments or config files, or a mix of both:

|

||||

* config files (`-c some.conf`) can set additional commandline arguments; see [./docs/example.conf](docs/example.conf)

|

||||

* `kill -s USR1` (same as `systemctl reload copyparty`) to reload accounts and volumes from config files without restarting

|

||||

* or click the `[reload cfg]` button in the control-panel when logged in as admin

|

||||

|

||||

|

||||

## ftp-server

|

||||

|

||||

an FTP server can be started using `--ftp 3921`, and/or `--ftps` for explicit TLS (ftpes)

|

||||

|

||||

* based on [pyftpdlib](https://github.com/giampaolo/pyftpdlib)

|

||||

* needs a dedicated port (cannot share with the HTTP/HTTPS API)

|

||||

* uploads are not resumable -- delete and restart if necessary

|

||||

* runs in active mode by default, you probably want `--ftp-pr 12000-13000`

|

||||

* if you enable both `ftp` and `ftps`, the port-range will be divided in half

|

||||

* some older software (filezilla on debian-stable) cannot passive-mode with TLS

|

||||

|

||||

|

||||

## file indexing

|

||||

@@ -608,10 +652,12 @@ through arguments:

|

||||

* `-e2ts` also scans for tags in all files that don't have tags yet

|

||||

* `-e2tsr` also deletes all existing tags, doing a full reindex

|

||||

|

||||

the same arguments can be set as volume flags, in addition to `d2d` and `d2t` for disabling:

|

||||

the same arguments can be set as volume flags, in addition to `d2d`, `d2ds`, `d2t`, `d2ts` for disabling:

|

||||

* `-v ~/music::r:c,e2dsa,e2tsr` does a full reindex of everything on startup

|

||||

* `-v ~/music::r:c,d2d` disables **all** indexing, even if any `-e2*` are on

|

||||

* `-v ~/music::r:c,d2t` disables all `-e2t*` (tags), does not affect `-e2d*`

|

||||

* `-v ~/music::r:c,d2ds` disables on-boot scans; only index new uploads

|

||||

* `-v ~/music::r:c,d2ts` same except only affecting tags

|

||||

|

||||

note:

|

||||

* the parser can finally handle `c,e2dsa,e2tsr` so you no longer have to `c,e2dsa:c,e2tsr`
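to make the `-v src:dst:perm:perm:...` syntax a bit more concrete, here is a rough python sketch that splits a volume spec into its parts. `parse_volume` is a made-up helper for illustration, not copyparty's actual parser; it assumes there is no `:` inside the paths (so no windows drive letters), treats any field starting with `c,` as a list of volflags, and does not handle flags whose value contains a comma (such as `rotn=1000,2`):

```python
def parse_volume(spec):
    """split 'src:dst:perm[:perm...]' into (src, dst, perms, flags)"""
    src, dst, *rest = spec.split(":")
    perms, flags = [], []
    for field in rest:
        if field.startswith("c,"):
            # volflags; several may share one "c," field
            flags.extend(field[2:].split(","))
        else:
            perms.append(field)
    return src, dst, perms, flags


# both spellings from the note above end up with the same flags
print(parse_volume("~/music::r:c,e2dsa,e2tsr"))
print(parse_volume("~/music::r:c,e2dsa:c,e2tsr"))
```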

|

||||

@@ -632,7 +678,7 @@ if you set `--no-hash [...]` globally, you can enable hashing for specific volum

|

||||

|

||||

set upload rules using volume flags, some examples:

|

||||

|

||||

* `:c,sz=1k-3m` sets allowed filesize between 1 KiB and 3 MiB inclusive (suffixes: b, k, m, g)

|

||||

* `:c,sz=1k-3m` sets allowed filesize between 1 KiB and 3 MiB inclusive (suffixes: `b`, `k`, `m`, `g`)

|

||||

* `:c,nosub` disallow uploading into subdirectories; goes well with `rotn` and `rotf`:

|

||||

* `:c,rotn=1000,2` moves uploads into subfolders, up to 1000 files in each folder before making a new one, two levels deep (must be at least 1)

|

||||

* `:c,rotf=%Y/%m/%d/%H` enforces files to be uploaded into a structure of subfolders according to that date format
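a small python sketch of what the `sz` and `rotf` rules above mean in practice. the helper names are made up for illustration and this is not how copyparty implements them; it assumes the suffixes are powers of 1024 (as the KiB / MiB wording suggests) and that `rotf` takes regular strftime codes:

```python
from datetime import datetime

# assumed multipliers for the b / k / m / g suffixes
UNITS = {"b": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def parse_size(txt):
    """'3m' -> 3145728; a bare number is taken as bytes"""
    txt = txt.strip().lower()
    if txt and txt[-1] in UNITS:
        return int(txt[:-1]) * UNITS[txt[-1]]
    return int(txt)


def size_allowed(nbytes, rule):
    """check nbytes against a rule like '1k-3m' (both ends inclusive)"""
    lo, hi = (parse_size(x) for x in rule.split("-"))
    return lo <= nbytes <= hi


def rotf_folder(fmt, when):
    """expand a rotf date format into a relative folder path"""
    return when.strftime(fmt)


print(size_allowed(1024, "1k-3m"))             # smallest accepted size
print(size_allowed(3 * 1024 ** 2 + 1, "1k-3m"))  # one byte too large
print(rotf_folder("%Y/%m/%d/%H", datetime(2021, 11, 28, 13, 37)))
```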

|

||||

@@ -666,6 +712,12 @@ things to note,

|

||||

* the files will be indexed after compression, so dupe-detection and file-search will not work as expected

|

||||

|

||||

some examples,

|

||||

* `-v inc:inc:w:c,pk=xz,0`

|

||||

folder named inc, shared at inc, write-only for everyone, forces xz compression at level 0

|

||||

* `-v inc:inc:w:c,pk`

|

||||

same write-only inc, but forces gz compression (default) instead of xz

|

||||

* `-v inc:inc:w:c,gz`

|

||||

allows (but does not force) gz compression if client uploads to `/inc?pk` or `/inc?gz` or `/inc?gz=4`

|

||||

|

||||

|

||||

## database location

|

||||

@@ -710,7 +762,7 @@ see the beautiful mess of a dictionary in [mtag.py](https://github.com/9001/copy

|

||||

|

||||

## file parser plugins

|

||||

|

||||

provide custom parsers to index additional tags

|

||||

provide custom parsers to index additional tags, also see [./bin/mtag/README.md](./bin/mtag/README.md)

|

||||

|

||||

copyparty can invoke external programs to collect additional metadata for files using `mtp` (either as argument or volume flag), there is a default timeout of 30sec

|

||||

|

||||

@@ -743,6 +795,17 @@ and it will occupy the parsing threads, so fork anything expensive, or if you wa

|

||||

if this becomes popular maybe there should be a less janky way to do it actually

|

||||

|

||||

|

||||

## hiding from google

|

||||

|

||||

tell search engines you dont wanna be indexed, either using the good old [robots.txt](https://www.robotstxt.org/robotstxt.html) or through copyparty settings:

|

||||

|

||||

* `--no-robots` adds HTTP (`X-Robots-Tag`) and HTML (`<meta>`) headers with `noindex, nofollow` globally

|

||||

* volume-flag `[...]:c,norobots` does the same thing for that single volume

|

||||

* volume-flag `[...]:c,robots` ALLOWS search-engine crawling for that volume, even if `--no-robots` is set globally
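if you want to verify from the client side that the `X-Robots-Tag` header is actually being sent, something like this python sketch should do. `blocks_indexing` is a made-up helper name, and it only looks at the HTTP header, not the HTML `<meta>` tag:

```python
def blocks_indexing(headers):
    """True if an X-Robots-Tag header contains the noindex directive"""
    for name, value in headers.items():
        if name.lower() == "x-robots-tag":
            directives = {d.strip().lower() for d in value.split(",")}
            return "noindex" in directives
    return False


print(blocks_indexing({"X-Robots-Tag": "noindex, nofollow"}))
print(blocks_indexing({"Content-Type": "text/html"}))
```

anything with an `.items()` method works as input, so the `headers` attribute of a `urllib.request.urlopen(...)` response can be passed in directly.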

|

||||

|

||||

also, `--force-js` disables the plain HTML folder listing, making things harder to parse for search engines

|

||||

|

||||

|

||||

## complete examples

|

||||

|

||||

* read-only music server with bpm and key scanning

|

||||

@@ -781,7 +844,7 @@ TLDR: yes

|

||||

* internet explorer 6 to 8 behave the same

|

||||

* firefox 52 and chrome 49 are the final winxp versions

|

||||

* `*1` yes, but extremely slow (ie10: `1 MiB/s`, ie11: `270 KiB/s`)

|

||||

* `*3` using a wasm decoder which consumes a bit more power

|

||||

* `*3` iOS 11 and newer, opus only, and requires FFmpeg on the server

|

||||

|

||||

quick summary of more eccentric web-browsers trying to view a directory index:

|

||||

|

||||

@@ -801,8 +864,8 @@ quick summary of more eccentric web-browsers trying to view a directory index:

|

||||

interact with copyparty using non-browser clients

|

||||

|

||||

* javascript: dump some state into a file (two separate examples)

|

||||

* `await fetch('https://127.0.0.1:3923/', {method:"PUT", body: JSON.stringify(foo)});`

|

||||

* `var xhr = new XMLHttpRequest(); xhr.open('POST', 'https://127.0.0.1:3923/msgs?raw'); xhr.send('foo');`

|

||||

* `await fetch('//127.0.0.1:3923/', {method:"PUT", body: JSON.stringify(foo)});`

|

||||

* `var xhr = new XMLHttpRequest(); xhr.open('POST', '//127.0.0.1:3923/msgs?raw'); xhr.send('foo');`

|

||||

|

||||

* curl/wget: upload some files (post=file, chunk=stdin)

|

||||

* `post(){ curl -b cppwd=wark -F act=bput -F f=@"$1" http://127.0.0.1:3923/;}`

|

||||

@@ -831,7 +894,7 @@ copyparty returns a truncated sha512sum of your PUT/POST as base64; you can gene

|

||||

b512(){ printf "$((sha512sum||shasum -a512)|sed -E 's/ .*//;s/(..)/\\x\1/g')"|base64|tr '+/' '-_'|head -c44;}

|

||||

b512 <movie.mkv
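the same checksum can be computed without a shell; a minimal python sketch which mirrors the steps of the one-liner above (sha512, base64, swap `+/` for `-_`, keep the first 44 characters). the function names are just for illustration:

```python
import base64
import hashlib


def b512(data):
    """truncated, url-safe base64 of the sha512 digest of some bytes"""
    digest = hashlib.sha512(data).digest()
    return base64.urlsafe_b64encode(digest)[:44].decode("ascii")


def b512_file(path, bufsize=1024 * 1024):
    """same thing for a file on disk, read in chunks to save memory"""
    hasher = hashlib.sha512()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(bufsize), b""):
            hasher.update(chunk)
    return base64.urlsafe_b64encode(hasher.digest())[:44].decode("ascii")


print(b512(b"hello world"))
```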

|

||||

|

||||

you can provide passwords using cookie 'cppwd=hunter2', as a url query `?pw=hunter2`, or with basic-authentication (either as the username or password)

|

||||

you can provide passwords using cookie `cppwd=hunter2`, as a url query `?pw=hunter2`, or with basic-authentication (either as the username or password)
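the three ways of sending a password could look roughly like this from python. this is a sketch using only the standard library; the helper names are made up, the requests are only built and not sent, and the basic-auth variant puts the password in the password field with an empty username (one of the two options mentioned above):

```python
import base64
import urllib.parse
import urllib.request


def with_cookie(url, pw):
    """password as the cppwd cookie"""
    return urllib.request.Request(url, headers={"Cookie": "cppwd=" + pw})


def with_query(url, pw):
    """password as the pw url parameter"""
    sep = "&" if "?" in url else "?"
    return urllib.request.Request(url + sep + "pw=" + urllib.parse.quote(pw, safe=""))


def with_basic_auth(url, pw, user=""):
    """password through http basic-authentication"""
    token = base64.b64encode((user + ":" + pw).encode("utf-8")).decode("ascii")
    return urllib.request.Request(url, headers={"Authorization": "Basic " + token})


req = with_query("http://127.0.0.1:3923/", "hunter2")
print(req.full_url)
```

pass any of the returned objects to `urllib.request.urlopen` to actually perform the request.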

|

||||

|

||||

|

||||

# up2k

|

||||

@@ -970,6 +1033,7 @@ authenticate using header `Cookie: cppwd=foo` or url param `&pw=foo`

|

||||

| GET | `?txt=iso-8859-1` | ...with specific charset |

|

||||

| GET | `?th` | get image/video at URL as thumbnail |

|

||||

| GET | `?th=opus` | convert audio file to 128kbps opus |

|

||||

| GET | `?th=caf` | ...in the iOS-proprietary container |

|

||||

|

||||

| method | body | result |

|

||||

|--|--|--|

|

||||

@@ -1025,15 +1089,22 @@ mandatory deps:

|

||||

|

||||

install these to enable bonus features

|

||||

|

||||

enable ftp-server:

|

||||

* for just plaintext FTP, `pyftpdlib` (is built into the SFX)

|

||||

* with TLS encryption, `pyftpdlib pyopenssl`

|

||||

|

||||

enable music tags:

|

||||

* either `mutagen` (fast, pure-python, skips a few tags, makes copyparty GPL? idk)

|

||||

* or `ffprobe` (20x slower, more accurate, possibly dangerous depending on your distro and users)

|

||||

|

||||

enable [thumbnails](#thumbnails) of...

|

||||

* **images:** `Pillow` (requires py2.7 or py3.5+)

|

||||

* **images:** `Pillow` and/or `pyvips` and/or `ffmpeg` (requires py2.7 or py3.5+)

|

||||

* **videos/audio:** `ffmpeg` and `ffprobe` somewhere in `$PATH`

|

||||

* **HEIF pictures:** `pyheif-pillow-opener` (requires Linux or a C compiler)

|

||||

* **AVIF pictures:** `pillow-avif-plugin`

|

||||

* **HEIF pictures:** `pyvips` or `ffmpeg` or `pyheif-pillow-opener` (requires Linux or a C compiler)

|

||||

* **AVIF pictures:** `pyvips` or `ffmpeg` or `pillow-avif-plugin`

|

||||

* **JPEG XL pictures:** `pyvips` or `ffmpeg`

|

||||

|

||||

`pyvips` gives higher quality thumbnails than `Pillow` and is 320% faster, using 270% more ram: `sudo apt install libvips42 && python3 -m pip install --user -U pyvips`

|

||||

|

||||

|

||||

## install recommended deps

|

||||

@@ -1051,13 +1122,7 @@ these are standalone programs and will never be imported / evaluated by copypart

|

||||

|

||||

# sfx

|

||||

|

||||

there are two self-contained "binaries":

|

||||

* [copyparty-sfx.py](https://github.com/9001/copyparty/releases/latest/download/copyparty-sfx.py) -- pure python, works everywhere, **recommended**

|

||||

* [copyparty-sfx.sh](https://github.com/9001/copyparty/releases/latest/download/copyparty-sfx.sh) -- smaller, but only for linux and macos, kinda deprecated

|

||||

|

||||

launch either of them (**use sfx.py on systemd**) and it'll unpack and run copyparty, assuming you have python installed of course

|

||||

|

||||

pls note that `copyparty-sfx.sh` will fail if you rename `copyparty-sfx.py` to `copyparty.py` and keep it in the same folder because `sys.path` is funky

|

||||

the self-contained "binary" [copyparty-sfx.py](https://github.com/9001/copyparty/releases/latest/download/copyparty-sfx.py) will unpack itself and run copyparty, assuming you have python installed of course

|

||||

|

||||

|

||||

## sfx repack

|

||||

@@ -1065,13 +1130,11 @@ pls note that `copyparty-sfx.sh` will fail if you rename `copyparty-sfx.py` to `

|

||||

reduce the size of an sfx by removing features

|

||||

|

||||

if you don't need all the features, you can repack the sfx and save a bunch of space; all you need is an sfx and a copy of this repo (nothing else to download or build, except if you're on windows then you need msys2 or WSL)

|

||||

* `584k` size of original sfx.py as of v1.1.0

|

||||

* `392k` after `./scripts/make-sfx.sh re no-ogv`

|

||||

* `310k` after `./scripts/make-sfx.sh re no-ogv no-cm`

|

||||

* `269k` after `./scripts/make-sfx.sh re no-ogv no-cm no-hl`

|

||||

* `393k` size of original sfx.py as of v1.1.3

|

||||

* `310k` after `./scripts/make-sfx.sh re no-cm`

|

||||

* `269k` after `./scripts/make-sfx.sh re no-cm no-hl`

|

||||

|

||||

the features you can opt to drop are

|

||||

* `ogv`.js, the opus/vorbis decoder which is needed by apple devices to play foss audio files, saves ~192k

|

||||

* `cm`/easymde, the "fancy" markdown editor, saves ~82k

|

||||

* `hl`, prism, the syntax highlighter, saves ~41k

|

||||

* `fnt`, source-code-pro, the monospace font, saves ~9k

|

||||

@@ -1079,7 +1142,7 @@ the features you can opt to drop are

|

||||

|

||||

for the `re`pack to work, first run one of the sfx'es once to unpack it

|

||||

|

||||

**note:** you can also just download and run [scripts/copyparty-repack.sh](scripts/copyparty-repack.sh) -- this will grab the latest copyparty release from github and do a `no-ogv no-cm` repack; works on linux/macos (and windows with msys2 or WSL)

|

||||

**note:** you can also just download and run [scripts/copyparty-repack.sh](scripts/copyparty-repack.sh) -- this will grab the latest copyparty release from github and do a few repacks; works on linux/macos (and windows with msys2 or WSL)

|

||||

|

||||

|

||||

# install on android

|

||||

@@ -1093,6 +1156,16 @@ echo $?

|

||||

after the initial setup, you can launch copyparty at any time by running `copyparty` anywhere in Termux

|

||||

|

||||

|

||||

# reporting bugs

|

||||

|

||||

ideas for context to include in bug reports

|

||||

|

||||

if something broke during an upload (replacing FILENAME with a part of the filename that broke):

|

||||

```

|

||||

journalctl -aS '48 hour ago' -u copyparty | grep -C10 FILENAME | tee bug.log

|

||||

```

|

||||

|

||||

|

||||

# building

|

||||

|

||||

## dev env setup

|

||||

@@ -1124,8 +1197,8 @@ mv /tmp/pe-copyparty/copyparty/web/deps/ copyparty/web/deps/

|

||||

then build the sfx using any of the following examples:

|

||||

|

||||

```sh

|

||||

./scripts/make-sfx.sh # both python and sh editions

|

||||

./scripts/make-sfx.sh no-sh gz # just python with gzip

|

||||

./scripts/make-sfx.sh # regular edition

|

||||

./scripts/make-sfx.sh gz no-cm # gzip-compressed + no fancy markdown editor

|

||||

```

|

||||

|

||||

|

||||

|

||||

@@ -2,9 +2,14 @@

|

||||

* command-line up2k client [(webm)](https://ocv.me/stuff/u2cli.webm)

|

||||

* file uploads, file-search, autoresume of aborted/broken uploads

|

||||

* faster than browsers

|

||||

* early beta, if something breaks just restart it

|

||||

* if something breaks just restart it

|

||||

|

||||

|

||||

# [`partyjournal.py`](partyjournal.py)

|

||||

produces a chronological list of all uploads by collecting info from up2k databases and the filesystem

|

||||

* outputs a standalone html file

|

||||

* optional mapping from IP-addresses to nicknames

|

||||

|

||||

|

||||

# [`copyparty-fuse.py`](copyparty-fuse.py)

|

||||

* mount a copyparty server as a local filesystem (read-only)

|

||||

|

||||

@@ -11,14 +11,18 @@ import re

|

||||

import os

|

||||

import sys

|

||||

import time

|

||||

import json

|

||||

import stat

|

||||

import errno

|

||||

import struct

|

||||

import codecs

|

||||

import platform

|

||||

import threading

|

||||

import http.client # py2: httplib

|

||||

import urllib.parse

|

||||

from datetime import datetime

|

||||

from urllib.parse import quote_from_bytes as quote

|

||||

from urllib.parse import unquote_to_bytes as unquote

|

||||

|

||||

try:

|

||||

import fuse

|

||||

@@ -38,7 +42,7 @@ except:

|

||||

mount a copyparty server (local or remote) as a filesystem

|

||||

|

||||

usage:

|

||||

python ./copyparty-fuseb.py -f -o allow_other,auto_unmount,nonempty,url=http://192.168.1.69:3923 /mnt/nas

|

||||

python ./copyparty-fuseb.py -f -o allow_other,auto_unmount,nonempty,pw=wark,url=http://192.168.1.69:3923 /mnt/nas

|

||||

|

||||

dependencies:

|

||||

sudo apk add fuse-dev python3-dev

|

||||

@@ -50,6 +54,10 @@ fork of copyparty-fuse.py based on fuse-python which

|

||||

"""

|

||||

|

||||

|

||||

WINDOWS = sys.platform == "win32"

|

||||

MACOS = platform.system() == "Darwin"

|

||||

|

||||

|

||||

def threadless_log(msg):

|

||||

print(msg + "\n", end="")

|

||||

|

||||

@@ -93,6 +101,41 @@ def html_dec(txt):

|

||||

)

|

||||

|

||||

|

||||

def register_wtf8():

|

||||

def wtf8_enc(text):

|

||||

return str(text).encode("utf-8", "surrogateescape"), len(text)

|

||||

|

||||

def wtf8_dec(binary):

|

||||

return bytes(binary).decode("utf-8", "surrogateescape"), len(binary)

|

||||

|

||||

def wtf8_search(encoding_name):

|

||||

return codecs.CodecInfo(wtf8_enc, wtf8_dec, name="wtf-8")

|

||||

|

||||

codecs.register(wtf8_search)

|

||||

|

||||

|

||||

bad_good = {}

|

||||

good_bad = {}

|

||||

|

||||

|

||||

def enwin(txt):

|

||||

return "".join([bad_good.get(x, x) for x in txt])

|

||||

|

||||

for bad, good in bad_good.items():

|

||||

txt = txt.replace(bad, good)

|

||||

|

||||

return txt

|

||||

|

||||

|

||||

def dewin(txt):

|

||||

return "".join([good_bad.get(x, x) for x in txt])

|

||||

|

||||

for bad, good in bad_good.items():

|

||||

txt = txt.replace(good, bad)

|

||||

|

||||

return txt

|

||||

|

||||

|

||||

class CacheNode(object):

|

||||

def __init__(self, tag, data):

|

||||

self.tag = tag

|

||||
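The `register_wtf8` hunk above can be tried in isolation. This is a minimal sketch rather than the script's exact code: the `_wtf8_*` names and the optional `errors` parameter are assumptions made for illustration, and the search function only answers for its own codec name, whereas the one in the diff returns the codec for any name that reaches it. It shows that bytes which are not valid UTF-8 survive a decode/encode round trip through `surrogateescape`.

```python
import codecs


def _wtf8_enc(text, errors="strict"):
    # str -> bytes; lone surrogates produced by surrogateescape become raw bytes again
    return str(text).encode("utf-8", "surrogateescape"), len(text)


def _wtf8_dec(binary, errors="strict"):
    # bytes-like -> str; undecodable bytes become lone surrogates U+DC80..U+DCFF
    return bytes(binary).decode("utf-8", "surrogateescape"), len(binary)


def _wtf8_search(encoding_name):
    # the registry may hand us "wtf-8" or "wtf_8" depending on the Python version
    if encoding_name in ("wtf-8", "wtf_8"):
        return codecs.CodecInfo(_wtf8_enc, _wtf8_dec, name="wtf-8")
    return None


codecs.register(_wtf8_search)

raw = b"caf\xe9.txt"  # latin-1 filename, not valid UTF-8
name = raw.decode("wtf-8")
roundtrip = name.encode("wtf-8")
```

With a plain `encode("utf-8", "ignore")`, as in the line this hunk replaces inside `quotep`, the undecodable character would be dropped and the server would be asked for a different filename.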

@@ -115,8 +158,9 @@ class Stat(fuse.Stat):

|

||||

|

||||

|

||||

class Gateway(object):

|

||||

def __init__(self, base_url):

|

||||

def __init__(self, base_url, pw):

|

||||

self.base_url = base_url

|

||||

self.pw = pw

|

||||

|

||||

ui = urllib.parse.urlparse(base_url)

|

||||

self.web_root = ui.path.strip("/")

|

||||

@@ -135,8 +179,7 @@ class Gateway(object):

|

||||

self.conns = {}

|

||||

|

||||

def quotep(self, path):

|

||||

# TODO: mojibake support

|

||||

path = path.encode("utf-8", "ignore")

|

||||

path = path.encode("wtf-8")

|

||||

return quote(path, safe="/")

|

||||

|

||||

def getconn(self, tid=None):

|

||||

@@ -159,20 +202,29 @@ class Gateway(object):

|

||||

except:

|

||||

pass

|

||||

|

||||

def sendreq(self, *args, **kwargs):

|

||||

def sendreq(self, *args, **ka):

|

||||

tid = get_tid()

|

||||

if self.pw:

|

||||

ck = "cppwd=" + self.pw

|

||||

try:

|

||||

ka["headers"]["Cookie"] = ck

|

||||

except:

|

||||

ka["headers"] = {"Cookie": ck}

|

||||

try:

|

||||

c = self.getconn(tid)

|

||||

c.request(*list(args), **kwargs)

|

||||

c.request(*list(args), **ka)

|

||||

return c.getresponse()

|

||||

except:

|

||||

self.closeconn(tid)

|

||||

c = self.getconn(tid)

|

||||

c.request(*list(args), **kwargs)

|

||||

c.request(*list(args), **ka)

|

||||

return c.getresponse()

|

||||

|

||||

def listdir(self, path):

|

||||

web_path = self.quotep("/" + "/".join([self.web_root, path])) + "?dots"

|

||||

if bad_good:

|

||||

path = dewin(path)

|

||||

|

||||

web_path = self.quotep("/" + "/".join([self.web_root, path])) + "?dots&ls"

|

||||

r = self.sendreq("GET", web_path)

|

||||

if r.status != 200:

|

||||

self.closeconn()

|

||||
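The password handling added to `sendreq` in this hunk boils down to attaching a `cppwd` cookie to every request. A small sketch of that step follows, assuming a hypothetical helper named `with_password_cookie`; it uses `dict.get` where the diff uses `try`/`except`, and it copies the keyword arguments instead of mutating them.

```python
def with_password_cookie(kwargs, pw):
    """Return a copy of the request kwargs with the cppwd cookie set (hypothetical helper)."""
    if not pw:
        return kwargs

    headers = dict(kwargs.get("headers") or {})
    headers["Cookie"] = "cppwd=" + pw

    out = dict(kwargs)
    out["headers"] = headers
    return out


no_pw = with_password_cookie({}, None)
fresh = with_password_cookie({}, "wark")
merged = with_password_cookie({"headers": {"Range": "bytes=0-9"}}, "wark")
```

The result can be passed to `http.client.HTTPConnection.request(method, url, **kwargs)`, which accepts a `headers` mapping.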

@@ -182,9 +234,12 @@ class Gateway(object):

|

||||

)

|

||||

)

|

||||

|

||||

return self.parse_html(r)

|

||||

return self.parse_jls(r)

|

||||

|

||||

def download_file_range(self, path, ofs1, ofs2):

|

||||

if bad_good:

|

||||

path = dewin(path)

|

||||

|

||||

web_path = self.quotep("/" + "/".join([self.web_root, path])) + "?raw"

|

||||

hdr_range = "bytes={}-{}".format(ofs1, ofs2 - 1)

|

||||

log("downloading {}".format(hdr_range))

|

||||

@@ -200,40 +255,27 @@ class Gateway(object):

|

||||

|

||||

return r.read()

|

||||

|

||||

def parse_html(self, datasrc):

|

||||

ret = []

|

||||

remainder = b""

|

||||

ptn = re.compile(

|

||||

r"^<tr><td>(-|DIR)</td><td><a [^>]+>([^<]+)</a></td><td>([^<]+)</td><td>([^<]+)</td></tr>$"

|

||||

)

|

||||

|

||||

def parse_jls(self, datasrc):

|

||||

rsp = b""

|

||||

while True:

|

||||

buf = remainder + datasrc.read(4096)

|

||||

# print('[{}]'.format(buf.decode('utf-8')))

|

||||

buf = datasrc.read(1024 * 32)

|

||||

if not buf:

|

||||

break

|

||||

|

||||

remainder = b""

|

||||

endpos = buf.rfind(b"\n")

|

||||

if endpos >= 0:

|

||||

remainder = buf[endpos + 1 :]

|

||||

buf = buf[:endpos]

|

||||

rsp += buf

|

||||

|

||||

lines = buf.decode("utf-8").split("\n")

|

||||

for line in lines:

|

||||

m = ptn.match(line)

|

||||

if not m:

|

||||

# print(line)

|

||||

continue

|

||||

rsp = json.loads(rsp.decode("utf-8"))

|

||||

ret = []

|

||||

for statfun, nodes in [

|

||||

[self.stat_dir, rsp["dirs"]],

|

||||

[self.stat_file, rsp["files"]],

|

||||

]:

|

||||

for n in nodes:

|

||||

fname = unquote(n["href"].split("?")[0]).rstrip(b"/").decode("wtf-8")

|

||||

if bad_good:

|

||||

fname = enwin(fname)

|

||||

|

||||

ftype, fname, fsize, fdate = m.groups()

|

||||

fname = html_dec(fname)

|

||||

ts = datetime.strptime(fdate, "%Y-%m-%d %H:%M:%S").timestamp()

|

||||

sz = int(fsize)

|

||||

if ftype == "-":

|

||||

ret.append([fname, self.stat_file(ts, sz), 0])

|

||||

else:

|

||||

ret.append([fname, self.stat_dir(ts, sz), 0])

|

||||

ret.append([fname, statfun(n["ts"], n["sz"]), 0])

|

||||

|

||||

return ret

|

||||

|

||||
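The switch from `parse_html` to `parse_jls` replaces regex scraping with a JSON listing. The sketch below assumes only the response shape visible in the diff (`dirs` and `files` lists whose entries carry `href`, `ts` and `sz`); the sample payload and the `parse_listing` name are made up for illustration, and the real method wraps each entry in a stat object instead of a tuple.

```python
import json
from urllib.parse import unquote_to_bytes


def parse_listing(body):
    """Turn a JSON directory listing into (kind, name, ts, sz) tuples."""
    rsp = json.loads(body.decode("utf-8"))
    ret = []
    for kind, nodes in (("dir", rsp["dirs"]), ("file", rsp["files"])):
        for n in nodes:
            # drop any query string, percent-decode, strip the trailing slash of folders
            raw = unquote_to_bytes(n["href"].split("?")[0]).rstrip(b"/")
            name = raw.decode("utf-8", "surrogateescape")
            ret.append((kind, name, n["ts"], n["sz"]))
    return ret


# hypothetical payload, shaped like the fields the diff reads
sample = json.dumps(
    {
        "dirs": [{"href": "music/", "ts": 1600000000, "sz": 0}],
        "files": [{"href": "a%20b.txt?k=1", "ts": 1600000001, "sz": 5}],
    }
).encode("utf-8")

entries = parse_listing(sample)
```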

@@ -262,6 +304,7 @@ class CPPF(Fuse):

|

||||

Fuse.__init__(self, *args, **kwargs)

|

||||

|

||||

self.url = None

|

||||

self.pw = None

|

||||

|

||||

self.dircache = []

|

||||

self.dircache_mtx = threading.Lock()

|

||||

@@ -271,7 +314,7 @@ class CPPF(Fuse):

|

||||

|

||||

def init2(self):

|

||||

# TODO figure out how python-fuse wanted this to go

|

||||

self.gw = Gateway(self.url) # .decode('utf-8'))

|

||||

self.gw = Gateway(self.url, self.pw) # .decode('utf-8'))

|

||||

info("up")

|

||||

|

||||

def clean_dircache(self):

|

||||

@@ -536,6 +579,8 @@ class CPPF(Fuse):

|

||||

|

||||

def getattr(self, path):

|

||||

log("getattr [{}]".format(path))

|

||||

if WINDOWS:

|

||||

path = enwin(path) # windows occasionally decodes f0xx to xx

|

||||

|

||||

path = path.strip("/")

|

||||

try:

|

||||

@@ -568,9 +613,25 @@ class CPPF(Fuse):

|

||||

|

||||

def main():

|

||||

time.strptime("19970815", "%Y%m%d") # python#7980

|

||||

register_wtf8()

|

||||

if WINDOWS:

|

||||

os.system("rem")

|

||||

|

||||

for ch in '<>:"\\|?*':

|

||||

# microsoft maps illegal characters to f0xx

|

||||

# (e000 to f8ff is basic-plane private-use)

|

||||

bad_good[ch] = chr(ord(ch) + 0xF000)

|

||||

|

||||

for n in range(0, 0x100):

|

||||

# map surrogateescape to another private-use area

|

||||

bad_good[chr(n + 0xDC00)] = chr(n + 0xF100)

|

||||

|

||||

for k, v in bad_good.items():

|

||||

good_bad[v] = k

|

||||

|

||||

server = CPPF()

|

||||

server.parser.add_option(mountopt="url", metavar="BASE_URL", default=None)

|

||||

server.parser.add_option(mountopt="pw", metavar="PASSWORD", default=None)

|

||||

server.parse(values=server, errex=1)

|

||||

if not server.url or not str(server.url).startswith("http"):

|

||||

print("\nerror:")

|

||||
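The character tables built in `main()` can be exercised on their own. This sketch rebuilds `bad_good` and `good_bad` as in the hunk above and applies them per character, matching the `"".join(...)` form of `enwin` and `dewin`; building the reverse table with a dict comprehension is the only liberty taken.

```python
bad_good = {}

# characters that are illegal in Windows filenames move to U+F0xx
for ch in '<>:"\\|?*':
    bad_good[ch] = chr(ord(ch) + 0xF000)

# lone surrogates from surrogateescape move to U+F1xx
for n in range(0x100):
    bad_good[chr(n + 0xDC00)] = chr(n + 0xF100)

good_bad = {v: k for k, v in bad_good.items()}


def enwin(txt):
    # server-side name -> name that Windows accepts
    return "".join(bad_good.get(c, c) for c in txt)


def dewin(txt):
    # Windows-safe name -> original server-side name
    return "".join(good_bad.get(c, c) for c in txt)


safe = enwin("a:b?.txt")
```

Because both target ranges lie in the basic-plane private-use area and do not overlap, the mapping is reversible for any name that does not already contain those code points.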

@@ -578,7 +639,7 @@ def main():

|

||||

print(" need argument: mount-path")

|

||||

print("example:")

|

||||

print(

|

||||

" ./copyparty-fuseb.py -f -o allow_other,auto_unmount,nonempty,url=http://192.168.1.69:3923 /mnt/nas"

|

||||

" ./copyparty-fuseb.py -f -o allow_other,auto_unmount,nonempty,pw=wark,url=http://192.168.1.69:3923 /mnt/nas"

|

||||

)

|

||||

sys.exit(1)

|

||||

|

||||

|

||||

@@ -6,9 +6,13 @@ some of these rely on libraries which are not MIT-compatible

|

||||

|

||||

* [audio-bpm.py](./audio-bpm.py) detects the BPM of music using the BeatRoot Vamp Plugin; imports GPL2

|

||||

* [audio-key.py](./audio-key.py) detects the melodic key of music using the Mixxx fork of keyfinder; imports GPL3

|

||||

* [media-hash.py](./media-hash.py) generates checksums for audio and video streams; uses FFmpeg (LGPL or GPL)

|

||||

|

||||

these do not have any problematic dependencies:

|

||||

these invoke standalone programs which are GPL or similar, so they are legally fine for most purposes:

|

||||

|

||||

* [media-hash.py](./media-hash.py) generates checksums for audio and video streams; uses FFmpeg (LGPL or GPL)

|

||||

* [image-noexif.py](./image-noexif.py) removes exif tags from images; uses exiftool (GPLv1 or artistic-license)

|

||||

|

||||

these do not have any problematic dependencies at all:

|

||||

|

||||

* [cksum.py](./cksum.py) computes various checksums

|

||||

* [exe.py](./exe.py) grabs metadata from .exe and .dll files (example for retrieving multiple tags with one parser)

|

||||

|

||||

@@ -19,18 +19,18 @@ dep: ffmpeg

|

||||

def det(tf):

|

||||

# fmt: off

|

||||

sp.check_call([

|

||||

"ffmpeg",

|

||||

"-nostdin",

|

||||

"-hide_banner",

|

||||

"-v", "fatal",

|

||||

"-ss", "13",

|

||||

"-y", "-i", fsenc(sys.argv[1]),

|

||||

"-map", "0:a:0",

|

||||

"-ac", "1",

|

||||

"-ar", "22050",

|

||||

"-t", "300",

|

||||

"-f", "f32le",

|

||||

tf

|

||||

b"ffmpeg",

|

||||

b"-nostdin",

|

||||

b"-hide_banner",

|

||||

b"-v", b"fatal",

|

||||

b"-ss", b"13",

|

||||

b"-y", b"-i", fsenc(sys.argv[1]),

|

||||

b"-map", b"0:a:0",

|

||||

b"-ac", b"1",

|

||||

b"-ar", b"22050",

|

||||

b"-t", b"300",

|

||||

b"-f", b"f32le",

|

||||

fsenc(tf)

|

||||

])

|

||||

# fmt: on

|

||||

|

||||

|

||||

@@ -23,15 +23,15 @@ dep: ffmpeg

|

||||

def det(tf):

|

||||

# fmt: off

|

||||

sp.check_call([

|

||||

"ffmpeg",

|

||||

"-nostdin",

|

||||

"-hide_banner",

|

||||

"-v", "fatal",

|

||||

"-y", "-i", fsenc(sys.argv[1]),

|

||||

"-map", "0:a:0",

|

||||

"-t", "300",

|

||||

"-sample_fmt", "s16",

|

||||

tf

|

||||

b"ffmpeg",

|

||||

b"-nostdin",

|

||||

b"-hide_banner",

|

||||

b"-v", b"fatal",

|

||||

b"-y", b"-i", fsenc(sys.argv[1]),

|

||||

b"-map", b"0:a:0",

|

||||

b"-t", b"300",

|

||||

b"-sample_fmt", b"s16",

|

||||

fsenc(tf)

|

||||

])

|

||||

# fmt: on

|

||||

|

||||

|

||||

93

bin/mtag/image-noexif.py

Normal file

@@ -0,0 +1,93 @@

|

||||

#!/usr/bin/env python3

|

||||

|

||||

"""

|

||||

remove exif tags from uploaded images

|

||||

|

||||

dependencies:

|

||||

exiftool

|

||||

|

||||

about:

|

||||

creates a "noexif" subfolder and puts exif-stripped copies of each image there,

|

||||

the reason for the subfolder is to avoid issues with the up2k.db / deduplication:

|

||||

|

||||

if the original image is modified in-place, then copyparty will keep the original

|

||||

hash in up2k.db for a while (until the next volume rescan), so if the image is

|

||||

reuploaded after a rescan then the upload will be renamed and kept as a dupe

|

||||

|

||||

alternatively you could switch the logic around, making a copy of the original

|

||||

image into a subfolder named "exif" and modify the original in-place, but then

|

||||

up2k.db will be out of sync until the next rescan, so any additional uploads

|

||||

of the same image will get symlinked (deduplicated) to the modified copy

|

||||

instead of the original in "exif"

|

||||

|

||||

or maybe delete the original image after processing, that would kinda work too

|

||||

|

||||

example copyparty config to use this:

|

||||

-v/mnt/nas/pics:pics:rwmd,ed:c,e2ts,mte=+noexif:c,mtp=noexif=ejpg,ejpeg,ad,bin/mtag/image-noexif.py

|

||||

|

||||

explained:

|

||||

for realpath /mnt/nas/pics (served at /pics) with read-write-modify-delete for ed,

|

||||

enable file analysis on upload (e2ts),

|

||||

append "noexif" to the list of known tags (mte),

|

||||

and use mtp plugin "bin/mtag/image-noexif.py" to provide that tag,

|

||||

do this on all uploads with the file extension "jpg" or "jpeg",

|

||||

ad = parse file regardless of whether FFmpeg thinks it is audio or not

|

||||

|

||||

PS: this requires e2ts to be functional,

|

||||

meaning you need to do at least one of these:

|

||||

* apt install ffmpeg

|

||||

* pip3 install mutagen

|

||||

and your python must have sqlite3 support compiled in

|

||||

"""

|

||||

|

||||

|

||||

import os

|

||||

import sys

|

||||

import time

|

||||

import filecmp

|

||||

import subprocess as sp

|

||||

|

||||

try:

|

||||

from copyparty.util import fsenc

|

||||

except:

|

||||

|

||||

def fsenc(p):

|

||||

return p.encode("utf-8")

|

||||

|

||||

|

||||

def main():

|

||||

cwd, fn = os.path.split(sys.argv[1])

|

||||

if os.path.basename(cwd) == "noexif":

|

||||

return

|

||||

|

||||

os.chdir(cwd)

|

||||

f1 = fsenc(fn)

|

||||

f2 = os.path.join(b"noexif", f1)

|

||||

cmd = [

|

||||

b"exiftool",

|

||||

b"-exif:all=",

|

||||

b"-iptc:all=",

|

||||

b"-xmp:all=",

|

||||

b"-P",

|

||||

b"-o",

|

||||

b"noexif/",

|

||||

b"--",

|

||||

f1,

|

||||

]

|

||||

sp.check_output(cmd)

|

||||

if not os.path.exists(f2):

|

||||

print("failed")

|

||||

return

|

||||

|

||||

if filecmp.cmp(f1, f2, shallow=False):

|

||||

print("clean")

|

||||

else:

|

||||

print("exif")

|

||||

|

||||

# lastmod = os.path.getmtime(f1)

|

||||

# times = (int(time.time()), int(lastmod))

|

||||

# os.utime(f2, times)

|

||||

|

||||

|

||||

if __name__ == "__main__":

|

||||

main()

|

||||
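The tag value printed by `image-noexif.py` comes from a byte-for-byte comparison of the original and the stripped copy. A minimal sketch of that decision follows, with the exiftool call left out; `classify` is a hypothetical name and the files are throwaway temp files, not real images.

```python
import filecmp
import os
import tempfile


def classify(original, stripped):
    """Mirror the script's three outcomes: failed, clean, or exif."""
    if not os.path.exists(stripped):
        return "failed"  # the stripping tool produced no output file

    # shallow=False forces a content comparison instead of trusting size and mtime
    if filecmp.cmp(original, stripped, shallow=False):
        return "clean"  # nothing was removed, so the copy is identical

    return "exif"  # the copy differs, so metadata was stripped


def demo():
    with tempfile.TemporaryDirectory() as td:
        src = os.path.join(td, "a.jpg")
        same = os.path.join(td, "same.jpg")
        diff = os.path.join(td, "diff.jpg")
        for path, data in ((src, b"img+exif"), (same, b"img+exif"), (diff, b"img")):
            with open(path, "wb") as f:
                f.write(data)

        missing = os.path.join(td, "missing.jpg")
        return classify(src, same), classify(src, diff), classify(src, missing)
```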

@@ -4,8 +4,8 @@ set -e

|

||||

|

||||

# install dependencies for audio-*.py

|

||||

#

|

||||

# linux/alpine: requires {python3,ffmpeg,fftw}-dev py3-{wheel,pip} py3-numpy{,-dev} vamp-sdk-dev patchelf cmake

|

||||

# linux/debian: requires libav{codec,device,filter,format,resample,util}-dev {libfftw3,python3}-dev python3-{numpy,pip} vamp-{plugin-sdk,examples} patchelf cmake

|

||||

# linux/alpine: requires gcc g++ make cmake patchelf {python3,ffmpeg,fftw,libsndfile}-dev py3-{wheel,pip} py3-numpy{,-dev}

|

||||

# linux/debian: requires libav{codec,device,filter,format,resample,util}-dev {libfftw3,python3,libsndfile1}-dev python3-{numpy,pip} vamp-{plugin-sdk,examples} patchelf cmake

|

||||

# win64: requires msys2-mingw64 environment

|

||||

# macos: requires macports

|

||||

#

|

||||

@@ -101,8 +101,11 @@ export -f dl_files

|

||||

|

||||

|

||||

github_tarball() {

|

||||

rm -rf g

|

||||

mkdir g

|

||||

cd g

|

||||

dl_text "$1" |

|

||||

tee json |

|

||||

tee ../json |

|

||||

(

|

||||

# prefer jq if available

|

||||

jq -r '.tarball_url' ||

|

||||

@@ -111,8 +114,11 @@ github_tarball() {

|

||||

awk -F\" '/"tarball_url": "/ {print$4}'

|

||||

) |

|

||||

tee /dev/stderr |

|

||||

head -n 1 |

|

||||

tr -d '\r' | tr '\n' '\0' |

|

||||

xargs -0 bash -c 'dl_files "$@"' _

|

||||

mv * ../tgz

|

||||

cd ..

|

||||

}

|

||||

|

||||

|

||||

@@ -127,6 +133,7 @@ gitlab_tarball() {

|

||||

tr \" '\n' | grep -E '\.tar\.gz$' | head -n 1

|

||||

) |

|

||||

tee /dev/stderr |

|

||||

head -n 1 |

|

||||

tr -d '\r' | tr '\n' '\0' |

|

||||

tee links |

|

||||

xargs -0 bash -c 'dl_files "$@"' _

|

||||

@@ -138,10 +145,17 @@ install_keyfinder() {

|

||||

# use msys2 in mingw-w64 mode

|

||||

# pacman -S --needed mingw-w64-x86_64-{ffmpeg,python}

|

||||

|

||||

github_tarball https://api.github.com/repos/mixxxdj/libkeyfinder/releases/latest

|

||||

[ -e $HOME/pe/keyfinder ] && {

|

||||

echo found a keyfinder build in ~/pe, skipping

|

||||

return

|

||||

}

|

||||

|

||||

tar -xf mixxxdj-libkeyfinder-*

|

||||

rm -- *.tar.gz

|

||||

cd "$td"

|

||||

github_tarball https://api.github.com/repos/mixxxdj/libkeyfinder/releases/latest

|

||||

ls -al

|

||||

|

||||

tar -xf tgz

|

||||

rm tgz

|

||||

cd mixxxdj-libkeyfinder*

|

||||

|

||||

h="$HOME"

|

||||

@@ -208,6 +222,22 @@ install_vamp() {

|

||||

|

||||

$pybin -m pip install --user vamp

|

||||

|

||||

cd "$td"

|

||||

echo '#include <vamp-sdk/Plugin.h>' | gcc -x c -c -o /dev/null - || [ -e ~/pe/vamp-sdk ] || {

|

||||

printf '\033[33mcould not find the vamp-sdk, building from source\033[0m\n'

|

||||

(dl_files yolo https://code.soundsoftware.ac.uk/attachments/download/2588/vamp-plugin-sdk-2.9.0.tar.gz)

|

||||

sha512sum -c <(

|

||||

echo "7ef7f837d19a08048b059e0da408373a7964ced452b290fae40b85d6d70ca9000bcfb3302cd0b4dc76cf2a848528456f78c1ce1ee0c402228d812bd347b6983b -"

|

||||

) <vamp-plugin-sdk-2.9.0.tar.gz

|

||||

tar -xf vamp-plugin-sdk-2.9.0.tar.gz

|

||||

rm -- *.tar.gz

|

||||

ls -al

|

||||

cd vamp-plugin-sdk-*

|

||||

./configure --prefix=$HOME/pe/vamp-sdk

|

||||

make -j1 install

|

||||

}

|

||||

|

||||

cd "$td"

|

||||

have_beatroot || {

|

||||

printf '\033[33mcould not find the vamp beatroot plugin, building from source\033[0m\n'

|

||||

(dl_files yolo https://code.soundsoftware.ac.uk/attachments/download/885/beatroot-vamp-v1.0.tar.gz)

|

||||

@@ -215,8 +245,11 @@ install_vamp() {

|

||||

echo "1f444d1d58ccf565c0adfe99f1a1aa62789e19f5071e46857e2adfbc9d453037bc1c4dcb039b02c16240e9b97f444aaff3afb625c86aa2470233e711f55b6874 -"

|

||||

) <beatroot-vamp-v1.0.tar.gz

|

||||

tar -xf beatroot-vamp-v1.0.tar.gz

|

||||

rm -- *.tar.gz

|

||||

cd beatroot-vamp-v1.0

|

||||

make -f Makefile.linux -j4

|

||||

[ -e ~/pe/vamp-sdk ] &&

|

||||

sed -ri 's`^(CFLAGS :=.*)`\1 -I'$HOME'/pe/vamp-sdk/include`' Makefile.linux

|

||||

make -f Makefile.linux -j4 LDFLAGS=-L$HOME/pe/vamp-sdk/lib

|

||||

# /home/ed/vamp /home/ed/.vamp /usr/local/lib/vamp

|

||||

mkdir ~/vamp

|

||||

cp -pv beatroot-vamp.* ~/vamp/

|

||||

@@ -230,6 +263,7 @@ install_vamp() {

|

||||

|

||||

# not in use because it kinda segfaults, also no windows support

|

||||

install_soundtouch() {

|

||||

cd "$td"

|

||||

gitlab_tarball https://gitlab.com/api/v4/projects/soundtouch%2Fsoundtouch/releases

|

||||

|

||||

tar -xvf soundtouch-*

|

||||

|

||||

@@ -13,7 +13,7 @@ try:

|

||||

except:

|

||||

|

||||

def fsenc(p):

|

||||

return p

|

||||

return p.encode("utf-8")

|

||||

|

||||

|

||||

"""

|

||||

@@ -24,13 +24,13 @@ dep: ffmpeg

|

||||

def det():

|

||||

# fmt: off

|

||||

cmd = [

|

||||

"ffmpeg",

|

||||

"-nostdin",

|

||||

"-hide_banner",

|

||||

"-v", "fatal",

|

||||

"-i", fsenc(sys.argv[1]),

|

||||

"-f", "framemd5",

|

||||

"-"

|

||||

b"ffmpeg",

|

||||

b"-nostdin",

|

||||

b"-hide_banner",

|

||||

b"-v", b"fatal",

|

||||

b"-i", fsenc(sys.argv[1]),

|

||||

b"-f", b"framemd5",

|

||||

b"-"

|

||||

]

|

||||

# fmt: on

|

||||

|

||||

|

||||
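The three ffmpeg hunks above make the same change: every argv element becomes `bytes`, and the fallback `fsenc` now encodes its argument. The diff does not state the motivation, so the sketch below only illustrates the resulting shape, a command list whose elements all share one type; `build_cmd` is a hypothetical helper and the filename is an example.

```python
def fsenc(p):
    # fallback used when copyparty.util is not importable, as in the diff
    return p.encode("utf-8")


def build_cmd(path):
    """Build the framemd5 command from the media-hash hunk as an all-bytes argv."""
    # fmt: off
    return [
        b"ffmpeg",
        b"-nostdin",
        b"-hide_banner",
        b"-v", b"fatal",
        b"-i", fsenc(path),
        b"-f", b"framemd5",
        b"-",
    ]
    # fmt: on


cmd = build_cmd("sång.flac")
```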

21

bin/mtag/res/twitter-unmute.user.js

Normal file

@@ -0,0 +1,21 @@

|

||||

// ==UserScript==

|

||||

// @name twitter-unmute

|

||||

// @namespace http://ocv.me/

|

||||

// @version 0.1

|

||||

// @description memes

|

||||

// @author ed <irc.rizon.net>

|

||||

// @match https://twitter.com/*

|

||||

// @icon https://www.google.com/s2/favicons?domain=twitter.com

|

||||

// @grant GM_addStyle

|

||||

// ==/UserScript==

|

||||

|

||||

function grunnur() {

|

||||

setInterval(function () {

|

||||

//document.querySelector('div[aria-label="Unmute"]').click();

|

||||

document.querySelector('video').muted = false;

|

||||

}, 200);

|

||||

}

|

||||

|

||||

var scr = document.createElement('script');

|

||||

scr.textContent = '(' + grunnur.toString() + ')();';

|

||||

(document.head || document.getElementsByTagName('head')[0]).appendChild(scr);

|

||||

139

bin/mtag/very-bad-idea.py

Executable file

@@ -0,0 +1,139 @@

|

||||

#!/usr/bin/env python3

|

||||

|

||||

"""

|

||||

use copyparty as a chromecast replacement:

|

||||

* post a URL and it will open in the default browser

|

||||

* upload a file and it will open in the default application

|

||||

* the `key` command simulates keyboard input

|

||||

* the `x` command executes other xdotool commands

|

||||

* the `c` command executes arbitrary unix commands

|

||||

|

||||

the android app makes it a breeze to post pics and links:

|

||||

https://github.com/9001/party-up/releases

|

||||

(iOS devices have to rely on the web-UI)

|

||||

|

||||

goes without saying, but this is HELLA DANGEROUS,

|

||||

GIVES RCE TO ANYONE WHO HAS UPLOAD PERMISSIONS

|

||||

|

||||

example copyparty config to use this:

|

||||

--urlform save,get -v.::w:c,e2d,e2t,mte=+a1:c,mtp=a1=ad,bin/mtag/very-bad-idea.py

|

||||

|

||||

recommended deps:

|

||||

apt install xdotool libnotify-bin

|

||||

https://github.com/9001/copyparty/blob/hovudstraum/contrib/plugins/meadup.js

|

||||

|

||||

and you probably want `twitter-unmute.user.js` from the res folder

|

||||

|

||||

|

||||

-----------------------------------------------------------------------

|

||||

-- startup script:

|

||||

-----------------------------------------------------------------------

|

||||

|

||||

#!/bin/bash

|

||||

set -e

|

||||

|

||||

# create qr code

|

||||

ip=$(ip r | awk '/^default/{print$(NF-2)}'); echo http://$ip:3923/ | qrencode -o - -s 4 >/dev/shm/cpp-qr.png

|

||||

/usr/bin/feh -x /dev/shm/cpp-qr.png &

|

||||

|

||||

# reposition and make topmost (with janky raspbian support)

|

||||

( sleep 0.5

|

||||

xdotool search --name cpp-qr.png windowactivate --sync windowmove 1780 0

|

||||

wmctrl -r :ACTIVE: -b toggle,above || true

|

||||

|

||||

ps aux | grep -E 'sleep[ ]7\.27' ||

|

||||

while true; do

|

||||

w=$(xdotool getactivewindow)

|

||||

xdotool search --name cpp-qr.png windowactivate windowraise windowfocus

|

||||

xdotool windowactivate $w

|

||||

xdotool windowfocus $w

|

||||

sleep 7.27 || break

|

||||

done &

|

||||

xeyes # distraction window to prevent ^w from closing the qr-code

|

||||

) &

|

||||

|

||||

# bail if copyparty is already running

|

||||

ps aux | grep -E '[3] copy[p]arty' && exit 0

|

||||

|

||||

# dumb chrome wrapper to allow autoplay

|

||||

cat >/usr/local/bin/chromium-browser <<'EOF'

|

||||

#!/bin/bash

|

||||

set -e

|

||||

/usr/bin/chromium-browser --autoplay-policy=no-user-gesture-required "$@"

|

||||

EOF

|

||||

chmod 755 /usr/local/bin/chromium-browser

|

||||

|

||||

# start the server (note: replace `-v.::rw:` with `-v.::r:` to disallow retrieving uploaded stuff)

|

||||

cd ~/Downloads; python3 copyparty-sfx.py --urlform save,get -v.::rw:c,e2d,e2t,mte=+a1:c,mtp=a1=ad,very-bad-idea.py

|

||||

|

||||

"""

|

||||

|

||||

|

||||

import os

|

||||

import sys

|

||||

import time

|

||||

import subprocess as sp

|

||||

from urllib.parse import unquote_to_bytes as unquote

|

||||

|

||||

|

||||

def main():

|

||||

fp = os.path.abspath(sys.argv[1])

|

||||

with open(fp, "rb") as f:

|

||||

txt = f.read(4096)

|

||||

|

||||

if txt.startswith(b"msg="):

|

||||

open_post(txt)

|

||||

else:

|

||||

open_url(fp)

|

||||

|

||||

|

||||

def open_post(txt):

|

||||

txt = unquote(txt.replace(b"+", b" ")).decode("utf-8")[4:]

|

||||

try:

|

||||

k, v = txt.split(" ", 1)

|

||||

except:

|

||||

open_url(txt)
return  # k and v are unbound when the split fails

|

||||

|

||||

if k == "key":

|

||||

sp.call(["xdotool", "key"] + v.split(" "))

|

||||

elif k == "x":

|

||||

sp.call(["xdotool"] + v.split(" "))

|

||||

elif k == "c":

|

||||

env = os.environ.copy()

|

||||

while " " in v:

|

||||

v1, v2 = v.split(" ", 1)

|

||||

if "=" not in v1:

|

||||

break

|

||||

|

||||

ek, ev = v1.split("=", 1)

|

||||

env[ek] = ev

|

||||

v = v2

|

||||

|

||||

sp.call(v.split(" "), env=env)

|

||||

else:

|

||||

open_url(txt)

|

||||

|

||||

|

||||

def open_url(txt):

|

||||

ext = txt.rsplit(".")[-1].lower()

|

||||

sp.call(["notify-send", "--", txt])

|

||||

if ext not in ["jpg", "jpeg", "png", "gif", "webp"]:

|

||||

# sp.call(["wmctrl", "-c", ":ACTIVE:"]) # closes the active window correctly

|

||||

sp.call(["killall", "vlc"])

|

||||

sp.call(["killall", "mpv"])

|

||||

sp.call(["killall", "feh"])

|

||||

time.sleep(0.5)

|

||||

for _ in range(20):

|

||||

sp.call(["xdotool", "key", "ctrl+w"]) # closes the open tab correctly

|

||||

# else:

|

||||

# sp.call(["xdotool", "getactivewindow", "windowminimize"]) # minimizes the focused window

|

||||

|

||||

# close any error messages:

|

||||

sp.call(["xdotool", "search", "--name", "Error", "windowclose"])

|

||||

# sp.call(["xdotool", "key", "ctrl+alt+d"]) # doesnt work at all

|

||||

# sp.call(["xdotool", "keydown", "--delay", "100", "ctrl+alt+d"])

|

||||

# sp.call(["xdotool", "keyup", "ctrl+alt+d"])

|

||||

sp.call(["xdg-open", txt])

|

||||

|

||||

|

||||

main()

|

||||
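The `c` branch of `open_post` peels leading `NAME=value` words off the command and turns them into environment variables before running the rest. A sketch of just that parsing step, with no process spawned; `split_env_prefix` and its `base_env` parameter are hypothetical names added so the logic can be tested without touching `os.environ`.

```python
import os


def split_env_prefix(cmdline, base_env=None):
    """Split 'A=1 B=2 prog arg' into (env, ['prog', 'arg'])."""
    env = dict(os.environ if base_env is None else base_env)
    v = cmdline
    while " " in v:
        head, rest = v.split(" ", 1)
        if "=" not in head:
            break  # first word without '=' starts the actual command

        key, val = head.split("=", 1)
        env[key] = val
        v = rest

    # same naive whitespace split as the script, so quoting is not supported
    return env, v.split(" ")


env, argv = split_env_prefix("DISPLAY=:0 LANG=C xeyes -geometry 100x100", {})
```

As in the script, a final word containing `=` is kept as the command, because the loop only consumes a word when something follows it.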

177

bin/partyjournal.py

Executable file

@@ -0,0 +1,177 @@

|

||||

#!/usr/bin/env python3

|

||||

|

||||

"""

|

||||

partyjournal.py: chronological history of uploads

|

||||

2021-12-31, v0.1, ed <irc.rizon.net>, MIT-Licensed

|

||||

https://github.com/9001/copyparty/blob/hovudstraum/bin/partyjournal.py

|

||||

|

||||

produces a chronological list of all uploads,

|

||||

by collecting info from up2k databases and the filesystem

|

||||

|

||||

specify subnet `192.168.1.*` with argument `.=192.168.1.`,

|

||||

affecting all successive mappings

|

||||

|

||||

usage:

|

||||

./partyjournal.py > partyjournal.html .=192.168.1. cart=125 steen=114 steen=131 sleepy=121 fscarlet=144 ed=101 ed=123

|

||||

|

||||

"""

|

||||

|

||||

import sys

|

||||

import base64

|

||||

import sqlite3

|

||||

import argparse

|

||||

from datetime import datetime

|

||||

from urllib.parse import quote_from_bytes as quote

|

||||

from urllib.parse import unquote_to_bytes as unquote

|

||||

|

||||

|

||||

FS_ENCODING = sys.getfilesystemencoding()

|

||||

|

||||

|

||||

class APF(argparse.ArgumentDefaultsHelpFormatter, argparse.RawDescriptionHelpFormatter):

|

||||

pass

|

||||

|

||||

|

||||

##

|

||||

## snibbed from copyparty

|

||||

|

||||

|

||||

def s3dec(v):

|

||||

if not v.startswith("//"):

|

||||

return v

|

||||

|

||||

v = base64.urlsafe_b64decode(v.encode("ascii")[2:])

|

||||

return v.decode(FS_ENCODING, "replace")

|

||||
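`s3dec` above treats any value starting with `//` as URL-safe base64 and returns everything else unchanged. The sketch below pairs it with a hypothetical inverse, `s3enc_sketch`, written only to demonstrate the round trip; it is an assumption and not necessarily how copyparty produces these values.

```python
import base64
import sys

FS_ENCODING = sys.getfilesystemencoding()


def s3enc_sketch(raw):
    # hypothetical inverse of s3dec, for illustration only
    return "//" + base64.urlsafe_b64encode(raw).decode("ascii")


def s3dec(v):
    if not v.startswith("//"):
        return v  # plain value, stored as-is

    raw = base64.urlsafe_b64decode(v.encode("ascii")[2:])
    return raw.decode(FS_ENCODING, "replace")


encoded = s3enc_sketch(b"abc")
```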

|

||||

|

||||

def quotep(txt):

|

||||

btxt = txt.encode("utf-8", "replace")

|

||||

quot1 = quote(btxt, safe=b"/")

|

||||